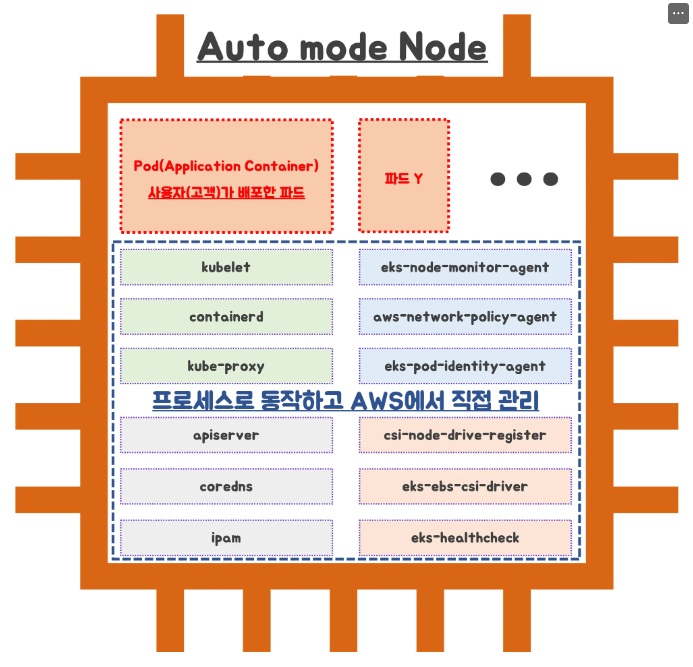

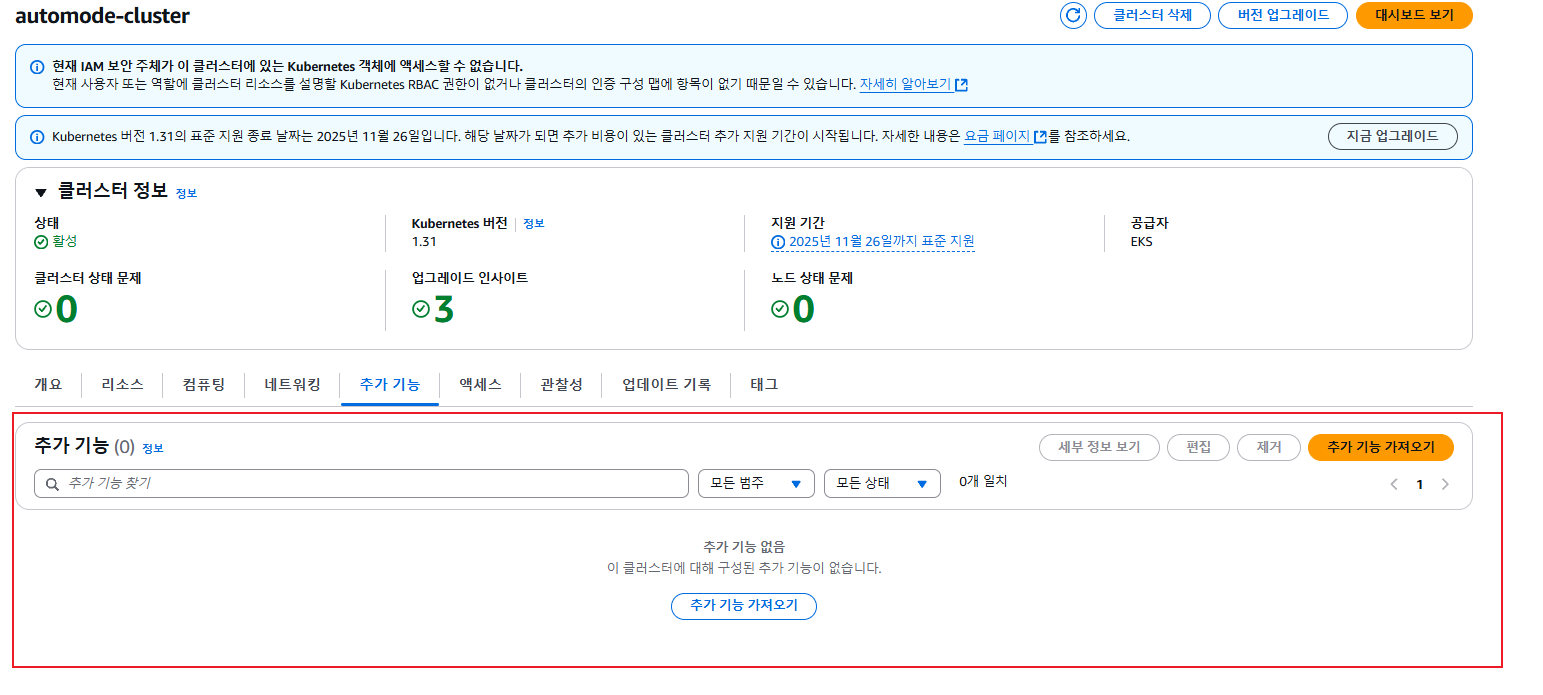

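What is Auto Mode?

A mode in which AWS manages and operates the components that you would otherwise have to install and configure yourself when running an EKS cluster.

- Karpenter, EKS Pod Identity, kube-proxy, CNI, Amazon EBS CSI Driver, AWS Load Balancer Controller

Key features of Auto Mode

- The six components above do not run as pods; they run on each node as systemd daemons (agents).

- Node expiry: 14 days by default, 21 days maximum

- Nodes are managed by Karpenter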
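
The node expiry shows up later in this post as `expireAfter: 336h` on the NodePool. As a rough sketch of how the day counts map to that hour-based string, the helper below converts days to hours and rejects values above the 21-day maximum. The function name `expire_after` and the constants are hypothetical and exist only for this illustration.

```python
MAX_NODE_LIFETIME_DAYS = 21      # Auto Mode upper bound stated in this post
DEFAULT_NODE_LIFETIME_DAYS = 14  # default stated in this post


def expire_after(days: int = DEFAULT_NODE_LIFETIME_DAYS) -> str:
    """Return an hour-based duration string such as '336h' for a lifetime in days."""
    if not 1 <= days <= MAX_NODE_LIFETIME_DAYS:
        raise ValueError(
            f"lifetime must be between 1 and {MAX_NODE_LIFETIME_DAYS} days"
        )
    return f"{days * 24}h"


if __name__ == "__main__":
    print(expire_after())    # default lifetime
    print(expire_after(21))  # maximum lifetime
```

The default of 14 days yields `336h`, which matches the value reported by `kubectl get nodepools -o yaml` further down.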

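Constraints

- Nodes use AMIs that are treated as immutable. These AMIs enforce locked-down software, enable SELinux mandatory access control, and provide a read-only root file system; SELinux enforcing mode and the read-only root file system block access to files on the AMI. Nodes launched by EKS Auto Mode also have a maximum lifetime of 21 days.

- Direct access to nodes is prevented by disallowing SSH and SSM access.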

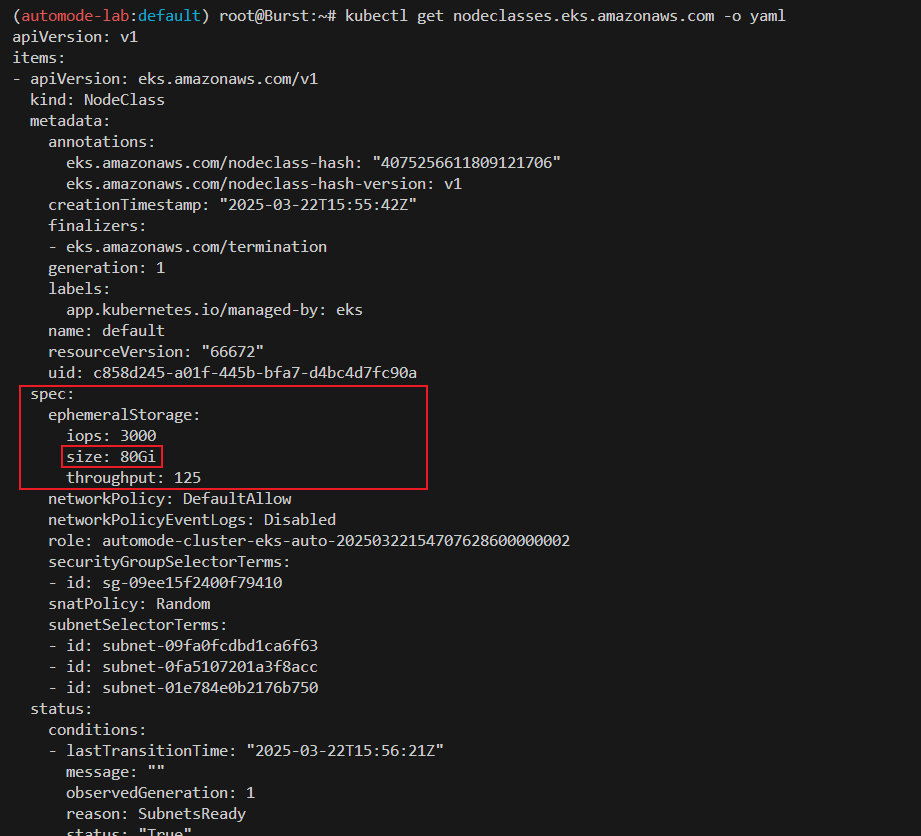

- Create node class: ephemeralStorage (80 GiB); at most 110 pods per node.

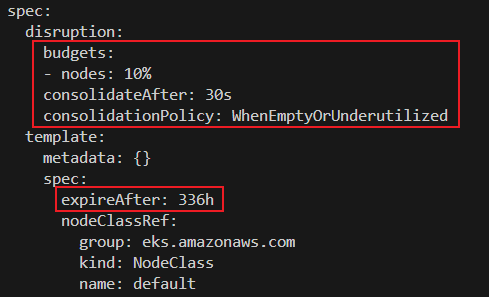

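- Create node pool: the built-in node pools can be enabled or disabled; disruption can be paused with budgets.

- Create ingress class: the IngressClassParams resource and parts of the API have changed; check which features are unsupported.

- Create StorageClass: provisioner: ebs.csi.eks.amazonaws.com; EBS performance Prometheus metrics are not accessible.

- Update Kubernetes version: during upgrades, self-managed add-ons and similar components remain the customer's responsibility.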

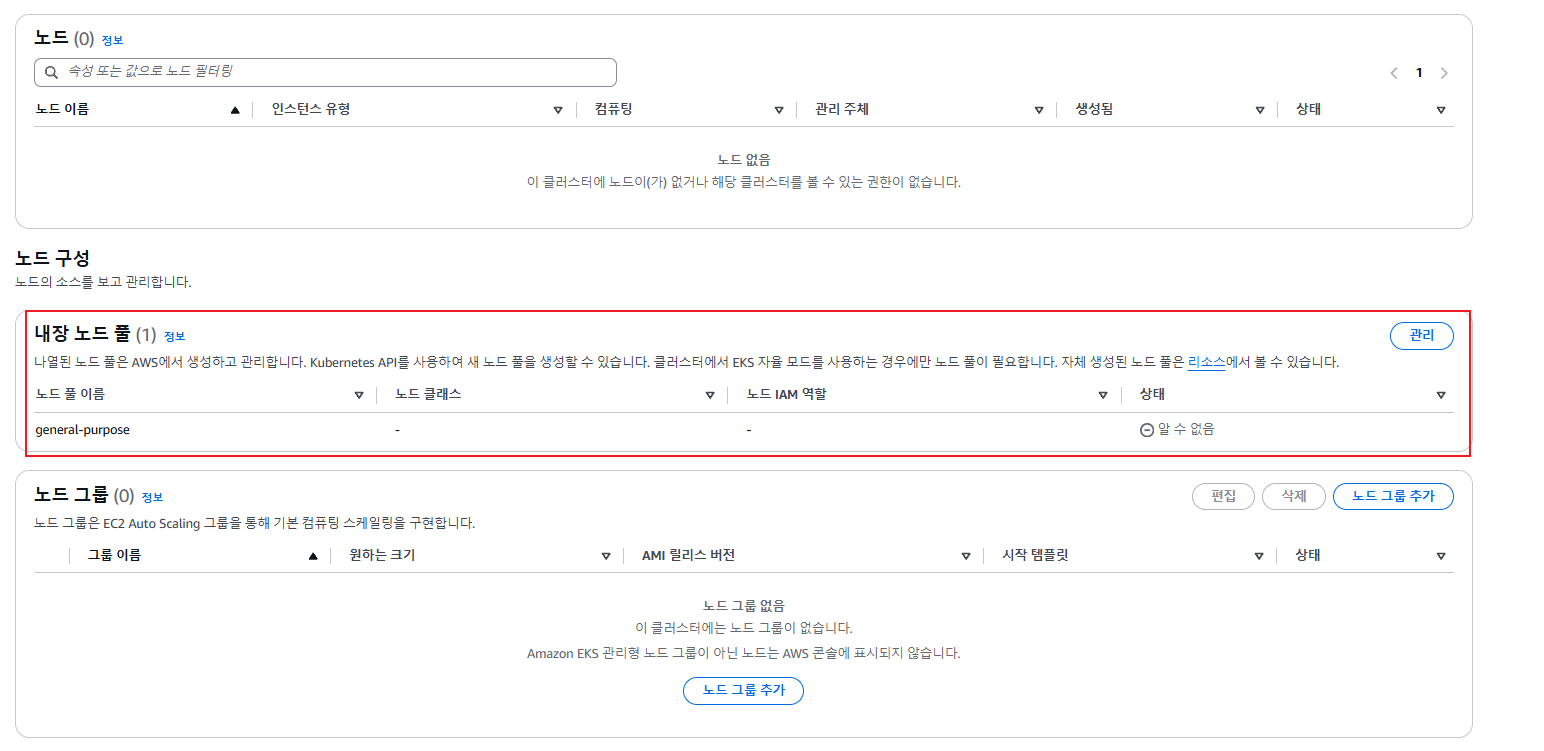

- Review built-in node pools: both are On-Demand; system (amd64, arm64) and general-purpose (amd64).

- Run critical add-ons: the system node pool deploys pods onto dedicated instances.

- Control deployment: in mixed mode, check carefully how to opt workloads in to or out of Auto Mode nodes.

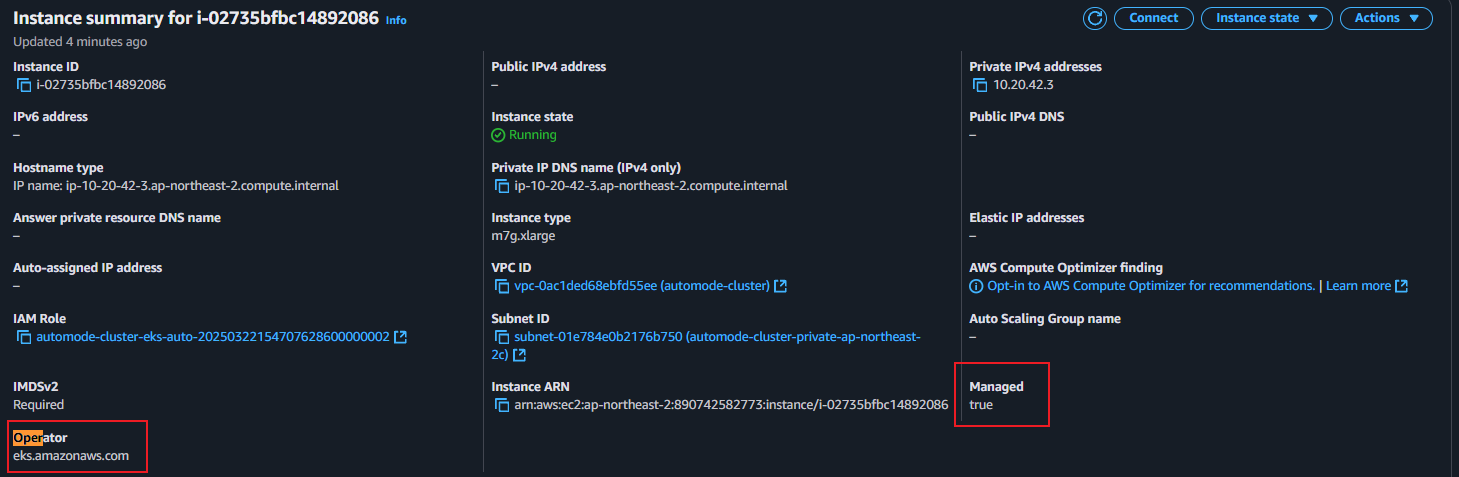

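- Managed Instances: AWS-owned managed instances (AWS manages and is responsible for the OS, CRI, kubelet, and so on)

- EC2 instances created by EKS Auto Mode differ from other EC2 instances: they are managed instances. They are owned by EKS and are more restricted. You cannot directly access instances managed by EKS Auto Mode or install software on them.

- EKS Auto Mode supports instance types with at least 1 vCPU; the nano, micro, and small sizes are not supported.

EKS Auto Mode deployment lab (https://github.com/aws-samples/sample-aws-eks-auto-mode)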

- Edit variables.tf: ap-northeast-2, 10.20.0.0/16

# Get the code: note that the deployment code contains no add-on definitions

git clone https://github.com/aws-samples/sample-aws-eks-auto-mode.git

cd sample-aws-eks-auto-mode/terraform

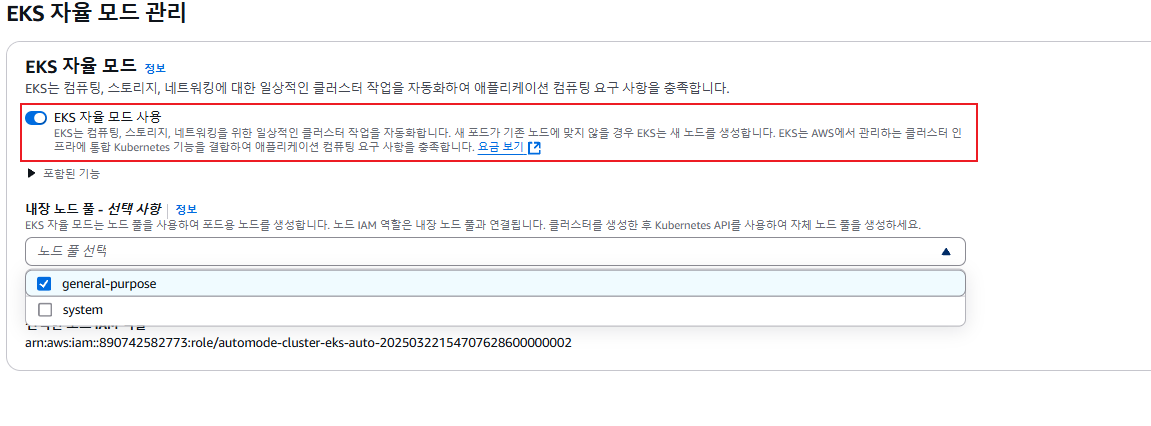

# eks.tf: "system" is a dedicated-instance node pool, so it is not added here

...

cluster_compute_config = {

enabled = true

node_pools = ["general-purpose"]

}

...

# Initialize and apply Terraform

terraform init

terraform plan

terraform apply -auto-approve

...

null_resource.create_nodepools_dir: Creating...

null_resource.create_nodepools_dir: Provisioning with 'local-exec'...

null_resource.create_nodepools_dir (local-exec): Executing: ["/bin/sh" "-c" "mkdir -p ./../nodepools"]

...

# Configure kubectl

cat setup.tf

ls -l ../nodepools

$(terraform output -raw configure_kubectl)

# Rename the kubectl context

kubectl ctx

kubectl config rename-context "arn:aws:eks:ap-northeast-2:$(aws sts get-caller-identity --query 'Account' --output text):cluster/automode-cluster" "automode-lab"

kubectl ns default

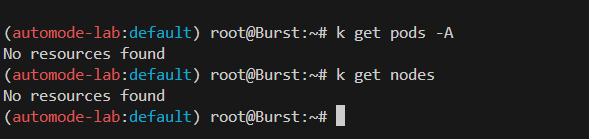

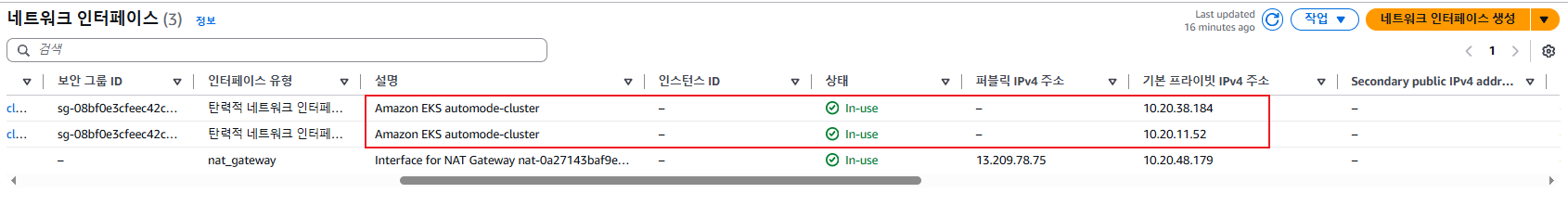

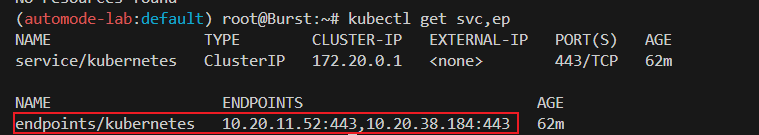

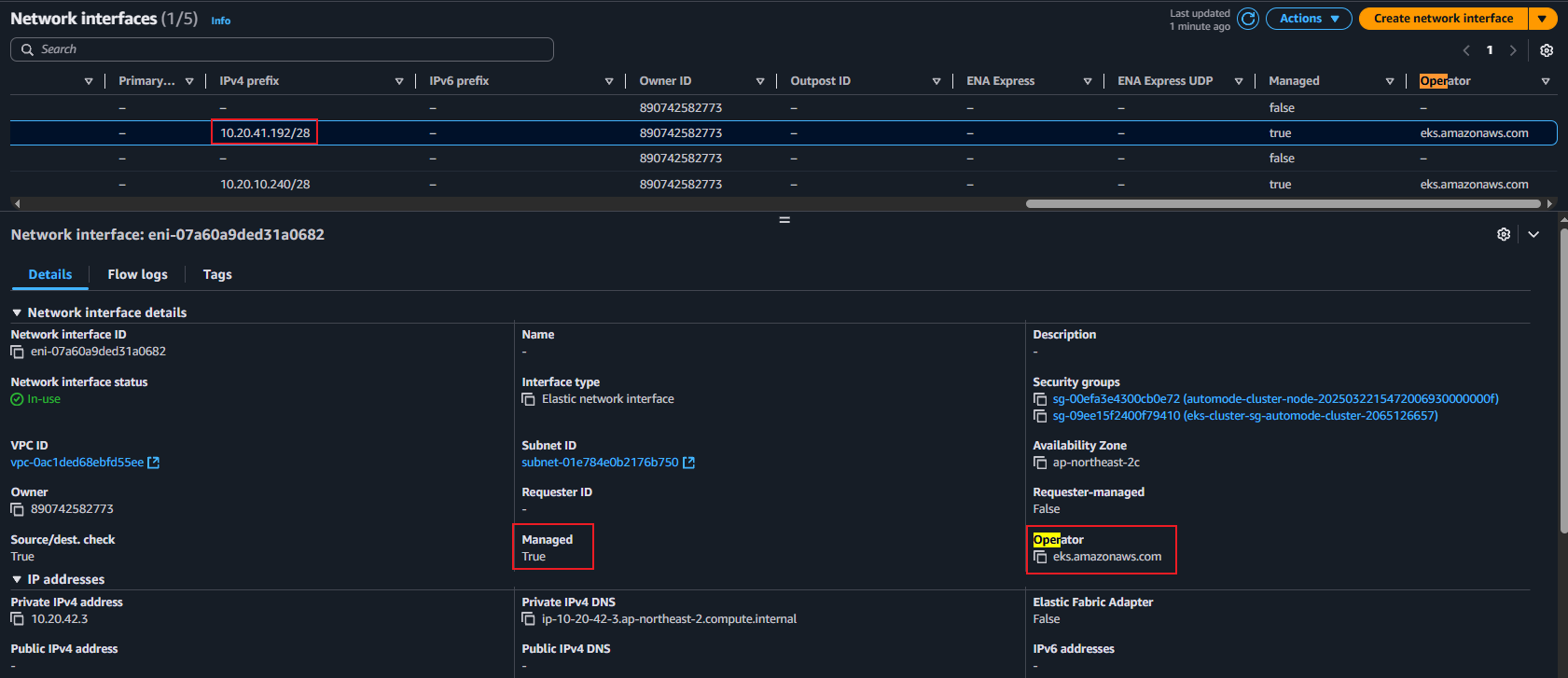

# Find the ENIs that hold the IPs below

kubectl get svc,ep

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 27m

NAME ENDPOINTS AGE

endpoints/kubernetes 10.20.22.204:443,10.20.40.216:443 27m

#

terraform state list

terraform show

terraform state show 'module.eks.aws_eks_cluster.this[0]'

...

compute_config {

enabled = true

node_pools = [

"general-purpose",

]

node_role_arn = "arn:aws:iam::911283464785:role/automode-cluster-eks-auto-20250316042752605600000003"

}

...

- Verify

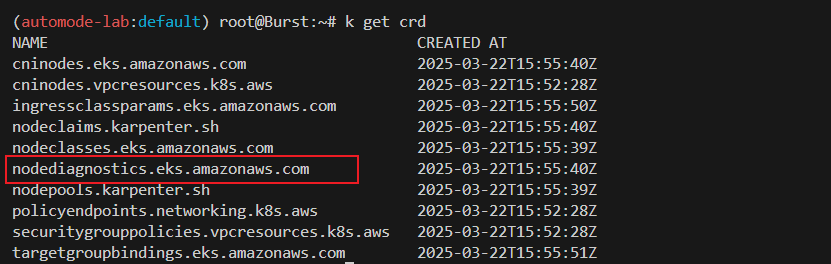

kubectl get crd

kubectl api-resources | grep -i node

nodes no v1 false Node

cninodes cni,cnis eks.amazonaws.com/v1alpha1 false CNINode

nodeclasses eks.amazonaws.com/v1 false NodeClass

nodediagnostics eks.amazonaws.com/v1alpha1 false NodeDiagnostic

nodeclaims karpenter.sh/v1 false NodeClaim

nodepools karpenter.sh/v1 false NodePool

runtimeclasses node.k8s.io/v1 false RuntimeClass

csinodes storage.k8s.io/v1 false CSINode

cninodes cnd vpcresources.k8s.aws/v1alpha1 false CNINode

# Nodes cannot be accessed directly, so a CRD is provided for diagnostics

kubectl explain nodediagnostics

GROUP: eks.amazonaws.com

KIND: NodeDiagnostic

VERSION: v1alpha1

DESCRIPTION:

The name of the NodeDiagnostic resource is meant to match the name of the

node which should perform the diagnostic tasks

#

kubectl get nodeclasses.eks.amazonaws.com

NAME ROLE READY AGE

default automode-cluster-eks-auto-20250314121820950800000003 True 29m

kubectl get nodeclasses.eks.amazonaws.com -o yaml

...

spec:

ephemeralStorage:

iops: 3000

size: 80Gi

throughput: 125

networkPolicy: DefaultAllow

networkPolicyEventLogs: Disabled

role: automode-cluster-eks-auto-20250314121820950800000003

securityGroupSelectorTerms:

- id: sg-05d210218e5817fa1

snatPolicy: Random # ???

subnetSelectorTerms:

- id: subnet-0539269140458ced5

- id: subnet-055dc112cdd434066

- id: subnet-0865f60e4a6d8ad5c

status:

...

instanceProfile: eks-ap-northeast-2-automode-cluster-4905473370491687283

securityGroups:

- id: sg-05d210218e5817fa1

name: eks-cluster-sg-automode-cluster-2065126657

subnets:

- id: subnet-0539269140458ced5

zone: ap-northeast-2a

zoneID: apne2-az1

- id: subnet-055dc112cdd434066

zone: ap-northeast-2b

zoneID: apne2-az2

- id: subnet-0865f60e4a6d8ad5c

zone: ap-northeast-2c

zoneID: apne2-az3

#

kubectl get nodepools

NAME NODECLASS NODES READY AGE

general-purpose default 0 True 33m

kubectl get nodepools -o yaml

...

spec:

disruption:

budgets:

- nodes: 10%

consolidateAfter: 30s

consolidationPolicy: WhenEmptyOrUnderutilized

template:

metadata: {}

spec:

expireAfter: 336h # 14 days

nodeClassRef:

group: eks.amazonaws.com

kind: NodeClass

name: default

requirements:

- key: karpenter.sh/capacity-type

operator: In

values:

- on-demand

- key: eks.amazonaws.com/instance-category

operator: In

values:

- c

- m

- r

- key: eks.amazonaws.com/instance-generation

operator: Gt

values:

- "4"

- key: kubernetes.io/arch

operator: In

values:

- amd64

- key: kubernetes.io/os

operator: In

values:

- linux

terminationGracePeriod: 24h0m0s

...

#

kubectl get mutatingwebhookconfiguration

kubectl get validatingwebhookconfiguration

- nodediagnostics CRD: in Auto Mode you cannot access nodes or inspect their state directly, so this CRD is provided by default for checking nodes indirectly.

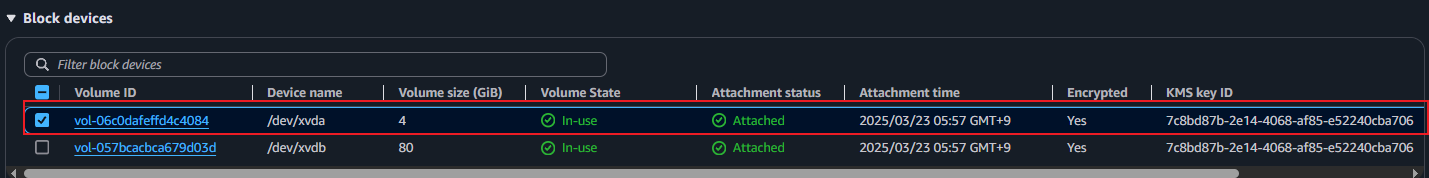

- ephemeralStorage

- Disk for data storage

- The root disk is read-only

- Check the budgets and the default node expiry
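
To make the `requirements` block of the general-purpose NodePool more concrete, here is a minimal sketch that checks whether an instance type would satisfy the three constraints shown in the output above (category in c/m/r, generation greater than 4, architecture amd64). The function `matches_general_purpose` and the regular expression are assumptions for illustration; the architecture is passed in by the caller because it cannot be derived reliably from the type name, and unusual names that the pattern does not cover are simply treated as non-matching.

```python
import re

# Requirements copied from the general-purpose NodePool output in this post.
ALLOWED_CATEGORIES = {"c", "m", "r"}
MIN_GENERATION_EXCLUSIVE = 4  # operator Gt with value "4"
ALLOWED_ARCH = {"amd64"}

# Hypothetical parser: leading letters = category, first digits = generation.
_TYPE_RE = re.compile(r"^(?P<category>[a-z]+)(?P<generation>\d+)[a-z0-9-]*\.\w+$")


def matches_general_purpose(instance_type: str, arch: str) -> bool:
    """Return True if the type satisfies the category, generation and arch rules."""
    m = _TYPE_RE.match(instance_type)
    if m is None:
        return False  # name does not follow the simple pattern assumed here
    return (
        m["category"] in ALLOWED_CATEGORIES
        and int(m["generation"]) > MIN_GENERATION_EXCLUSIVE
        and arch in ALLOWED_ARCH
    )


if __name__ == "__main__":
    for itype, arch in [("c5a.large", "amd64"), ("m4.large", "amd64"),
                        ("c6g.xlarge", "arm64"), ("t3.medium", "amd64")]:
        print(itype, arch, matches_general_purpose(itype, arch))
```

Under these rules `c5a.large` on amd64 passes, which is consistent with the node type the lab provisions for kube-ops-view below.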

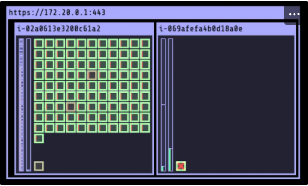

- Install kube-ops-view

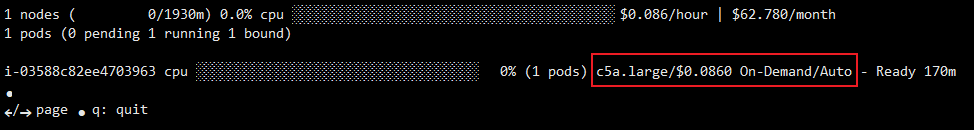

# Monitoring

eks-node-viewer --node-sort=eks-node-viewer/node-cpu-usage=dsc --extra-labels eks-node-viewer/node-age

watch -d kubectl get node,pod -A

# Deploy with Helm

helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system

kubectl get events -w --sort-by '.lastTimestamp' # Analyze the event log output

# Verify

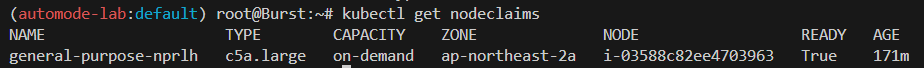

kubectl get nodeclaims

NAME TYPE CAPACITY ZONE NODE READY AGE

general-purpose-528mt c5a.large on-demand ap-northeast-2c i-09cf206aee76f0bee True 54s

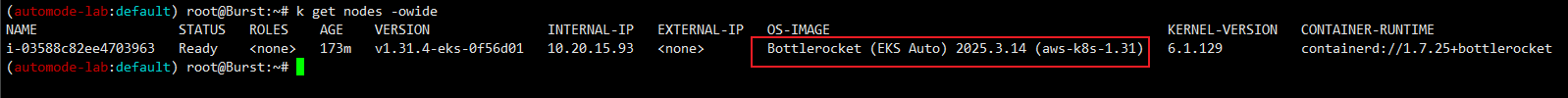

# Check the OS, kernel, and CRI

kubectl get node -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

i-09cf206aee76f0bee Ready <none> 2m14s v1.31.4-eks-0f56d01 10.20.44.40 <none> Bottlerocket (EKS Auto) 2025.3.9 (aws-k8s-1.31) 6.1.129 containerd://1.7.25+bottlerocket

# Check the CNI nodes

kubectl get cninodes.eks.amazonaws.com

NAME AGE

i-09cf206aee76f0bee 3m24s

#[New terminal] Port forwarding

kubectl port-forward deployment/kube-ops-view -n kube-system 8080:8080 &

# Check the access URLs: 1x, 1.5x, and 3x scale respectively

echo -e "KUBE-OPS-VIEW URL = http://localhost:8080"

echo -e "KUBE-OPS-VIEW URL = http://localhost:8080/#scale=1.5"

echo -e "KUBE-OPS-VIEW URL = http://localhost:8080/#scale=3"

open "http://127.0.0.1:8080/#scale=1.5" # macOS

Auto Mode lab (verifying Karpenter behavior)

# Step 1: Review existing compute resources (optional)

kubectl get nodepools

general-purpose

# Step 2: Deploy a sample application to the cluster

# eks.amazonaws.com/compute-type: auto selector requires the workload be deployed on an Amazon EKS Auto Mode node.

cat <<EOF | kubectl apply -f -

apiVersion: apps/v1

kind: Deployment

metadata:

name: inflate

spec:

replicas: 1

selector:

matchLabels:

app: inflate

template:

metadata:

labels:

app: inflate

spec:

terminationGracePeriodSeconds: 0

nodeSelector:

eks.amazonaws.com/compute-type: auto

securityContext:

runAsUser: 1000

runAsGroup: 3000

fsGroup: 2000

containers:

- name: inflate

image: public.ecr.aws/eks-distro/kubernetes/pause:3.7

resources:

requests:

cpu: 1

securityContext:

allowPrivilegeEscalation: false

EOF

# Step 3: Watch Kubernetes Events

kubectl get events -w --sort-by '.lastTimestamp'

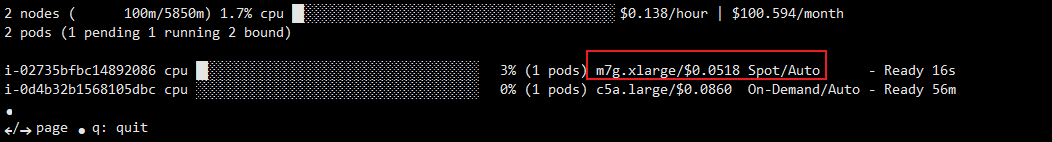

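kubectl get nodes

- Check node information as the number of pods grows

- When pods increase, the node is replaced by one with a larger spec and the pods are placed on it (c5a.large → c5a.2xlarge)

- The "kube-ops-view" pod that was already running is rescheduled onto the new node

- When pods decrease, the node is replaced by one with a smaller spec and the pods are placed on it (c5a.2xlarge → c5a.large)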
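
Before running the scale commands, it can help to estimate roughly how many nodes a given replica count needs. The sketch below computes a lower bound for identical pods on identical nodes, honoring the 110-pods-per-node limit mentioned earlier. The function `min_nodes` and the allocatable CPU figure are hypothetical; Karpenter chooses among many instance sizes, so the real node count and types will differ.

```python
import math

MAX_PODS_PER_NODE = 110  # per-node pod limit from the NodeClass note in this post


def min_nodes(replicas: int, cpu_request_m: int, allocatable_cpu_m: int) -> int:
    """Lower bound on node count for identical pods on identical nodes.

    CPU values are in millicores. allocatable_cpu_m is a hypothetical figure
    supplied by the caller, not a value read from the cluster.
    """
    if replicas < 0 or cpu_request_m <= 0:
        raise ValueError("replicas must be >= 0 and cpu_request_m must be > 0")
    pods_per_node = min(allocatable_cpu_m // cpu_request_m, MAX_PODS_PER_NODE)
    if pods_per_node == 0:
        raise ValueError("one pod does not fit on a node of this size")
    return math.ceil(replicas / pods_per_node)


if __name__ == "__main__":
    # 7910m is an assumed allocatable CPU for an 8-vCPU node, for illustration only.
    for replicas in (100, 200, 50):
        print(replicas, "replicas ->", min_nodes(replicas, 1000, 7910), "nodes")
```

The inflate pods request 1 CPU each, so CPU rather than the pod-count limit is the binding constraint in this lab.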

# Monitoring

eks-node-viewer --node-sort=eks-node-viewer/node-cpu-usage=dsc --extra-labels eks-node-viewer/node-age

watch -d kubectl get node,pod -A

#

kubectl scale deployment inflate --replicas 100 && kubectl get events -w --sort-by '.lastTimestamp'

#

kubectl scale deployment inflate --replicas 200 && kubectl get events -w --sort-by '.lastTimestamp'

#

kubectl scale deployment inflate --replicas 50 && kubectl get events -w --sort-by '.lastTimestamp'

# Delete after finishing the lab

kubectl delete deployment inflate && kubectl get events -w --sort-by '.lastTimestamp'

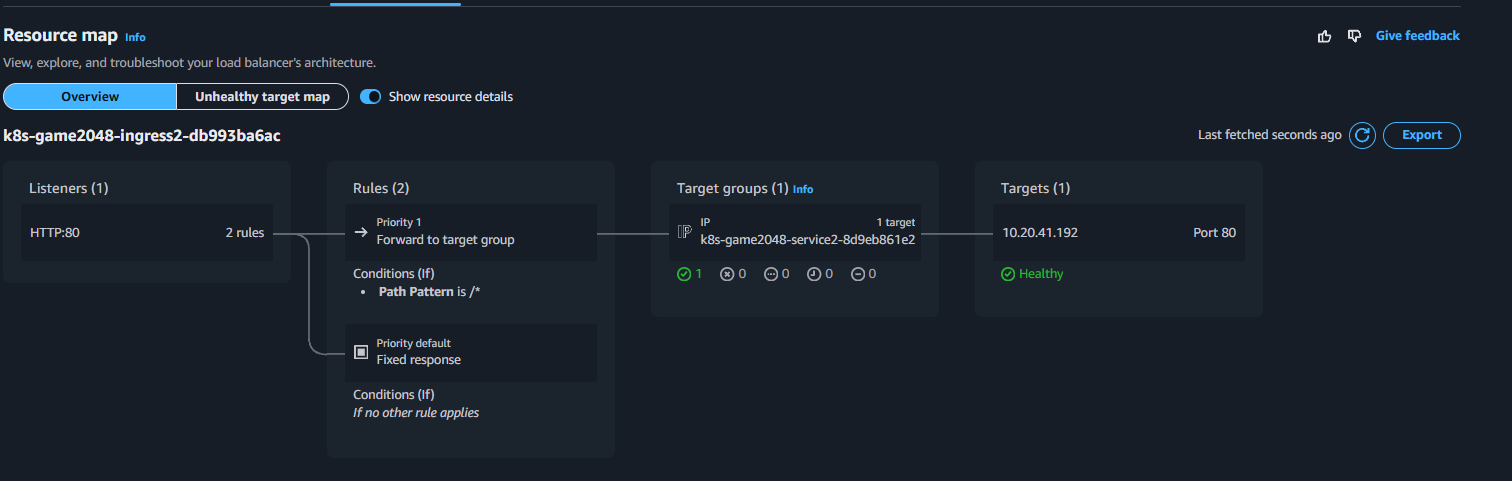

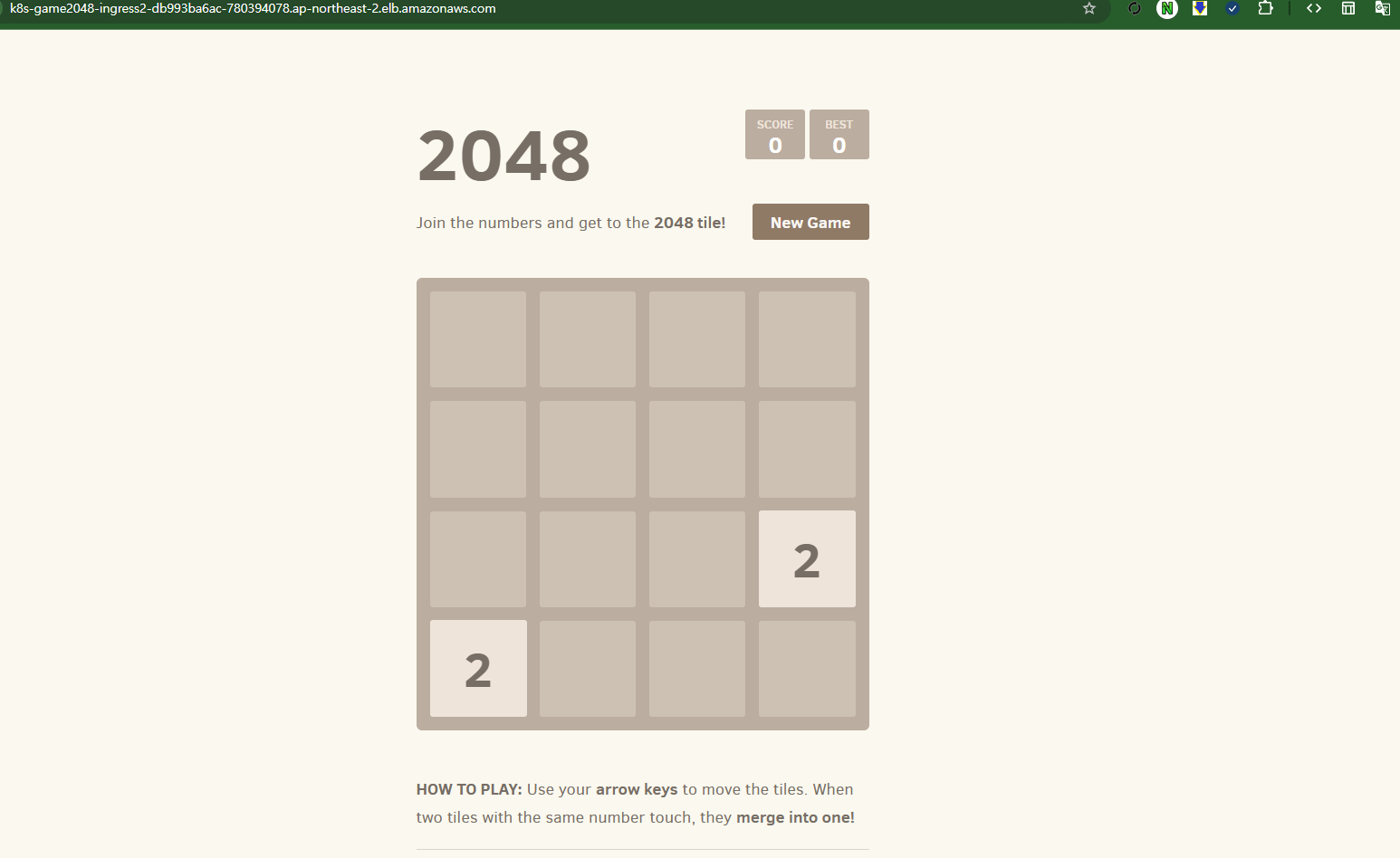

[Networking] Deploy a Graviton workload (2048 game) with ingress (ALB): using a custom NodeClass/NodePool

# Create a custom node pool: note that the label keys in a custom NodePool differ from those of Karpenter!

## Before (karpenter.k8s.aws/instance-family) → after (eks.amazonaws.com/instance-family)

ls ../nodepools

cat ../nodepools/graviton-nodepool.yaml

kubectl apply -f ../nodepools/graviton-nodepool.yaml

---

apiVersion: eks.amazonaws.com/v1

kind: NodeClass

metadata:

name: graviton-nodeclass

spec:

role: automode-cluster-eks-auto-20250314121820950800000003

subnetSelectorTerms:

- tags:

karpenter.sh/discovery: "automode-demo"

securityGroupSelectorTerms:

- tags:

kubernetes.io/cluster/automode-cluster: owned

tags:

karpenter.sh/discovery: "automode-demo"

---

apiVersion: karpenter.sh/v1

kind: NodePool

metadata:

name: graviton-nodepool

spec:

template:

spec:

nodeClassRef:

group: eks.amazonaws.com

kind: NodeClass

name: graviton-nodeclass

requirements:

- key: "eks.amazonaws.com/instance-category"

operator: In

values: ["c", "m", "r"]

- key: "eks.amazonaws.com/instance-cpu"

operator: In

values: ["4", "8", "16", "32"]

- key: "kubernetes.io/arch"

operator: In

values: ["arm64"]

taints:

- key: "arm64"

value: "true"

effect: "NoSchedule" # Prevents non-ARM64 pods from scheduling

limits:

cpu: 1000

disruption:

consolidationPolicy: WhenEmpty

consolidateAfter: 30s

#

kubectl get NodeClass

NAME ROLE READY AGE

default automode-cluster-eks-auto-20250314121820950800000003 True 64m

graviton-nodeclass automode-cluster-eks-auto-20250314121820950800000003 True 3m4s

kubectl get NodePool

NAME NODECLASS NODES READY AGE

general-purpose default 0 True 64m

graviton-nodepool graviton-nodeclass 0 True 3m32s
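
When porting an existing Karpenter NodePool to Auto Mode, the key change noted above can be applied mechanically. The following is a minimal sketch that rewrites the `karpenter.k8s.aws/` prefix to `eks.amazonaws.com/` in a list of requirements. The helper `to_auto_mode_keys` is hypothetical, and whether every Karpenter label has an Auto Mode counterpart is an assumption that should be checked against the EKS documentation.

```python
import copy

KARPENTER_PREFIX = "karpenter.k8s.aws/"
AUTO_MODE_PREFIX = "eks.amazonaws.com/"


def to_auto_mode_keys(requirements: list) -> list:
    """Return a copy of NodePool requirements with Karpenter label keys renamed.

    Keys without the Karpenter prefix (for example kubernetes.io/arch) are kept.
    """
    converted = copy.deepcopy(requirements)
    for req in converted:
        key = req.get("key", "")
        if key.startswith(KARPENTER_PREFIX):
            req["key"] = AUTO_MODE_PREFIX + key[len(KARPENTER_PREFIX):]
    return converted


if __name__ == "__main__":
    old = [
        {"key": "karpenter.k8s.aws/instance-family", "operator": "In", "values": ["c6g"]},
        {"key": "kubernetes.io/arch", "operator": "In", "values": ["arm64"]},
    ]
    for req in to_auto_mode_keys(old):
        print(req["key"])
```

The input list is left untouched, so the original and converted requirements can be compared side by side before applying anything to the cluster.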

#

ls ../examples/graviton

cat ../examples/graviton/game-2048.yaml

...

resources:

requests:

cpu: "100m"

memory: "128Mi"

limits:

cpu: "200m"

memory: "256Mi"

automountServiceAccountToken: false

tolerations:

- key: "arm64"

value: "true"

effect: "NoSchedule"

nodeSelector:

kubernetes.io/arch: arm64

...

kubectl apply -f ../examples/graviton/game-2048.yaml
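
The Graviton NodePool taints its nodes with `arm64=true:NoSchedule`, and the 2048 manifest carries a matching toleration. As a simplified sketch of how that matching works, the function below follows the usual Kubernetes rules (operator defaults to `Equal`, an empty effect matches any effect, an empty key requires `Exists`). The function `tolerates` is written for this post and is not part of any Kubernetes client library.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if a single toleration matches a single taint."""
    operator = toleration.get("operator", "Equal")
    # An empty effect on the toleration matches every taint effect.
    if toleration.get("effect") and toleration["effect"] != taint.get("effect"):
        return False
    key = toleration.get("key")
    if not key:
        # An empty key is only meaningful with Exists and matches all taints.
        return operator == "Exists"
    if key != taint.get("key"):
        return False
    if operator == "Exists":
        return True
    return toleration.get("value", "") == taint.get("value", "")


def can_schedule(tolerations: list, taints: list) -> bool:
    """Every taint must be tolerated by at least one toleration."""
    return all(any(tolerates(t, taint) for t in tolerations) for taint in taints)


if __name__ == "__main__":
    node_taints = [{"key": "arm64", "value": "true", "effect": "NoSchedule"}]
    game_2048 = [{"key": "arm64", "value": "true", "effect": "NoSchedule"}]
    print(can_schedule(game_2048, node_taints))  # pod with the toleration
    print(can_schedule([], node_taints))         # pod without tolerations
```

This is why pods without the toleration, such as kube-ops-view, stay off the Graviton nodes even when those nodes have spare capacity.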

# c6g.xlarge: 4 vCPU, 8 GiB RAM > a Spot instance was selected!

kubectl get nodeclaims

NAME TYPE CAPACITY ZONE NODE READY AGE

graviton-nodepool-ngp42 c6g.xlarge spot ap-northeast-2b i-0b7ca5072ebf3c969 True 9m48s

kubectl get nodeclaims -o yaml

...

spec:

expireAfter: 336h

...

kubectl get cninodes.eks.amazonaws.com

kubectl get cninodes.eks.amazonaws.com -o yaml

eks-node-viewer --resources cpu,memory

kubectl get node -owide

kubectl describe node

...

Taints: arm64=true:NoSchedule

...

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

MemoryPressure False Fri, 14 Mar 2025 22:53:54 +0900 Fri, 14 Mar 2025 22:37:35 +0900 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Fri, 14 Mar 2025 22:53:54 +0900 Fri, 14 Mar 2025 22:37:35 +0900 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Fri, 14 Mar 2025 22:53:54 +0900 Fri, 14 Mar 2025 22:37:35 +0900 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Fri, 14 Mar 2025 22:53:54 +0900 Fri, 14 Mar 2025 22:37:35 +0900 KubeletReady kubelet is posting ready status

KernelReady True Fri, 14 Mar 2025 22:57:39 +0900 Fri, 14 Mar 2025 22:37:39 +0900 KernelIsReady Monitoring for the Kernel system is active

ContainerRuntimeReady True Fri, 14 Mar 2025 22:57:39 +0900 Fri, 14 Mar 2025 22:37:39 +0900 ContainerRuntimeIsReady Monitoring for the ContainerRuntime system is active

StorageReady True Fri, 14 Mar 2025 22:57:39 +0900 Fri, 14 Mar 2025 22:37:39 +0900 DiskIsReady Monitoring for the Disk system is active

NetworkingReady True Fri, 14 Mar 2025 22:57:39 +0900 Fri, 14 Mar 2025 22:37:39 +0900 NetworkingIsReady Monitoring for the Networking system is active

...

System Info:

Machine ID: ec272ed9293b6501bd9f665eed7e1627

System UUID: ec272ed9-293b-6501-bd9f-665eed7e1627

Boot ID: 97c24ba6-d319-4686-abf8-bb62c4f22888

Kernel Version: 6.1.129

OS Image: Bottlerocket (EKS Auto) 2025.3.9 (aws-k8s-1.31)

Operating System: linux

Architecture: arm64

Container Runtime Version: containerd://1.7.25+bottlerocket

Kubelet Version: v1.31.4-eks-0f56d01

Kube-Proxy Version: v1.31.4-eks-0f56d01

#

kubectl get deploy,pod -n game-2048 -owide

- Deploy the ingress: the ALB SG ID is already present as a source in the security group, so access should work without adding the rule below; add it only if access fails.

# Get security group IDs

ALB_SG=$(aws elbv2 describe-load-balancers \

--query 'LoadBalancers[?contains(DNSName, `game2048`)].SecurityGroups[0]' \

--output text)

EKS_SG=$(aws eks describe-cluster \

--name automode-cluster \

--query 'cluster.resourcesVpcConfig.clusterSecurityGroupId' \

--output text)

echo $ALB_SG $EKS_SG # First check the rules of these security groups in the management console

# Allow ALB to communicate with EKS cluster: when tearing down the lab, remove only the rule added to $EKS_SG beforehand.

aws ec2 authorize-security-group-ingress \

--group-id $EKS_SG \

--source-group $ALB_SG \

--protocol tcp \

--port 80

# Open the address below over HTTP!

kubectl get ingress ingress-2048 \

-o jsonpath='{.status.loadBalancer.ingress[0].hostname}' \

-n game-2048

k8s-game2048-ingress2-db993ba6ac-782663732.ap-northeast-2.elb.amazonaws.com

- Cleanup

# Remove application components

kubectl delete ingress -n game-2048 ingress-2048 # First remove only the rule added to $EKS_SG!!!

kubectl delete svc -n game-2048 service-2048

kubectl delete deploy -n game-2048 deployment-2048

# Remove the Graviton node pool only after the nodes it created have been deleted

kubectl delete -f ../nodepools/graviton-nodepool.yaml

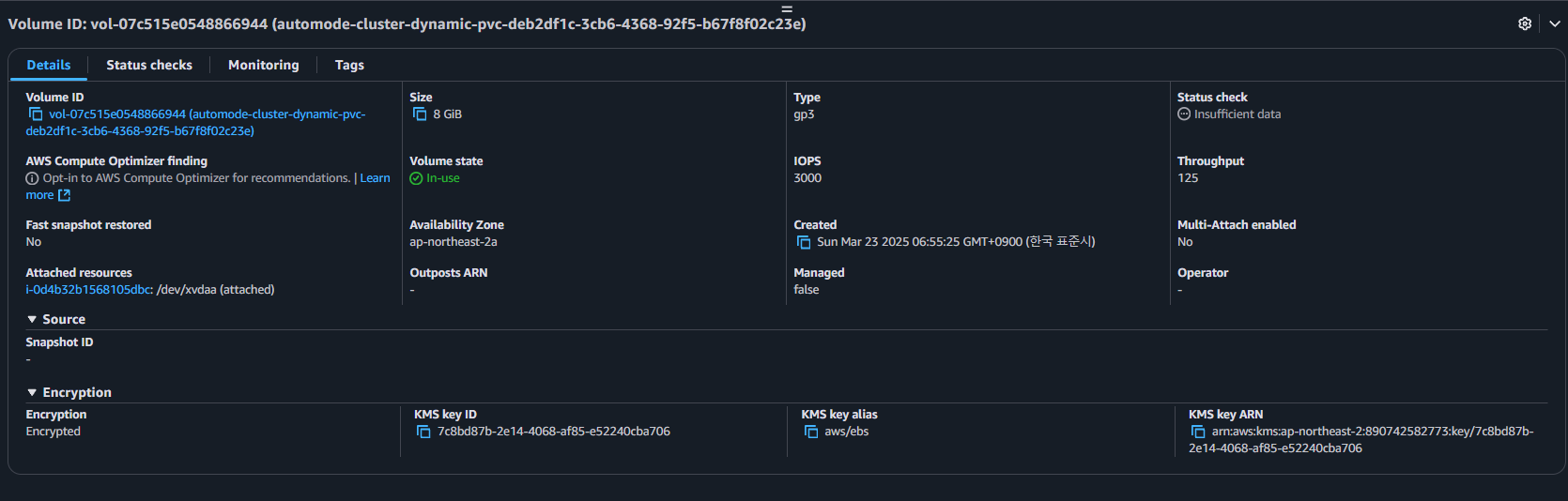

[Storage] Deploy a stateful workload with a PV (EBS): verifying the EBS controller

# Create the storage class

cat <<EOF | kubectl apply -f -

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: auto-ebs-sc

annotations:

storageclass.kubernetes.io/is-default-class: "true"

provisioner: ebs.csi.eks.amazonaws.com # Uses EKS Auto Mode

volumeBindingMode: WaitForFirstConsumer # Delays volume creation until a pod needs it

parameters:

type: gp3

encrypted: "true"

EOF

# Create the persistent volume claim

cat <<EOF | kubectl apply -f -

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: auto-ebs-claim

spec:

accessModes:

- ReadWriteOnce

storageClassName: auto-ebs-sc

resources:

requests:

storage: 8Gi

EOF

# Deploy the Application : The Deployment runs a container that writes timestamps to the persistent volume.

## Simple bash container that writes timestamps to a file

## Mounts the PVC at /data

## Requests 1 CPU core

## Uses node selector for EKS managed nodes

cat <<EOF | kubectl apply -f -

apiVersion: apps/v1

kind: Deployment

metadata:

name: inflate-stateful

spec:

replicas: 1

selector:

matchLabels:

app: inflate-stateful

template:

metadata:

labels:

app: inflate-stateful

spec:

terminationGracePeriodSeconds: 0

nodeSelector:

eks.amazonaws.com/compute-type: auto

containers:

- name: bash

image: public.ecr.aws/docker/library/bash:4.4

command: ["/usr/local/bin/bash"]

args: ["-c", "while true; do echo \$(date -u) >> /data/out.txt; sleep 60; done"]

resources:

requests:

cpu: "1"

volumeMounts:

- name: persistent-storage

mountPath: /data

volumes:

- name: persistent-storage

persistentVolumeClaim:

claimName: auto-ebs-claim

EOF

kubectl get events -w --sort-by '.lastTimestamp'

- Verify and clean up

# Verify the Setup

kubectl get pods -l app=inflate-stateful

kubectl get pvc auto-ebs-claim

# Check the EBS volume: confirm the EBS volume in the management console

## Get the PV name

PV_NAME=$(kubectl get pvc auto-ebs-claim -o jsonpath='{.spec.volumeName}')

## Describe the EBS volume

aws ec2 describe-volumes \

--filters Name=tag:CSIVolumeName,Values=${PV_NAME}

# Verify data is being written:

kubectl exec "$(kubectl get pods -l app=inflate-stateful \

-o=jsonpath='{.items[0].metadata.name}')" -- \

cat /data/out.txt

kubectl exec "$(kubectl get pods -l app=inflate-stateful \

-o=jsonpath='{.items[0].metadata.name}')" -- \

tail -f /data/out.txt

# Cleanup - Delete all resources in one command

kubectl delete deployment/inflate-stateful pvc/auto-ebs-claim storageclass/auto-ebs-sc

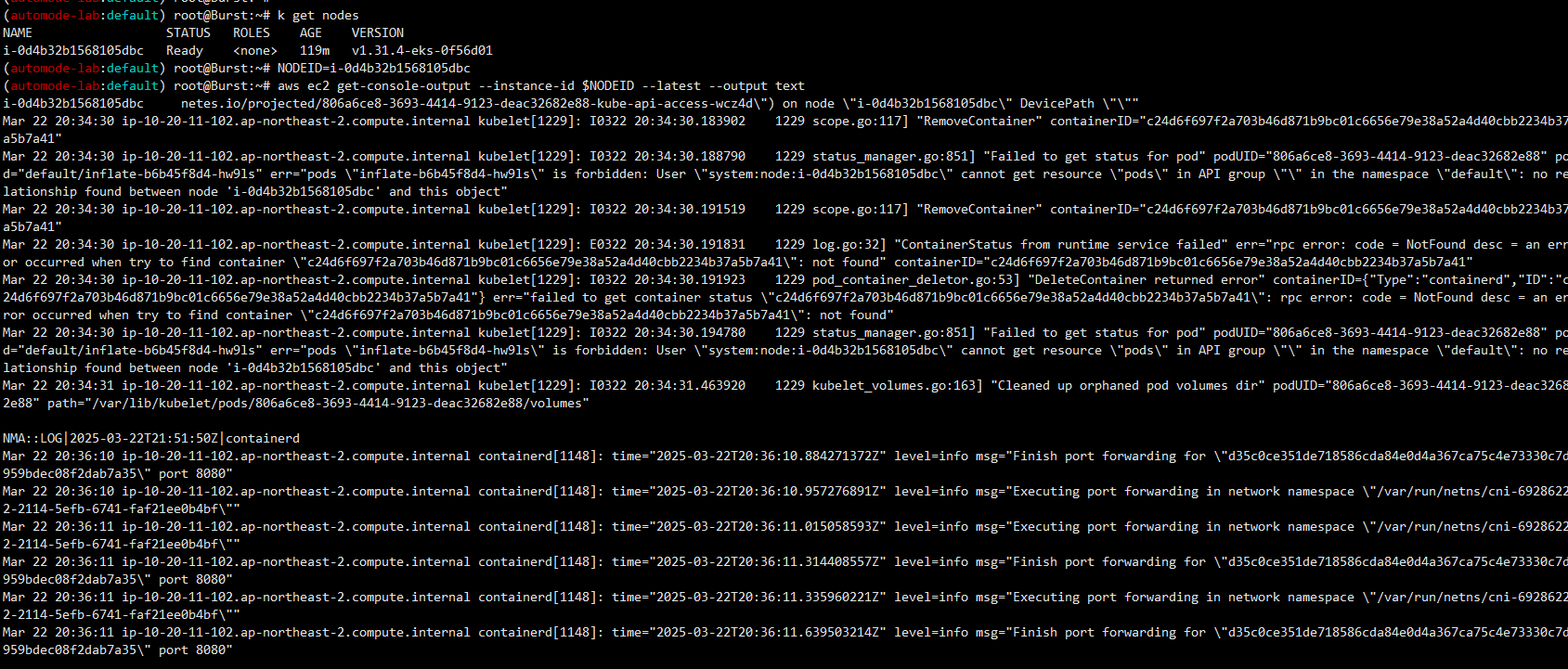

How to inspect node information

[Operations] Viewing node console output

- Check node logs through console-output

# Find the node instance ID

kubectl get node

NODEID=<your own node ID>

NODEID=i-0b12733f9b75cd835

# Use the EC2 instance ID to retrieve the console output.

aws ec2 get-console-output --instance-id $NODEID --latest --output text

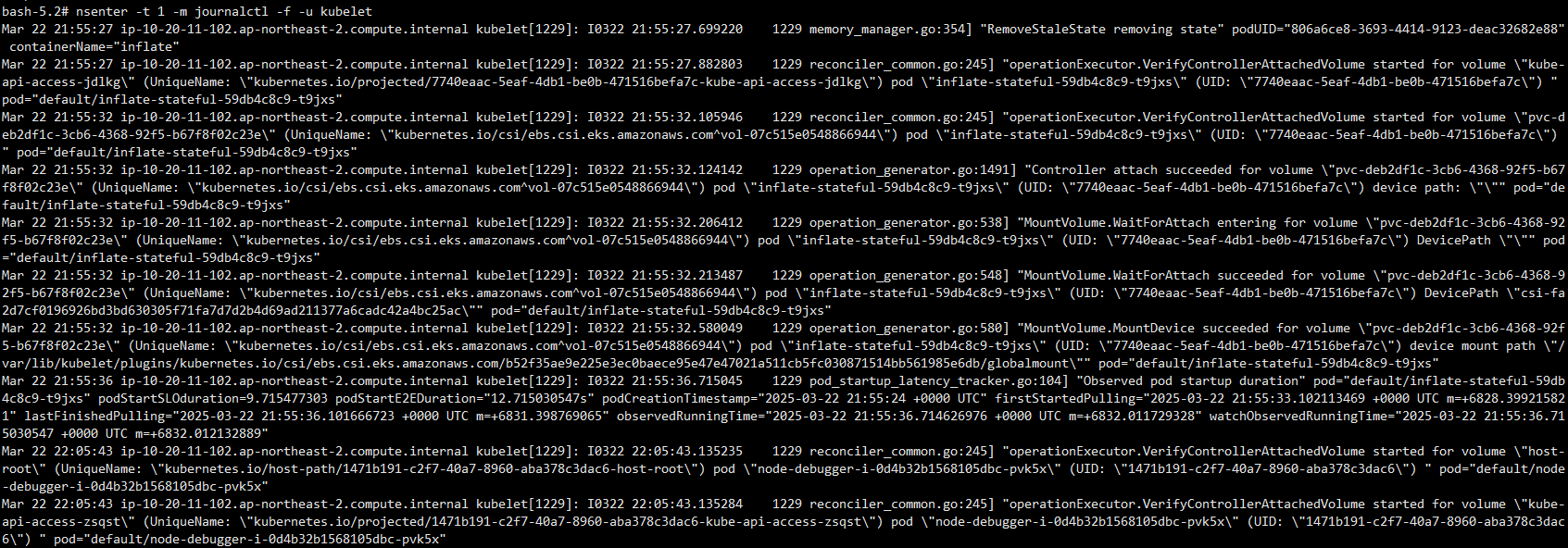

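[Operations] Streaming logs of a specific process on a node

- Nodes cannot be accessed directly, so deploy a debug container to inspect the node

- In other words, you work from a pod's shell

# Find the node instance ID

kubectl get node

NODEID=<your own node ID>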

NODEID=i-0b12733f9b75cd835

# Run the debug container. The command below targets the node by its instance ID ($NODEID),

# allocates a tty and stdin for interactive use, and uses the sysadmin profile (--profile=sysadmin).

## Create an interactive debugging session on a node and immediately attach to it.

## The container will run in the host namespaces and the host's filesystem will be mounted at /host

## --profile='legacy': Options are "legacy", "general", "baseline", "netadmin", "restricted" or "sysadmin"

kubectl debug -h

kubectl debug node/$NODEID -it --profile=sysadmin --image=public.ecr.aws/amazonlinux/amazonlinux:2023

-------------------------------------------------

bash-5.2# whoami

# From the shell you can now install util-linux-core, which provides the nsenter command.

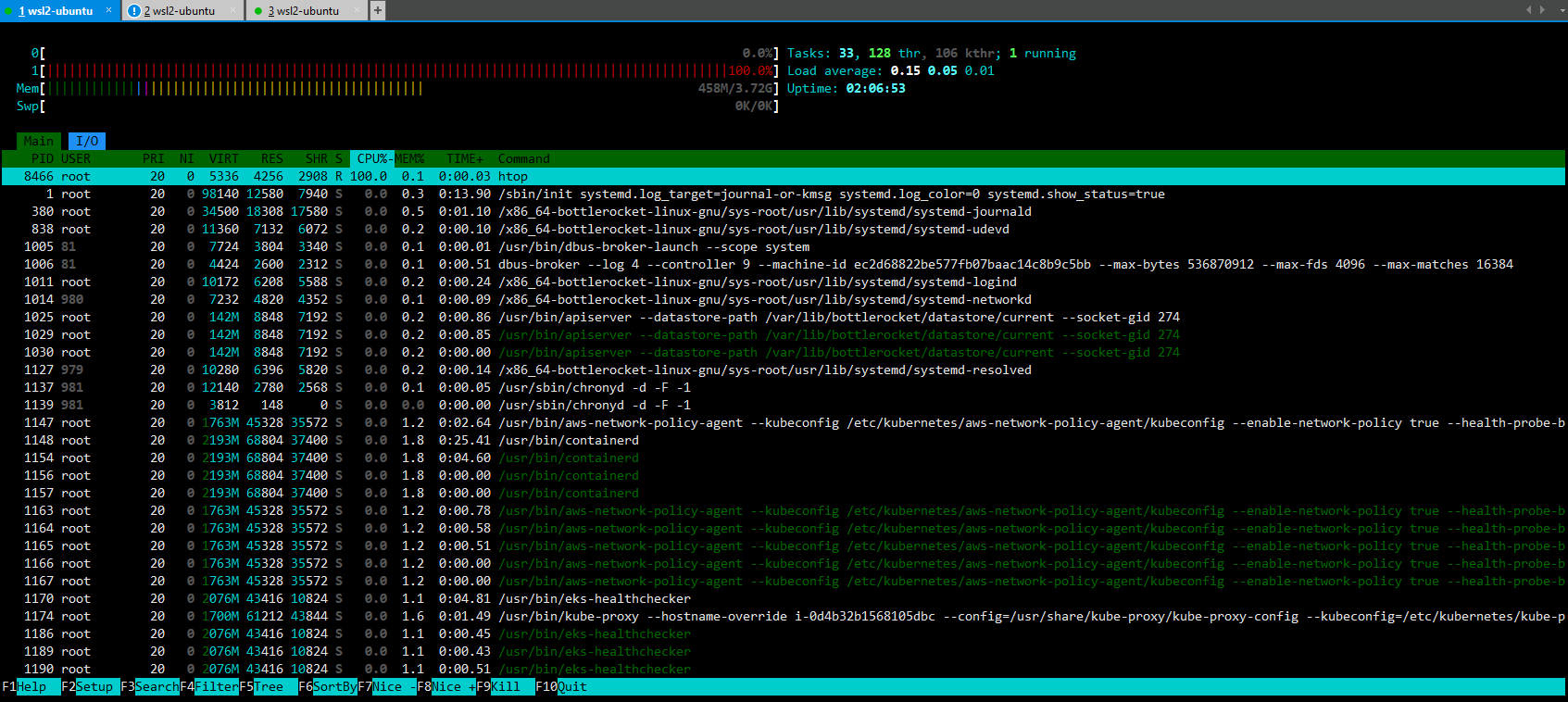

# Use nsenter to enter the mount namespace of PID 1 (init) on the host and run journalctl to stream the kubelet logs:

yum install -y util-linux-core htop

nsenter -t 1 -m journalctl -f -u kubelet

htop # Check the CPU and memory size of the node (instance)

# Inspect information

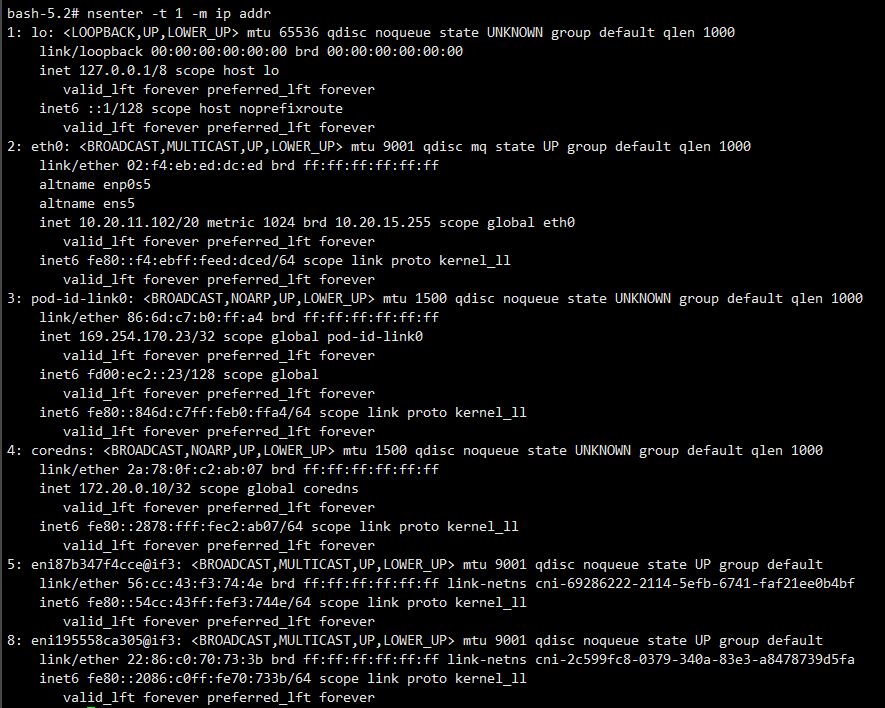

nsenter -t 1 -m ip addr

nsenter -t 1 -m ps -ef

nsenter -t 1 -m ls -l /proc

nsenter -t 1 -m df -hT

nsenter -t 1 -m ctr

nsenter -t 1 -m ctr ns ls

nsenter -t 1 -m ctr -n k8s.io containers ls

CONTAINER IMAGE RUNTIME

09e8f837f54d66305f3994afeee44d800971d7a921c06720382948dbdd9c6fab localhost/kubernetes/pause:0.1.0 io.containerd.runc.v2

2e1af1a9ff996505c0de0ee6b55bd8b3fefdaf6579fd6af46e978ad6e2096bae public.ecr.aws/amazonlinux/amazonlinux:2023 io.containerd.runc.v2

ebd4b8805ed0338a0bc6a54625c51f3a4c202641f0d492409d974871db49c476 localhost/kubernetes/pause:0.1.0 io.containerd.runc.v2

f4924417248c24b7eaac2498a11c912b575fc03cef1b8e55cae66772bdb36af5 docker.io/hjacobs/kube-ops-view:20.4.0 io.containerd.runc.v2

...

# (Note) For security, the Amazon Linux container image does not install many binaries by default.

# Use the yum whatprovides command to identify which package must be installed to provide a given binary.

yum whatprovides ps

-------------------------------------------------

[Security] Attempting to obtain host information by breaking out (?) of a pod that shares the host namespaces

- Deploy a pod with host privileges

kubectl apply -f - <<EOF

apiVersion: v1

kind: Pod

metadata:

name: root-shell

namespace: kube-system

spec:

containers:

- command:

- /bin/cat

image: alpine:3

name: root-shell

securityContext:

privileged: true

tty: true

stdin: true

volumeMounts:

- mountPath: /host

name: hostroot

hostNetwork: true

hostPID: true

hostIPC: true

tolerations:

- effect: NoSchedule

operator: Exists

- effect: NoExecute

operator: Exists

volumes:

- hostPath:

path: /

name: hostroot

EOF

# Check the pod: the pod and node IPs are identical (hostNetwork: true)

kubectl get pod -n kube-system root-shell

kubectl get node,pod -A -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

node/i-0b12733f9b75cd835 Ready <none> 62m v1.31.4-eks-0f56d01 10.20.1.198 <none> Bottlerocket (EKS Auto) 2025.3.9 (aws-k8s-1.31) 6.1.129 containerd://1.7.25+bottlerocket

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system pod/kube-ops-view-657dbc6cd8-2lx4d 1/1 Running 0 62m 10.20.12.0 i-0b12733f9b75cd835 <none> <none>

kube-system pod/root-shell 1/1 Running 0 5m29s 10.20.1.198 i-0b12733f9b75cd835 <none> <none>

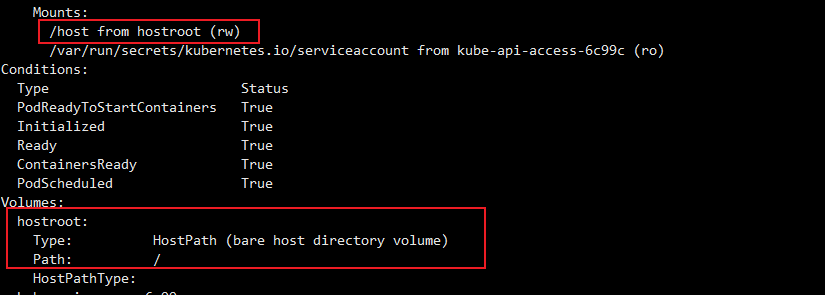

# hostPath: confirm that / is mounted read-write at /host in the pod

kubectl describe pod -n kube-system root-shell

...

Mounts:

/host from hostroot (rw)

...

Volumes:

hostroot:

Type: HostPath (bare host directory volume)

Path: /

HostPathType:

...

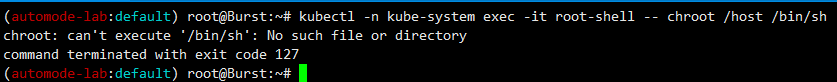

# Takeover attempt!

kubectl -n kube-system exec -it root-shell -- chroot /host /bin/sh

- Connecting straight into the host is not possible

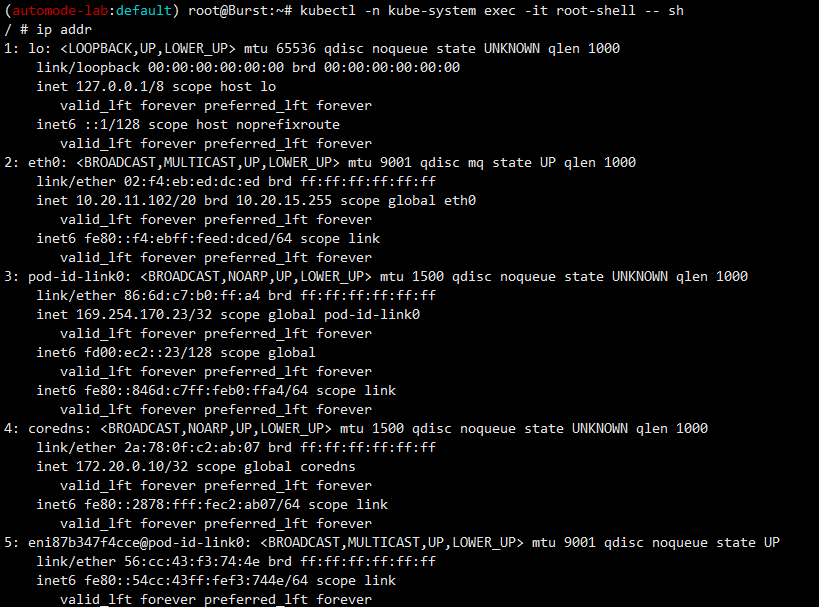

- Enter the shell inside the pod and inspect from there

- More convenient than the debug container: ordinary Linux commands work right away

kubectl -n kube-system exec -it root-shell -- sh

------------------------------------------------

whoami

pwd

# Network information: identical to the host (hostNetwork: true)

ip link

ip addr # eth0, pod-id-link0, coredns

netstat -tnlp

netstat -unlp

# Process information: identical to the host (hostPID: true)

top -d 1

ps aux

ps -ef

pstree

pstree -p

apk add htop

htop

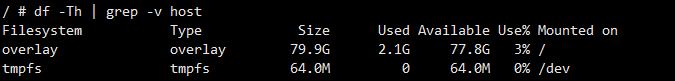

# Mount information: data EBS volume (80 GiB)

df -hT | grep -v host

Filesystem Type Size Used Available Use% Mounted on

overlay overlay 79.9G 1.9G 78.0G 2% /

...

df -hT | grep host

/dev/root ext4 2.1G 1.1G 901.5M 55% /host

...

cat /etc/hostname

ip-10-20-1-198.ap-northeast-2.compute.internal

## (hostPath: confirm that / is mounted read-write at /host in the pod)

ls -l /host

cat /host/usr/share/kube-proxy/kube-proxy-config

cat /host/etc/coredns/Corefile

cat /host/etc/kubernetes/ipamd/kubeconfig

cat /host/etc/kubernetes/eks-node-monitoring-agent/kubeconfig

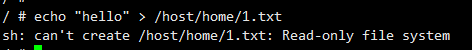

# Try to create a file on the root volume (the hostPath is mounted rw)

echo "hello" > /host/home/1.txt

sh: can't create /host/home/1.txt: Read-only file system

# Takeover attempt!

which apiclient

which sh

/bin/sh

chroot /host /bin/sh

chroot: can't execute '/bin/sh': No such file or directory

# Inspect information

nsenter -t 1 -m journalctl -f -u kubelet

nsenter -t 1 -m ip addr

nsenter -t 1 -m ps -ef

nsenter -t 1 -m ls -l /proc

nsenter -t 1 -m df -hT

nsenter -t 1 -m ctr

nsenter -t 1 -m ctr ns ls

nsenter -t 1 -m ctr -n k8s.io containers ls

- Cleanup

kubectl delete pod -n kube-system root-shell

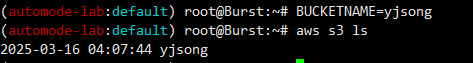

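[Operations] Collecting node logs to S3: Node monitoring agent

- Stores key node information and logs in an S3 bucket (using an S3 pre-signed URL)

- The S3 pre-signed URL is generated with the AWS API or SDK

- Create the bucket

# Create the bucket: the bucket name must be unique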

BUCKETNAME=gasida-aews-study

aws s3api create-bucket --bucket $BUCKETNAME --create-bucket-configuration LocationConstraint=ap-northeast-2 --region ap-northeast-2

aws s3 ls

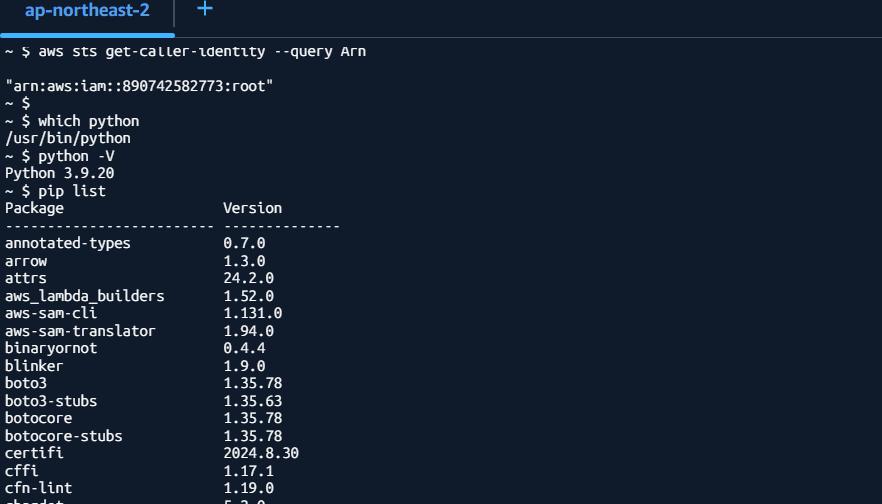

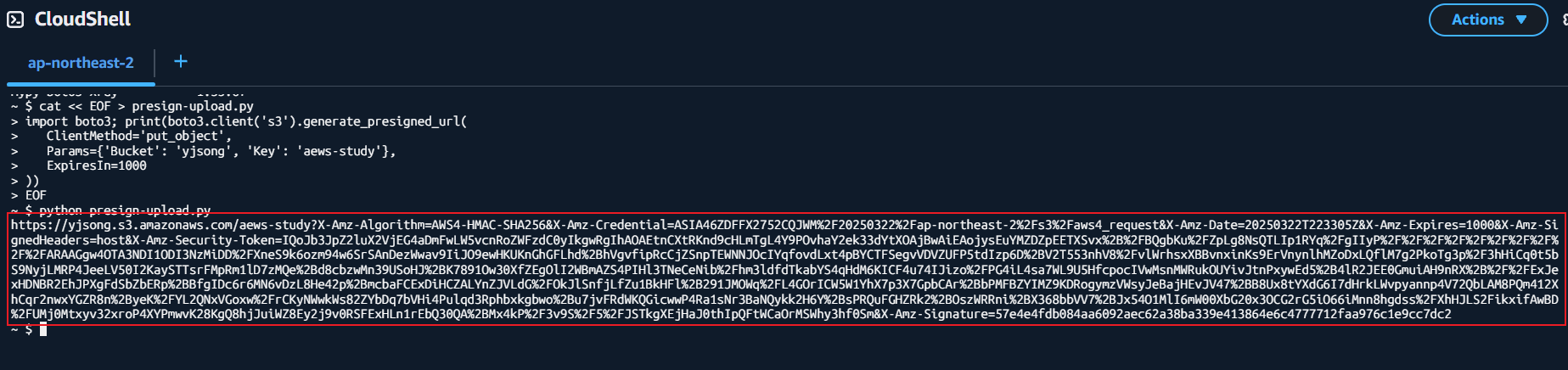

- Generate the S3 pre-signed URL

- Run in AWS CloudShell

- Params={'Bucket': 'gasida-aews-study', 'Key': 'awes-study'} → enter your bucket name and the object key to upload to

aws sts get-caller-identity --query Arn

which python

python -V

pip list

# Example presign-upload.py

import boto3; print(boto3.client('s3').generate_presigned_url(

ClientMethod='put_object',

Params={'Bucket': '<bucket-name>', 'Key': '<key>'},

ExpiresIn=1000

))

# Replace the bucket name and key below with your own

cat << EOF > presign-upload.py

import boto3; print(boto3.client('s3').generate_presigned_url(

ClientMethod='put_object',

Params={'Bucket': 'gasida-aews-study', 'Key': 'awes-study'},

ExpiresIn=1000

))

EOF

cat presign-upload.py

# Note down (copy) the pre-signed URL printed below

python presign-upload.py

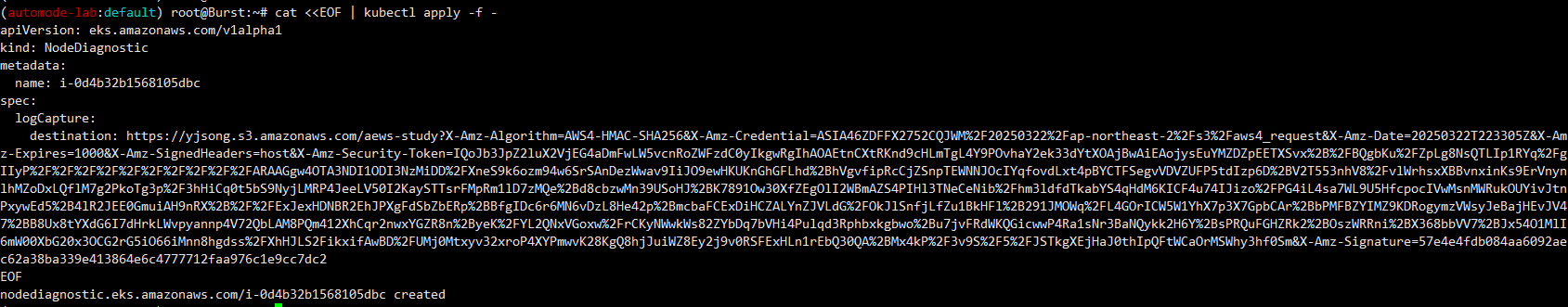
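
Because the script uses `ExpiresIn=1000`, the URL is only valid for about 17 minutes, and a NodeDiagnostic created after that cannot upload. As a small standard-library sketch, the helper below reads the `X-Amz-Date` and `X-Amz-Expires` query parameters of a SigV4 pre-signed URL and computes when it expires. The function names and `SAMPLE_URL` are hypothetical, and the sample URL is a shortened placeholder rather than a usable signature.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlsplit

# Placeholder URL with only the parameters this sketch reads; not a valid signature.
SAMPLE_URL = (
    "https://example-bucket.s3.amazonaws.com/logs.tar.gz"
    "?X-Amz-Date=20250316T141832Z&X-Amz-Expires=1000&X-Amz-Signature=abc"
)


def presigned_url_expiry(url: str) -> datetime:
    """Return the UTC time at which a SigV4 pre-signed URL stops being valid."""
    query = parse_qs(urlsplit(url).query)
    signed_at = datetime.strptime(
        query["X-Amz-Date"][0], "%Y%m%dT%H%M%SZ"
    ).replace(tzinfo=timezone.utc)
    return signed_at + timedelta(seconds=int(query["X-Amz-Expires"][0]))


def is_expired(url: str, now=None) -> bool:
    """Return True if the URL has already expired at the given (or current) time."""
    now = now or datetime.now(timezone.utc)
    return now >= presigned_url_expiry(url)


if __name__ == "__main__":
    print(presigned_url_expiry(SAMPLE_URL).isoformat())
    print("expired:", is_expired(SAMPLE_URL))
```

Running a check like this on the copied URL before applying the NodeDiagnostic manifest avoids a failed log capture caused by an expired link.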

- Create the NodeDiagnostic

#

cat <<EOF | kubectl apply -f -

apiVersion: eks.amazonaws.com/v1alpha1

kind: NodeDiagnostic

metadata:

name: i-0a1abba3d52c59da0

spec:

logCapture:

destination: https://gasida-aews-study.s3.amazonaws.com/2025-03-16/logs1.tar.gz?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=ASIA5ILF2FJI2ZTNQEGA%2F20250316%2Fap-northeast-2%2Fs3%2Faws4_request&X-Amz-Date=20250316T141832Z&X-Amz-Expires=1000&X-Amz-SignedHeaders=host&X-Amz-Security-Token=IQoJb3JpZ2luX2VjENb%2F%2F%2F%2F%2F%2F%2F%2F%2F%2FwEaDmFwLW5vcnRoZWFzdC0yIkYwRAIgFB5O2tYI%2BtLDbdcqM2XOdpPlex%2Bejbu7idKpA3EzljQCIH9nSq2HIpiLMlz%2BR08uWKU4jG8nMIx24EcoUr5O9yG7KpYDCC8QAhoMOTExMjgzNDY0Nzg1Igyd4uJrSWVA1qnT5Gwq8wI9QsXOjuMn%2BIqkk8aZN1jtzkveuTw1Yv%2BJVaDs3krxWZiDU5KjWrzy1MW1%2B7lHsCDKo9Of%2B%2BlvTTegVxzzQS%2FdUPaIVQI5uPjblX0jag8HwrhB%2BpBm8vks0MDrELZoMVuUvGK6g1mkoFoRJ14Ot2f8IVRUnZCoLTZoDHy5euuh5wVw2QnUPovk5whxmwieszR4r8FjBmwy%2BVnjzaWcMTQPApQX19gkd3Gw4DciuSRBHK0tx8%2BBo8O1IJVCSjEMxdauUIn7rZYH8JDRn3odDoezTx%2BVdc%2BJViOdYwouScSoYXi9RyaDAhmWO2Gu%2Fg3p7ojfPPRylfEOh4h252Hf1t6%2F7YY7P2pnaEhBndVBc8IzqXA2pKMCglXGoFIQu7e%2F8PkXr0jjTv7t5EvM7fAlCbnE5nOJcuDy%2BjBz3Gq7DGfdHPoCYXfPNlX61aMZslKKdcKd7hGlVgOPr3Tkt7TffON7UUJvsg7qNo35Qpebzc2w%2FuwwejDUnNu%2BBjq0AqBy%2Fk3O%2FNggSTAuRwdvfmlbarX1tVW66qWebKGXmSCgJFgZ%2FTIE0lSX%2BvZtNxGilZWwDCUXuOuFLqli2pWK008jVwOpq3jYJcAbmua250SWEy4WruK1Ecgk7w52HDCRHA%2BOq9aHxgyeAh3nCVlHL2QUYkwu9HvkR6%2B3f%2BlyREkZXMztxadYf9Blf%2FeR2O1Dj9XcwL6uwZEZzY1ZGXYr1Ju%2BdbwqdEydlkjvHiA5XmhsgpOmAApzaj%2FLi1Nu%2Fc3hKFte172%2BwerUYLIX2m5yUP2M3LLB316r2uG15wysAswTWiMvTRJjxfZisH%2BCPV9JD8TM4Ey%2FzQy1agNZ3XamdUX8GdoTuiYWQUzTbStOwr37pboXMY58S0Fhe65s3KGUNWJCUDLLG0NkOpBzA05ay%2FJW7ao0&X-Amz-Signature=3463ba2536c1e0de75dd4d4d8cac91165419b093c872945d80d8b25870200c06

EOF

#

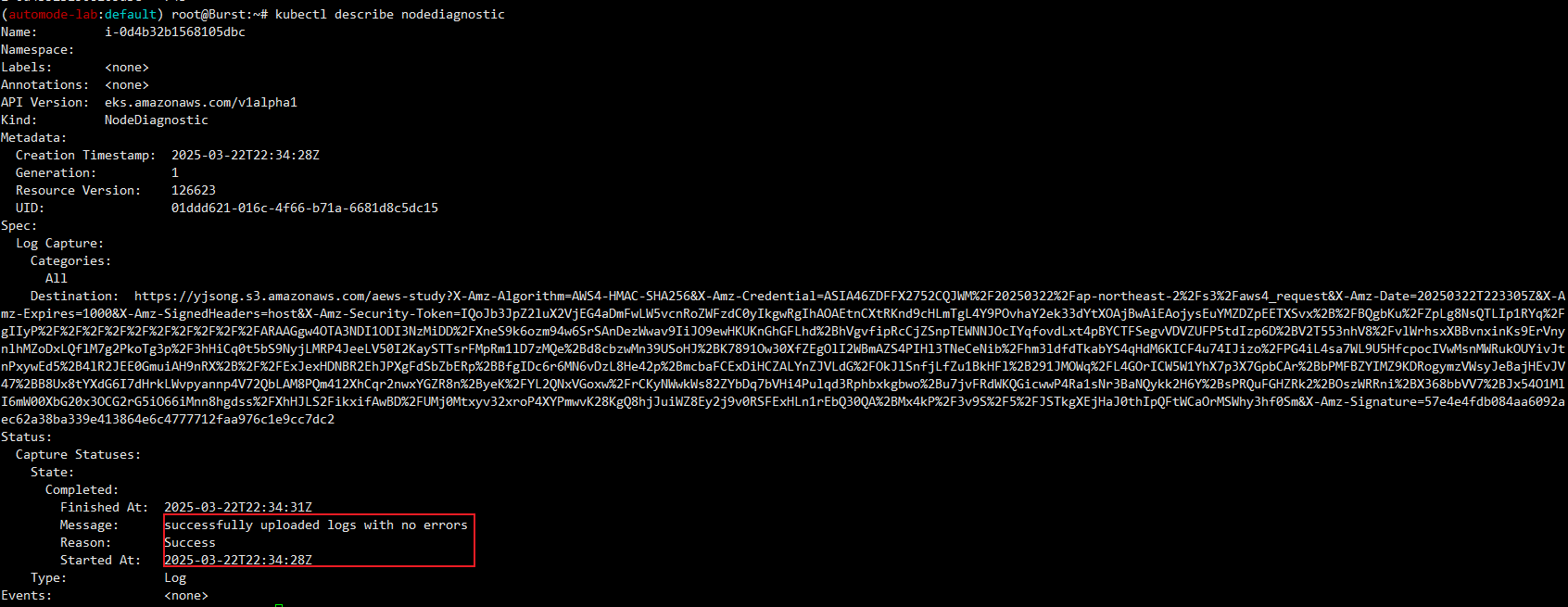

kubectl get nodediagnostic

kubectl describe nodediagnostic

#

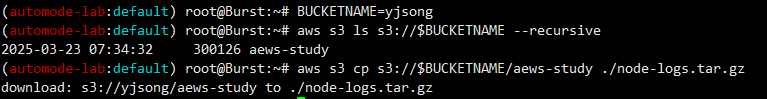

BUCKETNAME=yjsong

aws s3 ls s3://$BUCKETNAME --recursive

aws s3 cp s3://$BUCKETNAME/awes-study ./node-logs.tar.gz

tar -zxvf node-logs.tar.gz

# Directory layout (example)

% tree .

- Download the logs stored in the S3 bucket

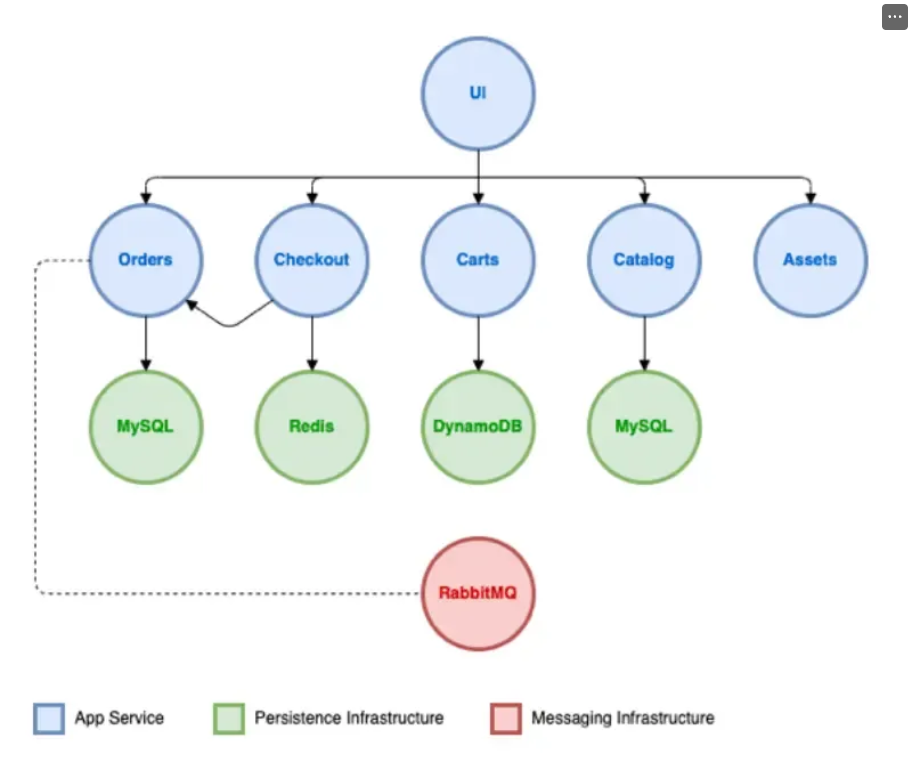

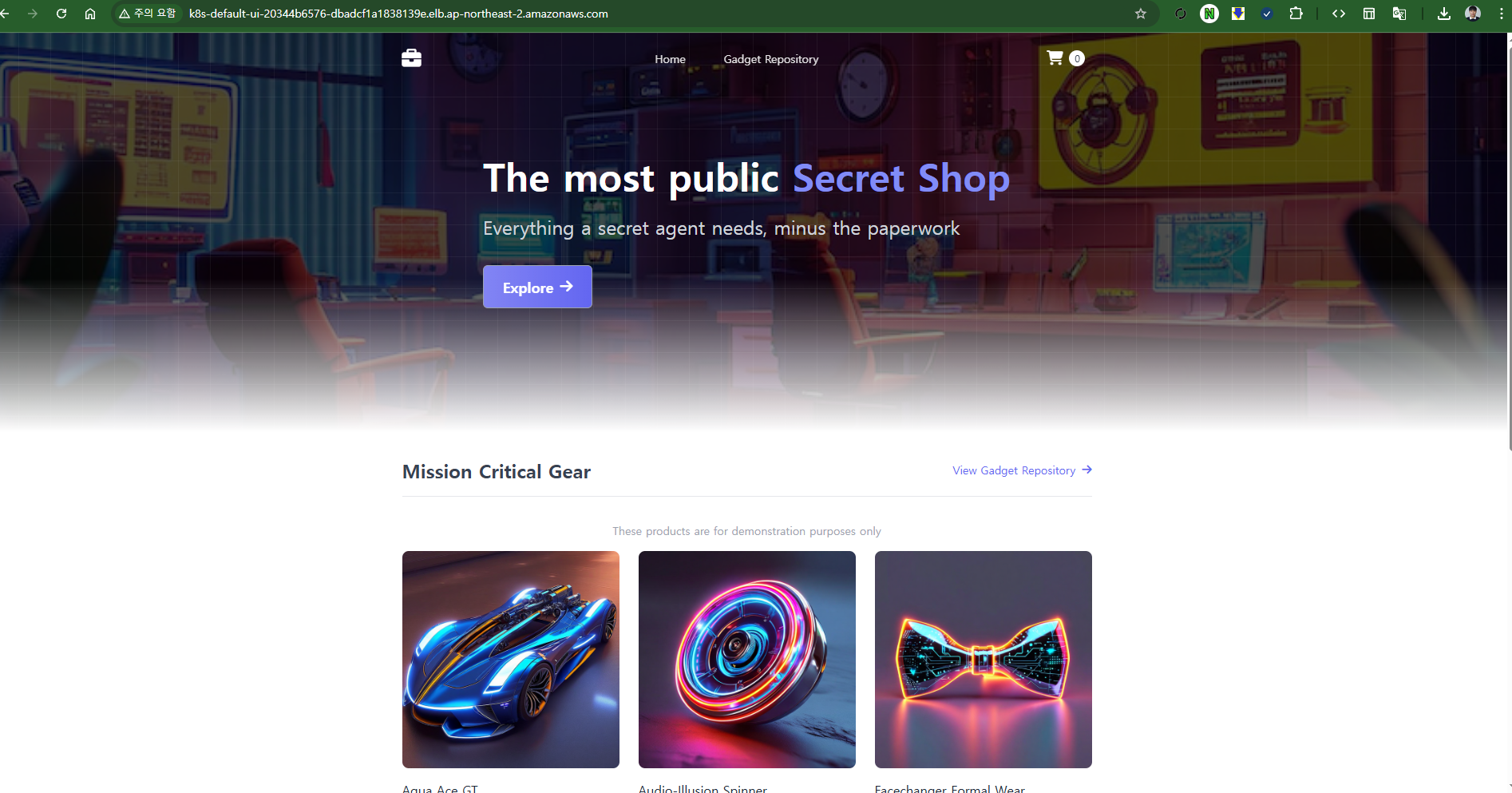

[Networking] Trying an AWS NLB with Service (LoadBalancer)

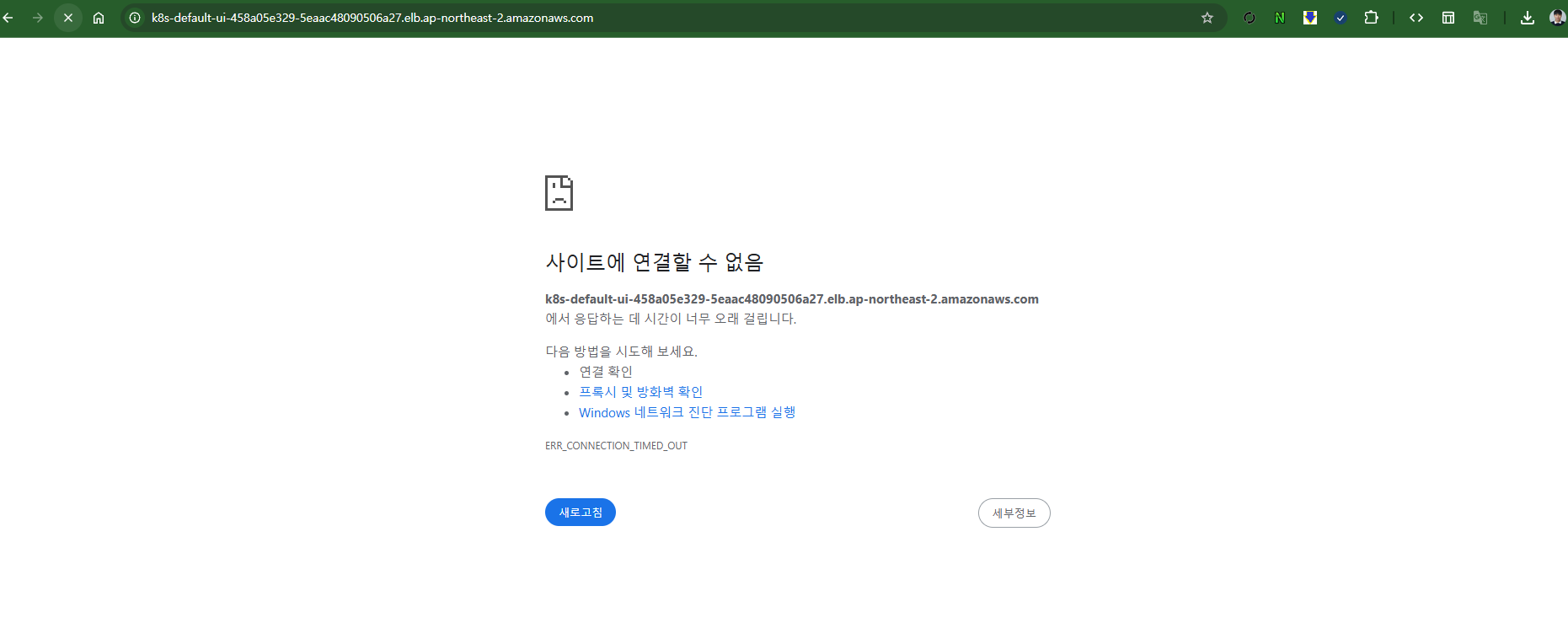

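- The NLB is deployed with the settings used for regular EKS, so it cannot be reached yet

# Deployment takes about 3 minutes: CrashLoopBackOff resolves on its own once the other pods become healthy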

kubectl apply -f https://github.com/aws-containers/retail-store-sample-app/releases/latest/download/kubernetes.yaml

kubectl get events -w --sort-by '.lastTimestamp'

watch -d kubectl get node,pod -owide

#

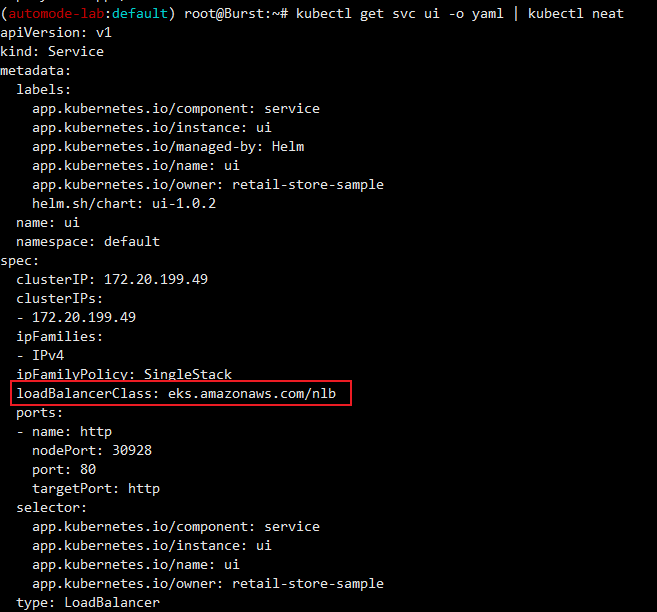

kubectl get svc ui -o yaml | kubectl neat

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: service

app.kubernetes.io/instance: ui

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/name: ui

app.kubernetes.io/owner: retail-store-sample

helm.sh/chart: ui-1.0.1

name: ui

namespace: default

spec:

clusterIP: 172.20.181.70

clusterIPs:

- 172.20.181.70

ipFamilies:

- IPv4

ipFamilyPolicy: SingleStack

loadBalancerClass: eks.amazonaws.com/nlb

ports:

- name: http

nodePort: 32223

port: 80

targetPort: http

selector:

app.kubernetes.io/component: service

app.kubernetes.io/instance: ui

app.kubernetes.io/name: ui

app.kubernetes.io/owner: retail-store-sample

type: LoadBalancer

#

kubectl get targetgroupbindings.eks.amazonaws.com

NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE

k8s-default-ui-c1e031d638 ui 80 ip 4m52s

kubectl get targetgroupbindings.eks.amazonaws.com -o yaml

...

# Open the EXTERNAL-IP below in a web browser

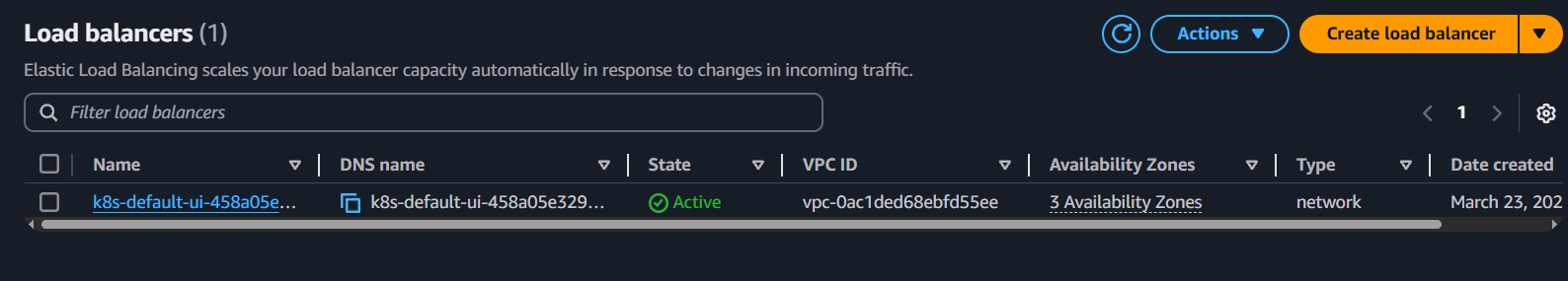

kubectl get svc,ep ui

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ui LoadBalancer 172.20.199.49 k8s-default-ui-458a05e329-5eaac48090506a27.elb.ap-northeast-2.amazonaws.com 80:30928/TCP 3m53s

NAME ENDPOINTS AGE

endpoints/ui 10.20.10.250:8080 3m53s

- The NLB was created successfully, but it is not reachable

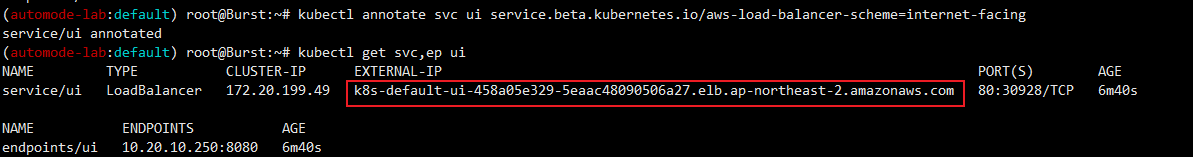

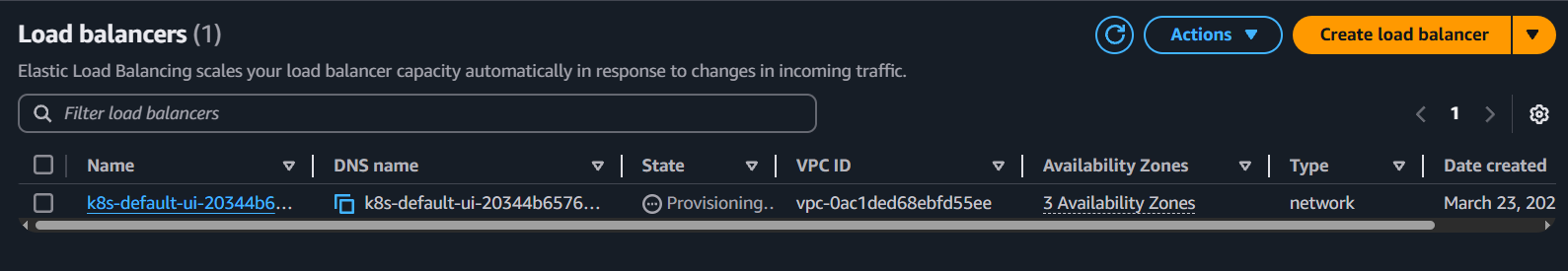

- Apply the Auto Mode rule

- Confirm that a new NLB is created
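
Instead of annotating the Service after the fact, the scheme can be set when the manifest is written. The sketch below builds a Service definition as a Python dict with the `loadBalancerClass` and scheme annotation used in this section and prints it as JSON, which `kubectl apply -f -` also accepts. The function `nlb_service` and its parameters are hypothetical, and the manifest is trimmed to the fields discussed here.

```python
import json

SCHEME_ANNOTATION = "service.beta.kubernetes.io/aws-load-balancer-scheme"


def nlb_service(name: str, selector: dict, port: int = 80,
                target_port="http", internet_facing: bool = True) -> dict:
    """Build a minimal LoadBalancer Service manifest for an Auto Mode NLB."""
    scheme = "internet-facing" if internet_facing else "internal"
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "annotations": {SCHEME_ANNOTATION: scheme}},
        "spec": {
            "type": "LoadBalancer",
            "loadBalancerClass": "eks.amazonaws.com/nlb",  # value shown in the ui Service
            "selector": selector,
            "ports": [{"name": "http", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    manifest = nlb_service("ui", {"app.kubernetes.io/name": "ui"})
    print(json.dumps(manifest, indent=2))
```

Generating the manifest this way keeps the scheme choice explicit, so an internal-only NLB is a deliberate setting rather than a default discovered after deployment.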

# Not reachable => after setting the annotation below, a new NLB is created and access works once it becomes healthy!

kubectl annotate svc ui service.beta.kubernetes.io/aws-load-balancer-scheme=internet-facing

# Check the new NLB

kubectl get svc,ep ui

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ui LoadBalancer 172.20.181.70 k8s-default-ui-d63e862f19-b318413dc179fd4e.elb.ap-northeast-2.amazonaws.com 80:32223/TCP 9m9s

NAME ENDPOINTS AGE

endpoints/ui 10.20.12.10:8080 9m9s

#

kubectl get svc ui -o yaml | kubectl neat

kubectl get targetgroupbindings.eks.amazonaws.com

kubectl get targetgroupbindings.eks.amazonaws.com -o yaml
