
Blue/Green Upgrade

- Deploy the application to the clusters and use AWS Route 53 weighted routing to shift traffic gradually from Blue to Green

- Generate an SSH key pair and store the private key in AWS Secrets Manager

# Generate a public/private key pair on the local server

ssh-keygen -t rsa

# Register the private key in AWS Secrets Manager

aws secretsmanager create-secret \
  --name github-blueprint-ssh-key \
  --secret-string "$(cat ~/.ssh/id_rsa)"

- Register the public key in your personal GitHub account

- Settings > SSH and GPG Keys > SSH Keys > Authentication Keys

- Register the public key generated on the local server (WSL2 Ubuntu 24.04)

※ The key pair is registered in both Secrets Manager and GitHub because ArgoCD pulls the add-on and workload source code from GitHub (the repositories provided by the blog) over SSH, which requires SSH authentication.

ssh -T git@github.com

- Lab source code

- Symlink terraform.tfvars from the parent directory into environment, eks-blue, and eks-green

git clone https://github.com/aws-ia/terraform-aws-eks-blueprints.git

cd terraform-aws-eks-blueprints/patterns/blue-green-upgrade/

cp terraform.tfvars.example terraform.tfvars

ln -s ../terraform.tfvars environment/terraform.tfvars

ln -s ../terraform.tfvars eks-blue/terraform.tfvars

ln -s ../terraform.tfvars eks-green/terraform.tfvars
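Because all three stacks read the same variables through these symlinks, it can help to confirm that each link resolves before running Terraform. The following is a minimal sketch, assuming it is run from patterns/blue-green-upgrade/; the helper name check_tfvars_links is hypothetical and not part of the blueprint repository.

```shell
# check_tfvars_links is a hypothetical helper (not part of the blueprint repo).
# For each stack directory it reports whether terraform.tfvars is a symlink
# that resolves to an existing file. Returns non-zero if any link is broken.
check_tfvars_links() {
  local base="${1:-.}"
  local status=0
  local dir link
  for dir in environment eks-blue eks-green; do
    link="$base/$dir/terraform.tfvars"
    if [ -L "$link" ] && [ -e "$link" ]; then
      echo "ok      $dir -> $(readlink "$link")"
    else
      echo "broken  $dir"
      status=1
    fi
  done
  return "$status"
}
```

Calling `check_tfvars_links .` from the pattern directory prints one line per stack and returns a non-zero status if any link is missing or dangling.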

- terraform.tfvars

- Set the Region, Hosted Zone, and EKS role

- For eks_admin_role_name, look up an existing role and reuse it as-is

- For workloads, use the public lab repository as-is → ArgoCD pulls the source code over SSH and deploys it

# You should update the below variables

aws_region = "ap-northeast-2"

environment_name = "eks-blueprint"

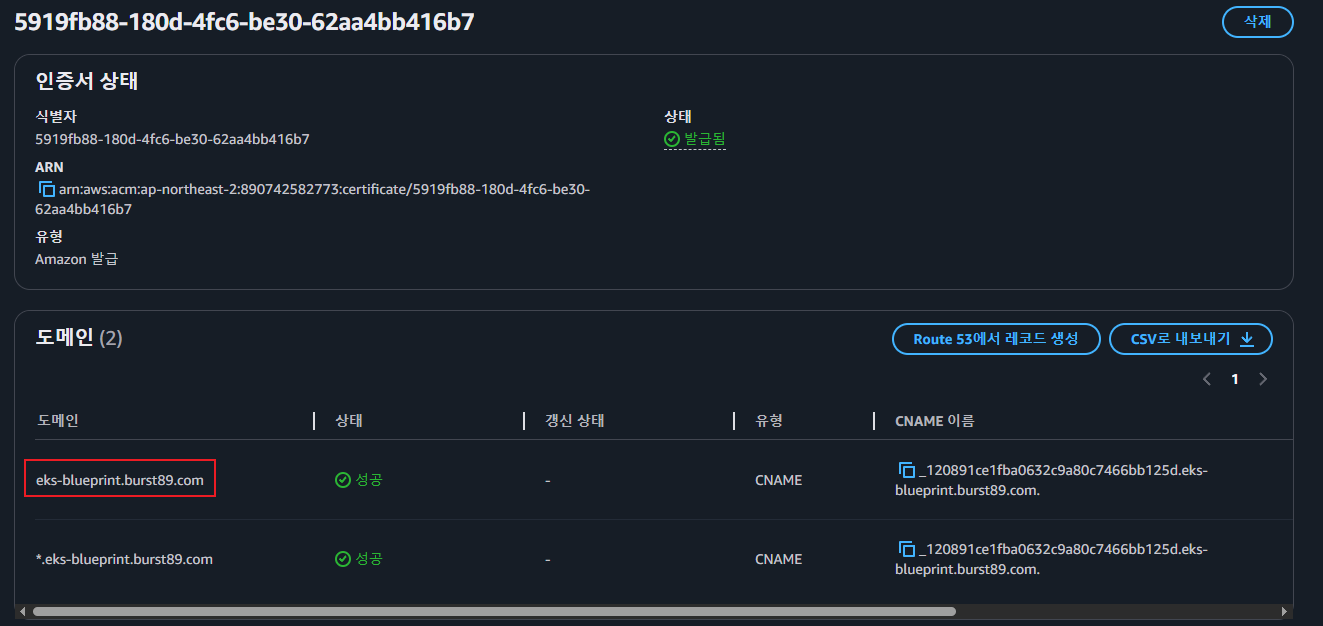

hosted_zone_name = "burst89.com" # your Existing Hosted Zone

eks_admin_role_name = "AWSServiceRoleForAmazonEKS" # Additional role admin in the cluster (usually the role I use in the AWS console)

#gitops_addons_org = "git@github.com:aws-samples"

#gitops_addons_repo = "eks-blueprints-add-ons"

#gitops_addons_path = "argocd/bootstrap/control-plane/addons"

#gitops_addons_basepath = "argocd/"

# EKS Blueprint Workloads ArgoCD App of App repository

gitops_workloads_org = "git@github.com:aws-samples"

gitops_workloads_repo = "eks-blueprints-workloads"

gitops_workloads_revision = "main"

gitops_workloads_path = "envs/dev"

# Secrets Manager secret for the GitHub SSH key

aws_secret_manager_git_private_ssh_key_name = "github-blueprint-ssh-key"

- Deploy environment

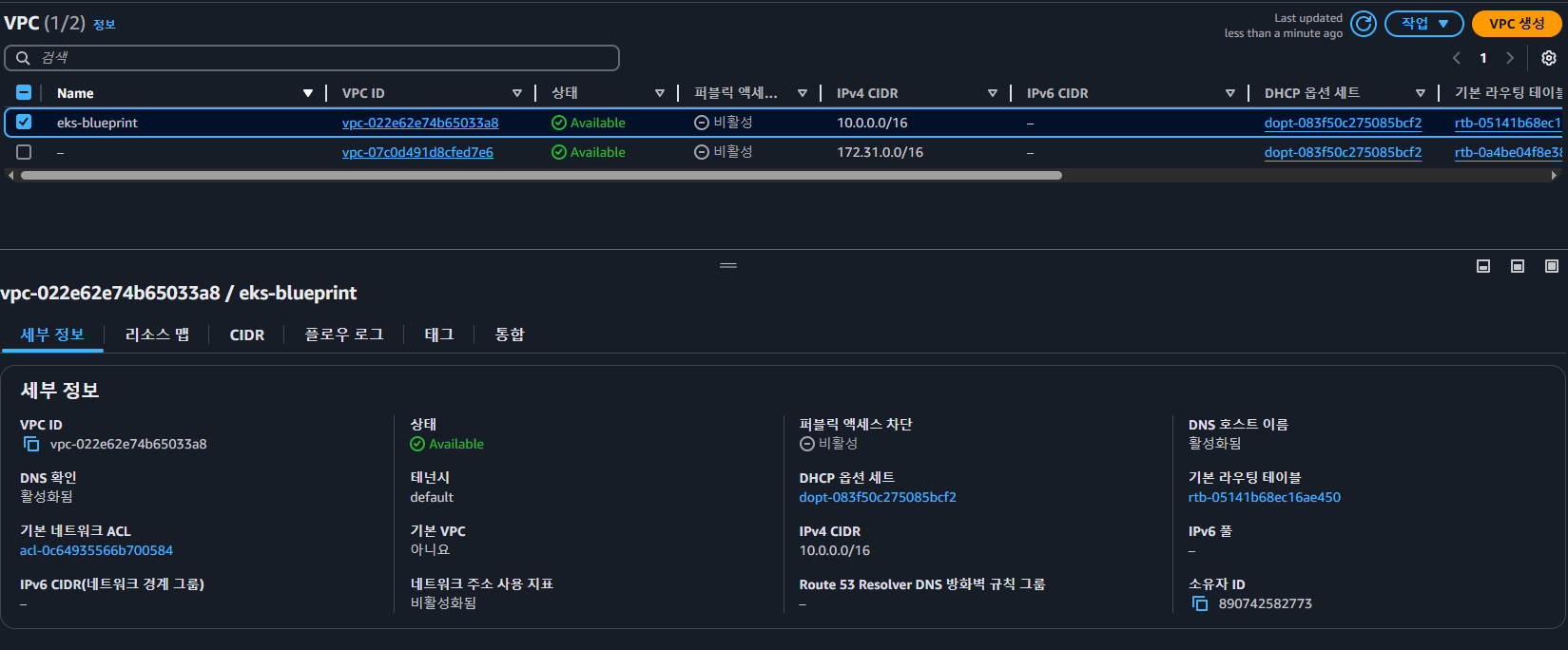

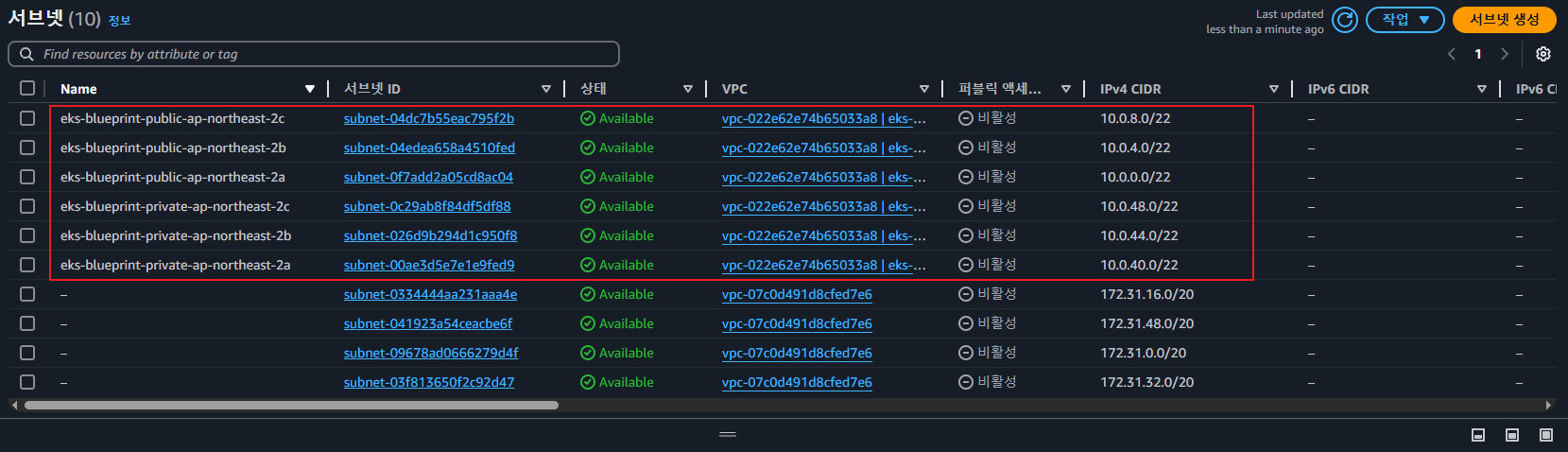

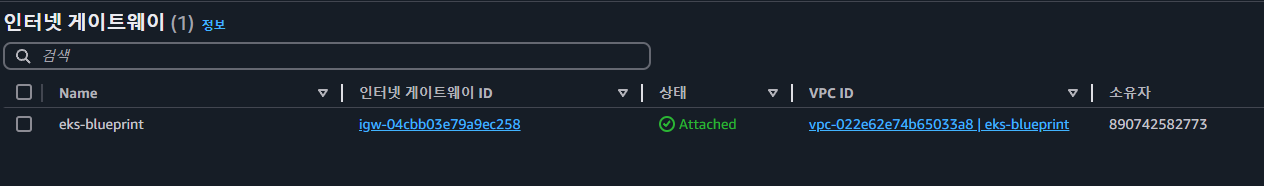

- Deploys the lab infrastructure: VPC, subnets, IGW, etc.

#environment

terraform init

terraform plan

terraform apply -auto-approve

- Deploy eks-blue and eks-green (about 20 minutes each)

#eks-blue

terraform init

terraform plan

terraform apply -auto-approve

#eks-green

terraform init

terraform plan

terraform apply -auto-approve

- Output after deployment

Apply complete! Resources: 130 added, 0 changed, 0 destroyed.

Outputs:

access_argocd = <<EOT

export KUBECONFIG="/tmp/eks-blueprint-blue"

aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue

echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"

echo "ArgoCD Username: admin"

echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.eks-blueprint --query SecretString --output text --region ap-northeast-2)"

EOT

configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue"

eks_blueprints_dev_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::890742582773:role/team-burnham-20250406071804104100000022",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::890742582773:role/team-riker-20250406071803760900000020",

]

eks_blueprints_ecsdemo_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::890742582773:role/team-ecsdemo-crystal-20250406071804738900000025",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::890742582773:role/team-ecsdemo-frontend-20250406071804318500000023",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::890742582773:role/team-ecsdemo-nodejs-20250406071804738900000024",

]

eks_blueprints_platform_teams_configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::890742582773:role/team-platform-2025040607180326170000001d"

eks_cluster_id = "eks-blueprint-blue"

gitops_metadata = <sensitive>

Outputs:

access_argocd = <<EOT

export KUBECONFIG="/tmp/eks-blueprint-green"

aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green

echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"

echo "ArgoCD Username: admin"

echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.eks-blueprint --query SecretString --output text --region ap-northeast-2)"

EOT

configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green"

eks_blueprints_dev_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::890742582773:role/team-burnham-20250406025920735600000021",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::890742582773:role/team-riker-20250406025920818800000022",

]

eks_blueprints_ecsdemo_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::890742582773:role/team-ecsdemo-crystal-20250406025921731700000025",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::890742582773:role/team-ecsdemo-frontend-20250406025921466600000024",

"aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::890742582773:role/team-ecsdemo-nodejs-20250406025921463700000023",

]

eks_blueprints_platform_teams_configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green --role-arn arn:aws:iam::890742582773:role/team-platform-2025040602591990500000001b"

eks_cluster_id = "eks-blueprint-green"

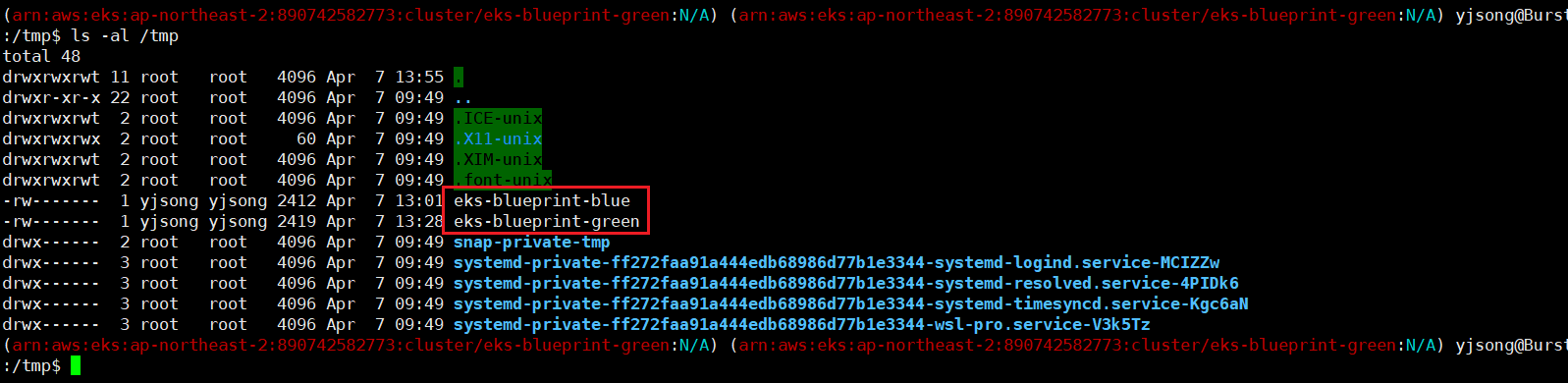

gitops_metadata = <sensitive>

- Set KUBECONFIG

export KUBECONFIG="/tmp/eks-blueprint-blue"

aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-blue

Added new context arn:aws:eks:ap-northeast-2:890742582773:cluster/eks-blueprint-blue to /tmp/eks-blueprint-blue


export KUBECONFIG="/tmp/eks-blueprint-green"

aws eks --region ap-northeast-2 update-kubeconfig --name eks-blueprint-green

Added new context arn:aws:eks:ap-northeast-2:890742582773:cluster/eks-blueprint-green to /tmp/eks-blueprint-green
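Since each cluster uses its own kubeconfig file, switching between them amounts to re-exporting KUBECONFIG. A small sketch of a helper for this, assuming environment_name is "eks-blueprint" as in the terraform.tfvars above; the function name use_cluster is hypothetical.

```shell
# use_cluster is a hypothetical convenience function.
# It points KUBECONFIG at the per-cluster file used in this walkthrough
# (/tmp/eks-blueprint-blue or /tmp/eks-blueprint-green).
use_cluster() {
  case "$1" in
    blue|green)
      export KUBECONFIG="/tmp/eks-blueprint-$1"
      ;;
    *)
      echo "usage: use_cluster blue|green" >&2
      return 1
      ;;
  esac
}
```

After `use_cluster green`, subsequent kubectl commands in the same shell target the green cluster, provided the update-kubeconfig step has already written that file.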

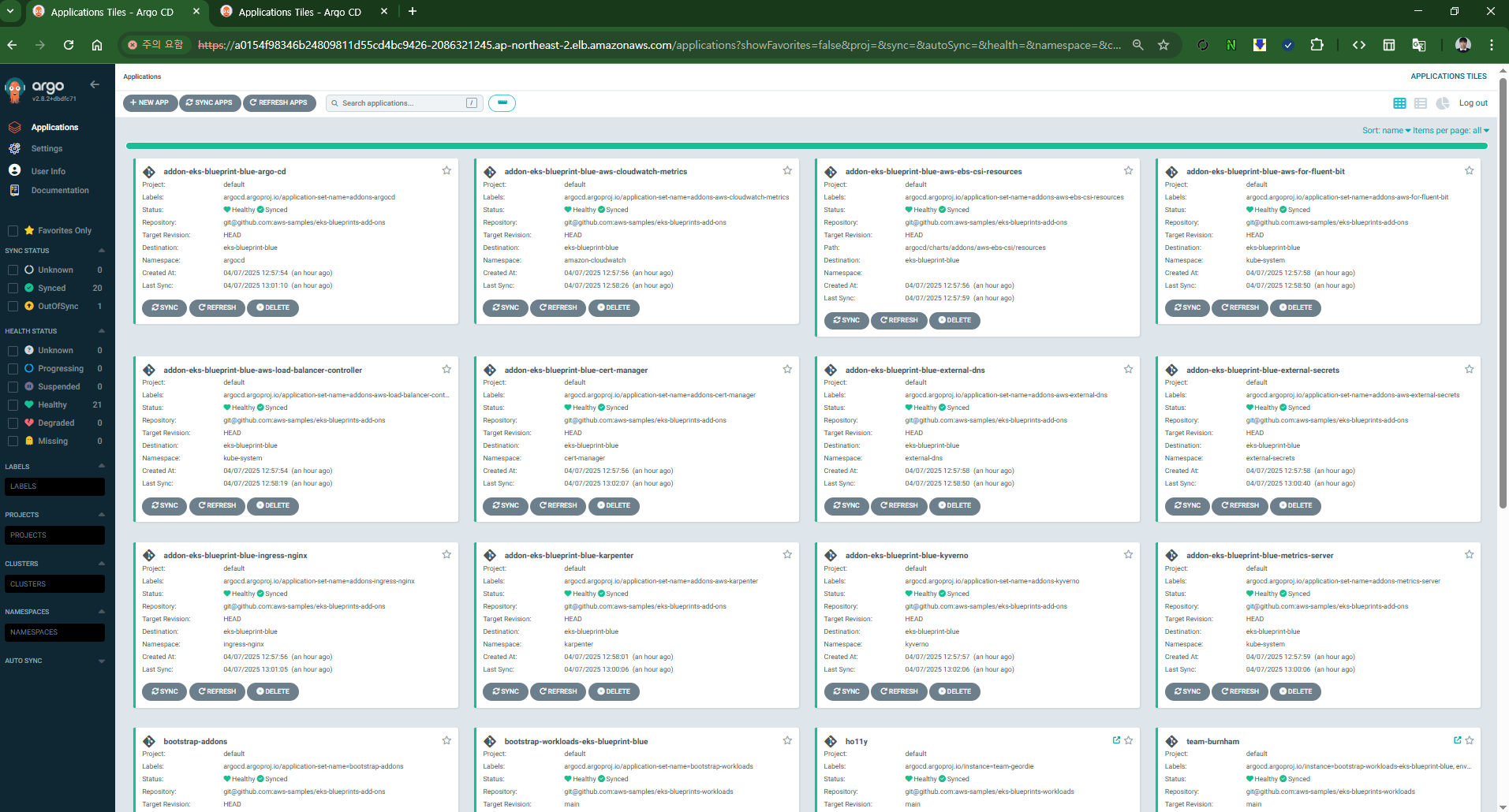

- Check the ArgoCD connection details

echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"

echo "ArgoCD Username: admin"

echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.eks-blueprint --query SecretString --output text --region ap-northeast-2)"

ArgoCD URL: https://acd839bd60b7f48d4b2f70ff6286b8b6-857535757.ap-northeast-2.elb.amazonaws.com

ArgoCD Username: admin

ArgoCD Password: iYPKJ!DCw]8G)9Hp

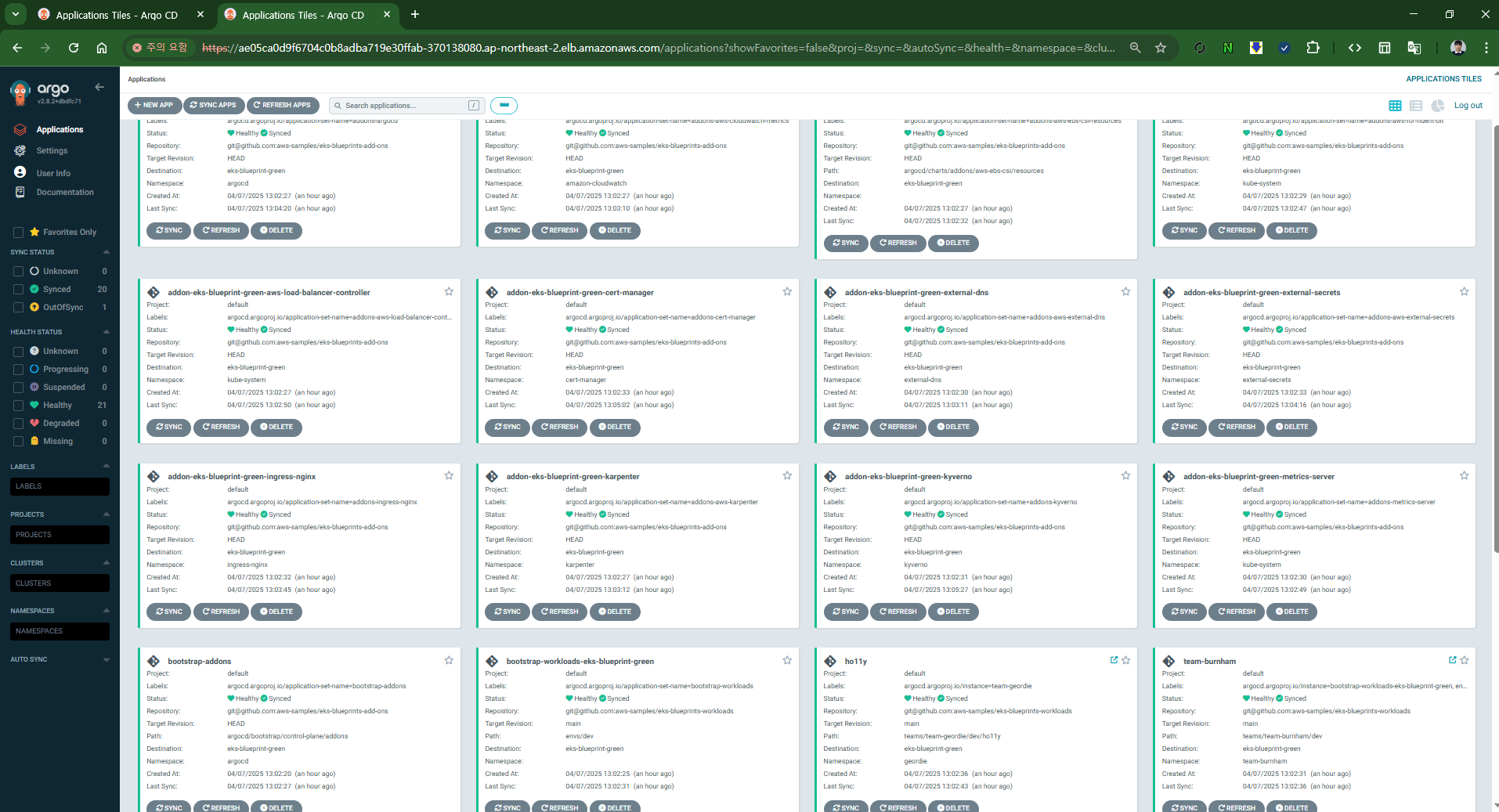

echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"

echo "ArgoCD Username: admin"

echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.eks-blueprint --query SecretString --output text --region ap-northeast-2)"

ArgoCD URL: https://ae05ca0d9f6704c0b8adba719e30ffab-370138080.ap-northeast-2.elb.amazonaws.com

ArgoCD Username: admin

ArgoCD Password: 8)h_+!75CP]!a)SJ

- eks-blue main.tf → confirm the Route 53 weights are set to 100 when the EKS cluster is deployed

- argocd_route53_weight = "100"

- route53_weight = "100"

- ecsfrontend_route53_weight = "100"

provider "aws" {

region = var.aws_region

}

provider "kubernetes" {

host = module.eks_cluster.eks_cluster_endpoint

cluster_ca_certificate = base64decode(module.eks_cluster.cluster_certificate_authority_data)

exec {

api_version = "client.authentication.k8s.io/v1beta1"

command = "aws"

args = ["eks", "get-token", "--cluster-name", module.eks_cluster.eks_cluster_id]

}

}

provider "helm" {

kubernetes {

host = module.eks_cluster.eks_cluster_endpoint

cluster_ca_certificate = base64decode(module.eks_cluster.cluster_certificate_authority_data)

exec {

api_version = "client.authentication.k8s.io/v1beta1"

command = "aws"

args = ["eks", "get-token", "--cluster-name", module.eks_cluster.eks_cluster_id]

}

}

}

module "eks_cluster" {

source = "../modules/eks_cluster"

aws_region = var.aws_region

service_name = "blue"

cluster_version = "1.26"

argocd_route53_weight = "100"

route53_weight = "100"

ecsfrontend_route53_weight = "100"

environment_name = var.environment_name

hosted_zone_name = var.hosted_zone_name

eks_admin_role_name = var.eks_admin_role_name

aws_secret_manager_git_private_ssh_key_name = var.aws_secret_manager_git_private_ssh_key_name

argocd_secret_manager_name_suffix = var.argocd_secret_manager_name_suffix

ingress_type = var.ingress_type

gitops_addons_org = var.gitops_addons_org

gitops_addons_repo = var.gitops_addons_repo

gitops_addons_basepath = var.gitops_addons_basepath

gitops_addons_path = var.gitops_addons_path

gitops_addons_revision = var.gitops_addons_revision

gitops_workloads_org = var.gitops_workloads_org

gitops_workloads_repo = var.gitops_workloads_repo

gitops_workloads_revision = var.gitops_workloads_revision

gitops_workloads_path = var.gitops_workloads_path

}

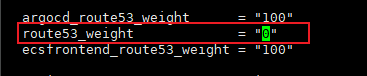

- eks-green main.tf → confirm the Route 53 weights are set to 0 when the EKS cluster is deployed

- argocd_route53_weight = "0"

- route53_weight = "0"

- ecsfrontend_route53_weight = "0"

provider "aws" {

region = var.aws_region

}

provider "kubernetes" {

host = module.eks_cluster.eks_cluster_endpoint

cluster_ca_certificate = base64decode(module.eks_cluster.cluster_certificate_authority_data)

exec {

api_version = "client.authentication.k8s.io/v1beta1"

command = "aws"

args = ["eks", "get-token", "--cluster-name", module.eks_cluster.eks_cluster_id]

}

}

provider "helm" {

kubernetes {

host = module.eks_cluster.eks_cluster_endpoint

cluster_ca_certificate = base64decode(module.eks_cluster.cluster_certificate_authority_data)

exec {

api_version = "client.authentication.k8s.io/v1beta1"

command = "aws"

args = ["eks", "get-token", "--cluster-name", module.eks_cluster.eks_cluster_id]

}

}

}

module "eks_cluster" {

source = "../modules/eks_cluster"

aws_region = var.aws_region

service_name = "green"

cluster_version = "1.27" # Here, we deploy the cluster with the N+1 Kubernetes Version

argocd_route53_weight = "0" # These parameters control how much traffic is sent to the workloads in the new cluster

route53_weight = "0"

ecsfrontend_route53_weight = "0"

environment_name = var.environment_name

hosted_zone_name = var.hosted_zone_name

eks_admin_role_name = var.eks_admin_role_name

aws_secret_manager_git_private_ssh_key_name = var.aws_secret_manager_git_private_ssh_key_name

argocd_secret_manager_name_suffix = var.argocd_secret_manager_name_suffix

ingress_type = var.ingress_type

gitops_addons_org = var.gitops_addons_org

gitops_addons_repo = var.gitops_addons_repo

gitops_addons_basepath = var.gitops_addons_basepath

gitops_addons_path = var.gitops_addons_path

gitops_addons_revision = var.gitops_addons_revision

gitops_workloads_org = var.gitops_workloads_org

gitops_workloads_repo = var.gitops_workloads_repo

gitops_workloads_revision = var.gitops_workloads_revision

gitops_workloads_path = var.gitops_workloads_path

}
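The three weight parameters are the only lines that differ in intent between the two main.tf files, and route53_weight is the one changed later in this walkthrough. As a rough sketch, the edit could be scripted instead of done by hand; the function set_route53_weight is hypothetical, and the demo below works on a temporary file rather than the real main.tf.

```shell
# set_route53_weight FILE WEIGHT (hypothetical helper)
# Rewrites only the bare `route53_weight = "N"` assignment in FILE.
# argocd_route53_weight and ecsfrontend_route53_weight are left untouched
# because the pattern is anchored to the start of the line.
set_route53_weight() {
  local file="$1"
  local weight="$2"
  sed "s/^\([[:space:]]*\)route53_weight\([[:space:]]*\)=\([[:space:]]*\)\"[0-9]*\"/\1route53_weight\2=\3\"${weight}\"/" \
    "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Demo on a throwaway file that mimics the relevant lines of eks-green/main.tf.
demo_file=$(mktemp)
printf '%s\n' \
  'argocd_route53_weight = "0"' \
  'route53_weight = "0"' \
  'ecsfrontend_route53_weight = "0"' > "$demo_file"
set_route53_weight "$demo_file" 100
cat "$demo_file"
rm -f "$demo_file"
```

In the demo only the middle line changes to "100". Against the real file, the change would still need a `terraform apply` in the eks-green directory to reach Route 53.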

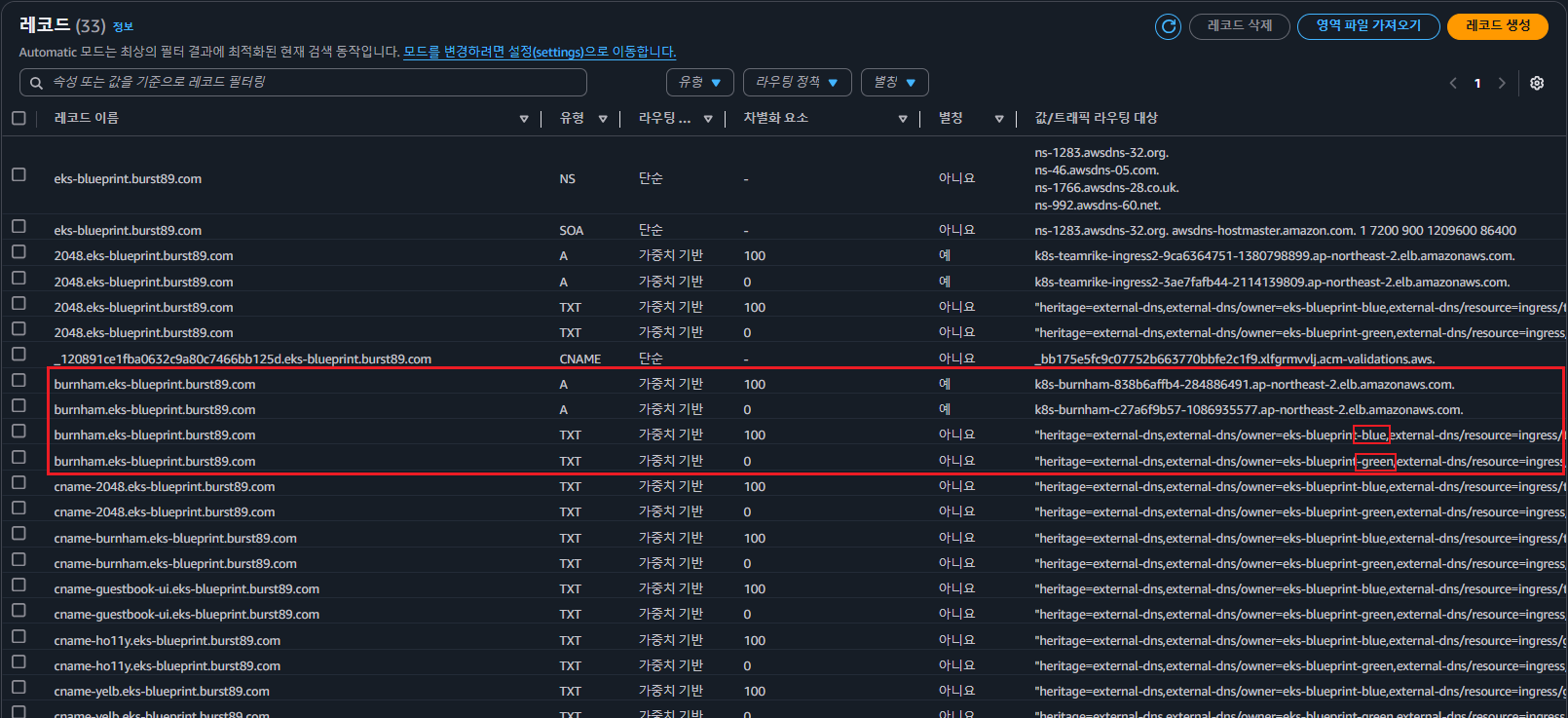

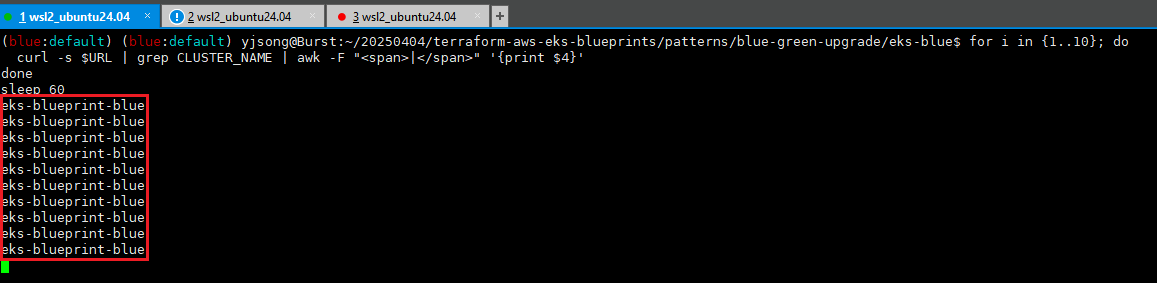

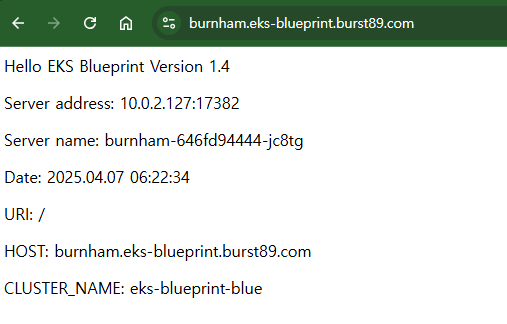

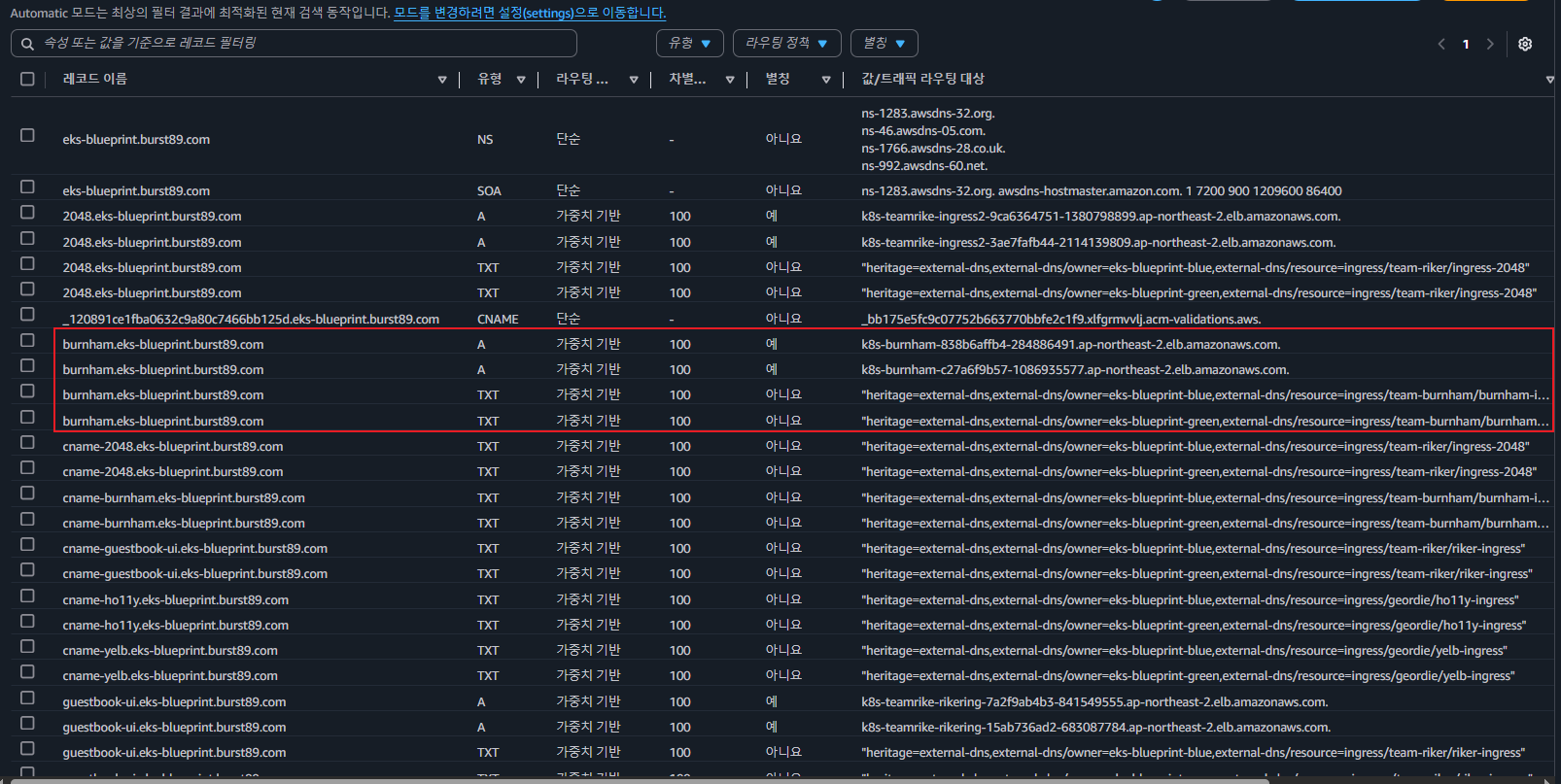

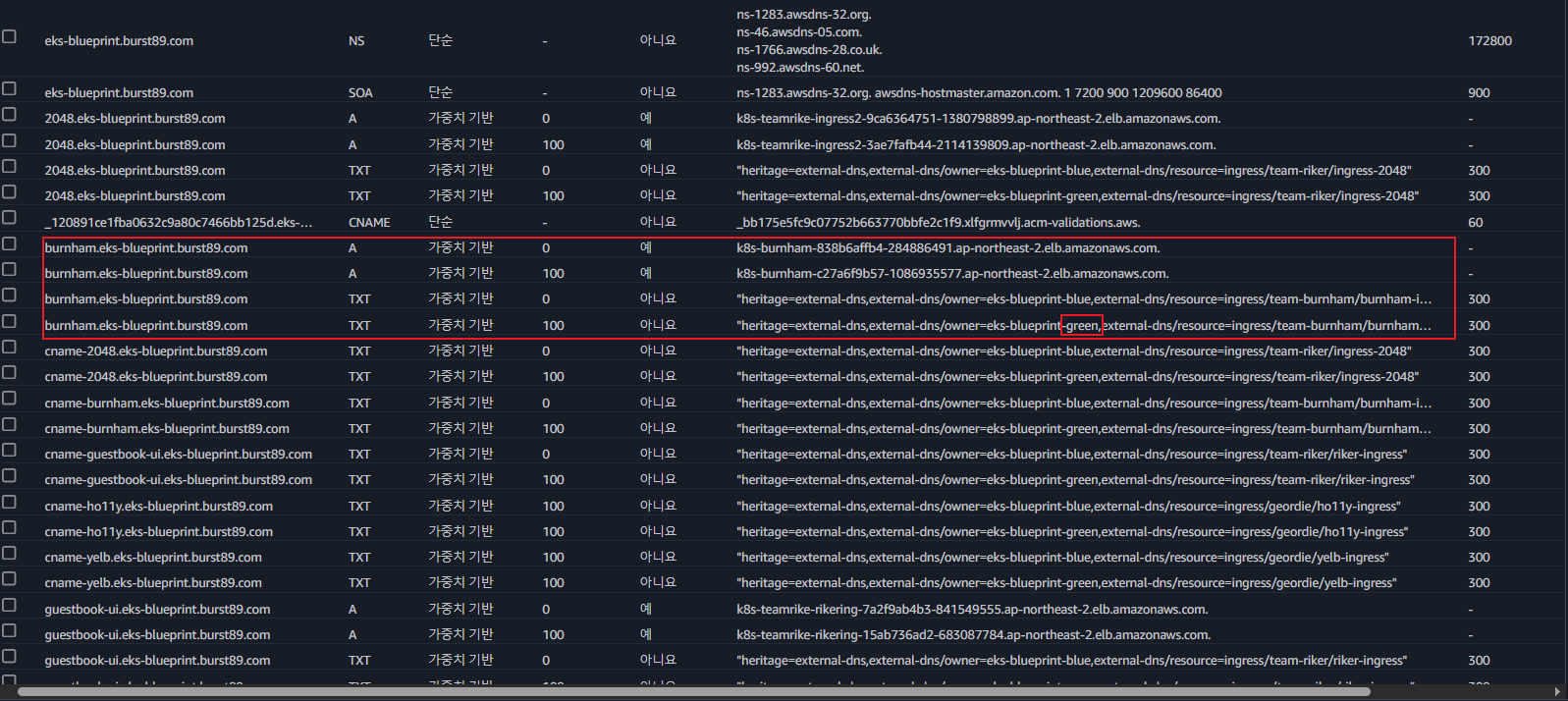

- Check the Route 53 records (eks-blue weight 100)

- Check burnham-ingress

- Because the eks-blue weight is 100, requests to burnham-ingress are served by the eks-blue cluster

URL=$(echo -n "https://" ; kubectl get ing -n team-burnham burnham-ingress -o json | jq ".spec.rules[0].host" -r)

curl -s $URL | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'

eks-blueprint-blue

- Check with 10 requests

for i in {1..10}; do

curl -s $URL | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'

done

sleep 60
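The grep/awk pipeline used above works because awk splits each matching line on `<span>` and `</span>`, which puts the cluster name in the fourth field. The sketch below wraps that pipeline in a function and runs it against a sample line; the sample markup is a hypothetical stand-in, since the real page may be structured differently.

```shell
# extract_cluster_name reads HTML on stdin and prints the value that follows
# the CLUSTER_NAME label, using the same grep/awk pipeline as the walkthrough.
extract_cluster_name() {
  grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'
}

# Hypothetical sample line; the real page markup may differ.
# Fields after splitting: $1="<td>", $2="CLUSTER_NAME",
# $3="</td><td>", $4="eks-blueprint-blue".
sample='<td><span>CLUSTER_NAME</span></td><td><span>eks-blueprint-blue</span></td>'
printf '%s\n' "$sample" | extract_cluster_name
```

With the sample line this prints eks-blueprint-blue. Against the live site it would be used as `curl -s "$URL" | extract_cluster_name`.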

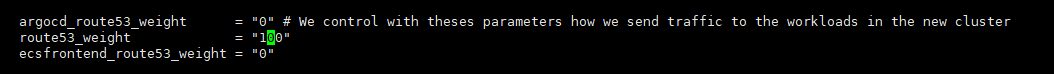

- Check the Route 53 records (eks-blue weight 100 / eks-green weight 100)

- Edit eks-green main.tf

- route53_weight = "0" → route53_weight = "100"

- Run terraform apply after the change

- Check burnham-ingress

URL=$(echo -n "https://" ; kubectl get ing -n team-burnham burnham-ingress -o json | jq ".spec.rules[0].host" -r)

curl -s $URL | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'

eks-blueprint-blue

eks-blueprint-blue

eks-blueprint-blue

eks-blueprint-green

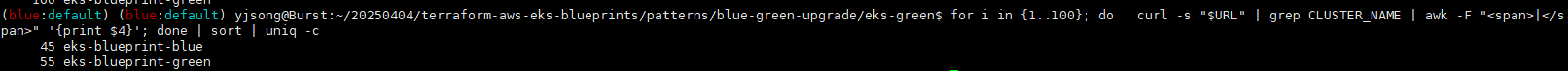

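eks-blueprint-green

- Check with 100 requests

- In theory, with both weights at 100 the split should be 50% blue / 50% green, but because resolvers cache the DNS answer for the record's TTL, responses do not strictly alternate between the clusters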

for i in {1..100}; do

curl -s "$URL" | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'

done | sort | uniq -c
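The 50/50 expectation follows from how weighted routing picks a record: each record is chosen with probability equal to its weight divided by the sum of all weights in the group. A short sketch of that arithmetic, using integer percentages; the function name expected_share is hypothetical.

```shell
# expected_share WEIGHT TOTAL (hypothetical helper)
# Prints the approximate percentage of DNS answers a record should receive:
# weight / sum of all weights, using integer division.
expected_share() {
  local weight="$1"
  local total="$2"
  if [ "$total" -eq 0 ]; then
    echo "n/a"   # the all-zero case is not modelled in this sketch
    return 0
  fi
  echo $(( weight * 100 / total ))
}

blue=100
green=100
total=$(( blue + green ))
echo "blue:  $(expected_share "$blue" "$total")%"
echo "green: $(expected_share "$green" "$total")%"
```

With both weights at 100 each line reports 50%. The counts from a short run of requests can still deviate noticeably from this, since cached DNS answers are reused until the TTL expires.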

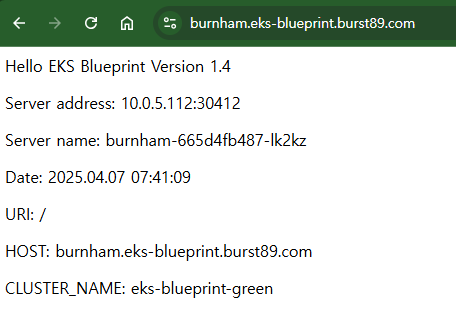

- Check the Route 53 records (eks-green weight 100 / eks-blue weight 0)

- Check burnham-ingress

- Because the eks-green weight is 100, requests to burnham-ingress are served by the eks-green cluster

URL=$(echo -n "https://" ; kubectl get ing -n team-burnham burnham-ingress -o json | jq ".spec.rules[0].host" -r)

curl -s $URL | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'

eks-blueprint-green

- Check with 100 requests

- All 100 requests are served by the eks-green cluster

for i in {1..100}; do

curl -s "$URL" | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'

done | sort | uniq -c
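Rather than reading the `uniq -c` output by eye, the same stream of cluster names can be turned into a pass/fail check. This is a minimal sketch; all_from_cluster is a hypothetical helper, and the input here is a hard-coded sample instead of live curl output.

```shell
# all_from_cluster NAME (hypothetical helper)
# Reads one cluster name per line on stdin and succeeds only if there is
# at least one line and every line equals NAME.
all_from_cluster() {
  local expected="$1"
  awk -v want="$expected" '
    { total++; if ($0 != want) other++ }
    END { exit (total > 0 && other == 0) ? 0 : 1 }
  '
}

# Sample input standing in for the output of the curl loop.
if printf '%s\n' eks-blueprint-green eks-blueprint-green | all_from_cluster eks-blueprint-green; then
  echo "all requests served by eks-blueprint-green"
fi
```

In the real check, the for loop's output would be piped into `all_from_cluster eks-blueprint-green` in place of the sample lines.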
