
Istio gateways: Getting traffic into a cluster

- Lab environment: kind - k8s 1.23.17

- NodePort(30000 HTTP, 30005 HTTPS)

#

git clone https://github.com/AcornPublishing/istio-in-action

cd istio-in-action/book-source-code-master

pwd # note your own working-directory path

code .

# If you omit extraMounts below, you can enter the myk8s-control-plane container (sh/bash) and run git clone there instead

kind create cluster --name myk8s --image kindest/node:v1.23.17 --config - <<EOF

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30000 # Sample Application (istio-ingressgateway) HTTP
    hostPort: 30000
  - containerPort: 30001 # Prometheus
    hostPort: 30001
  - containerPort: 30002 # Grafana
    hostPort: 30002
  - containerPort: 30003 # Kiali
    hostPort: 30003
  - containerPort: 30004 # Tracing
    hostPort: 30004
  - containerPort: 30005 # Sample Application (istio-ingressgateway) HTTPS
    hostPort: 30005
  - containerPort: 30006 # TCP Route
    hostPort: 30006
  - containerPort: 30007 # New Gateway
    hostPort: 30007
  extraMounts: # this section can be omitted
  - hostPath: /Users/gasida/Downloads/istio-in-action/book-source-code-master # set this to your own pwd path
    containerPath: /istiobook
networking:
  podSubnet: 10.10.0.0/16
  serviceSubnet: 10.200.1.0/24

EOF

# Verify the installation

docker ps

# Install basic tools on the node

docker exec -it myk8s-control-plane sh -c 'apt update && apt install tree psmisc lsof wget bridge-utils net-tools dnsutils tcpdump ngrep iputils-ping git vim -y'

# (Optional) metrics-server

helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/

helm install metrics-server metrics-server/metrics-server --set 'args[0]=--kubelet-insecure-tls' -n kube-system

kubectl get all -n kube-system -l app.kubernetes.io/instance=metrics-server

- istio 1.17.8(’23.10.11)

# Enter myk8s-control-plane and run the installation there

docker exec -it myk8s-control-plane bash

-----------------------------------

# (Optional) Check that the code files are mounted

tree /istiobook/ -L 1

or

git clone ... /istiobook

# Install istioctl

export ISTIOV=1.17.8

echo 'export ISTIOV=1.17.8' >> /root/.bashrc

curl -s -L https://istio.io/downloadIstio | ISTIO_VERSION=$ISTIOV sh -

cp istio-$ISTIOV/bin/istioctl /usr/local/bin/istioctl

istioctl version --remote=false

# Deploy the control plane with the default profile

istioctl install --set profile=default -y

# Verify the installation: istiod, istio-ingressgateway, CRDs, etc.

kubectl get istiooperators -n istio-system -o yaml

kubectl get all,svc,ep,sa,cm,secret,pdb -n istio-system

kubectl get cm -n istio-system istio -o yaml

kubectl get crd | grep istio.io | sort

# Install the add-on tools

kubectl apply -f istio-$ISTIOV/samples/addons

kubectl get pod -n istio-system

# Exit the container

exit

-----------------------------------

# Set up the namespace for the lab

kubectl create ns istioinaction

kubectl label namespace istioinaction istio-injection=enabled

kubectl get ns --show-labels

# istio-ingressgateway service: switch to NodePort, pin the nodePort values, and set externalTrafficPolicy (to preserve the client IP)

kubectl patch svc -n istio-system istio-ingressgateway -p '{"spec": {"type": "NodePort", "ports": [{"port": 80, "targetPort": 8080, "nodePort": 30000}]}}'

kubectl patch svc -n istio-system istio-ingressgateway -p '{"spec": {"type": "NodePort", "ports": [{"port": 443, "targetPort": 8443, "nodePort": 30005}]}}'

kubectl patch svc -n istio-system istio-ingressgateway -p '{"spec":{"externalTrafficPolicy": "Local"}}'

kubectl describe svc -n istio-system istio-ingressgateway

# Switch to NodePort and pin nodePorts 30001~30004: prometheus(30001), grafana(30002), kiali(30003), tracing(30004)

kubectl patch svc -n istio-system prometheus -p '{"spec": {"type": "NodePort", "ports": [{"port": 9090, "targetPort": 9090, "nodePort": 30001}]}}'

kubectl patch svc -n istio-system grafana -p '{"spec": {"type": "NodePort", "ports": [{"port": 3000, "targetPort": 3000, "nodePort": 30002}]}}'

kubectl patch svc -n istio-system kiali -p '{"spec": {"type": "NodePort", "ports": [{"port": 20001, "targetPort": 20001, "nodePort": 30003}]}}'

kubectl patch svc -n istio-system tracing -p '{"spec": {"type": "NodePort", "ports": [{"port": 80, "targetPort": 16686, "nodePort": 30004}]}}'
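The add-on patches above differ only in three numbers, so the patch body can be generated. A minimal sketch, assuming a hypothetical helper named nodeport_patch (it is not part of kubectl or Istio); the kubectl line in the comment needs the cluster from this lab.

```shell
# Build the JSON patch body for one NodePort mapping.
# nodeport_patch is a hypothetical helper introduced only for illustration.
nodeport_patch() {
  port="$1"; target_port="$2"; node_port="$3"
  printf '{"spec": {"type": "NodePort", "ports": [{"port": %s, "targetPort": %s, "nodePort": %s}]}}' \
    "$port" "$target_port" "$node_port"
}

# Usage (requires the cluster from this walkthrough):
#   kubectl patch svc -n istio-system prometheus -p "$(nodeport_patch 9090 9090 30001)"
```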

# Open Prometheus: check the envoy and istio metrics

open http://127.0.0.1:30001

# Open Grafana

open http://127.0.0.1:30002

# Open Kiali, option 1: NodePort

open http://127.0.0.1:30003

# (Optional) Open Kiali, option 2: port forward

kubectl port-forward deployment/kiali -n istio-system 20001:20001 &

open http://127.0.0.1:20001

# Open tracing: the Jaeger tracing dashboard

open http://127.0.0.1:30004

# Create a netshoot pod for connectivity tests

cat <<EOF | kubectl apply -f -

apiVersion: v1
kind: Pod
metadata:
  name: netshoot
spec:
  containers:
  - name: netshoot
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0

EOF

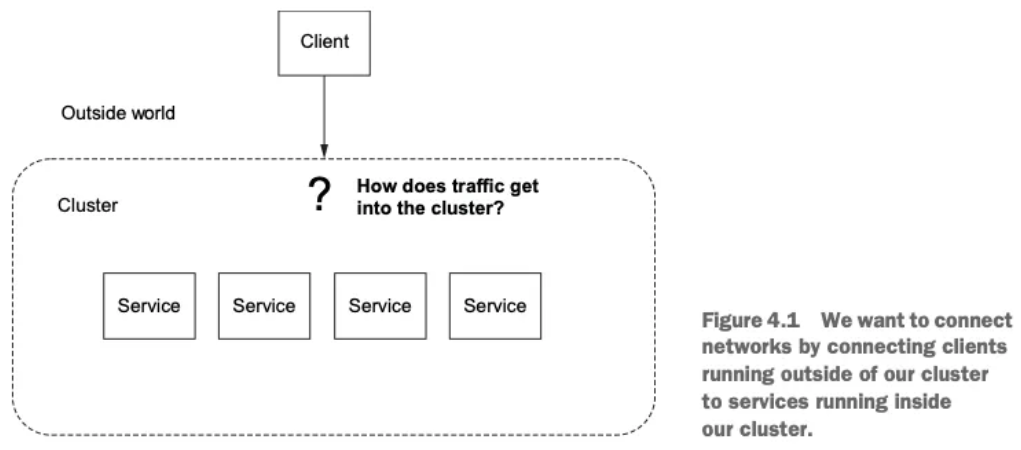

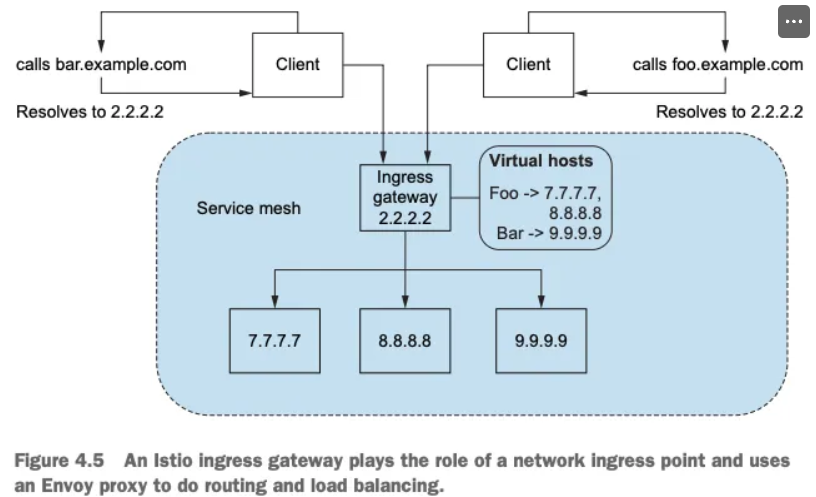

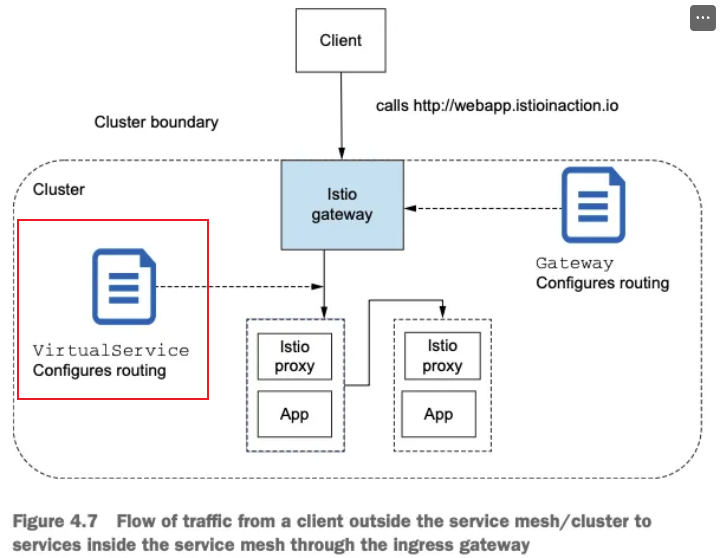

Istio ingress gateways

- Gateway(L4/L5) , VirtualService(L7)

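- Istio has the concept of an ingress gateway, which serves as the network ingress point: it guards and controls access to the cluster for traffic that originates outside the cluster

- It also handles load balancing and virtual-host routing

- The Istio ingress gateway admits traffic into the cluster and also acts as a reverse proxy

- Istio uses a single Envoy proxy as the ingress gateway.

- Envoy is a service-to-service proxy, but it can also load-balance and route traffic from outside the service mesh to services inside it

- All of Envoy's features are also available in the ingress gateway.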

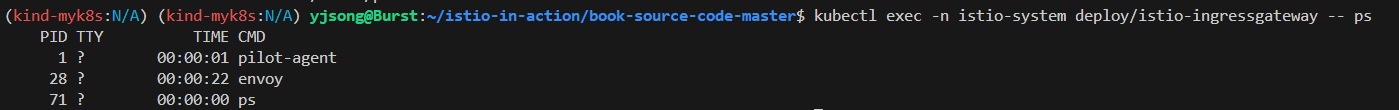

- Verify that the Istio service proxy (Envoy) is actually running in the Istio ingress gateway

# The pod runs a single container: no separate application container is needed.

kubectl get pod -n istio-system -l app=istio-ingressgateway

NAME READY STATUS RESTARTS AGE

istio-ingressgateway-996bc6bb6-p6k79 1/1 Running 0 12m

# Check proxy status

docker exec -it myk8s-control-plane istioctl proxy-status

NAME CLUSTER CDS LDS EDS RDS ECDS ISTIOD VERSION

istio-ingressgateway-996bc6bb6-p6k79.istio-system Kubernetes SYNCED SYNCED SYNCED NOT SENT NOT SENT istiod-7df6ffc78d-w9szx 1.17.8

# Check proxy configuration

docker exec -it myk8s-control-plane istioctl proxy-config all deploy/istio-ingressgateway.istio-system

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/istio-ingressgateway.istio-system

ADDRESS PORT MATCH DESTINATION

0.0.0.0 15021 ALL Inline Route: /healthz/ready*

0.0.0.0 15090 ALL Inline Route: /stats/prometheus*

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system

NAME DOMAINS MATCH VIRTUAL SERVICE

* /stats/prometheus*

* /healthz/ready*

docker exec -it myk8s-control-plane istioctl proxy-config cluster deploy/istio-ingressgateway.istio-system

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/istio-ingressgateway.istio-system

docker exec -it myk8s-control-plane istioctl proxy-config log deploy/istio-ingressgateway.istio-system

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/istio-ingressgateway.istio-system

# Configuration reference

kubectl get istiooperators -n istio-system -o yaml

# The pilot-agent process bootstraps envoy

kubectl exec -n istio-system deploy/istio-ingressgateway -- ps

PID TTY TIME CMD

1 ? 00:00:00 pilot-agent

23 ? 00:00:06 envoy

47 ? 00:00:00 ps

kubectl exec -n istio-system deploy/istio-ingressgateway -- ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

istio-p+ 1 0.1 0.3 753064 48876 ? Ssl 03:57 0:00 /usr/local/bin/pilot-agent proxy router --domain istio-system.svc.cluster.local --proxyLogLevel=warning --proxyComponentLogLevel=misc:error --log_output_level=default:info

istio-p+ 23 1.1 0.4 276224 57864 ? Sl 03:57 0:06 /usr/local/bin/envoy -c etc/istio/proxy/envoy-rev.json --drain-time-s 45 --drain-strategy immediate --local-address-ip-version v4 --file-flush-interval-msec 1000 --disable-hot-restart --allow-unknown-static-fields --log-format %Y-%m-%dT%T.%fZ.%l.envoy %n %g:%#.%v.thread=%t -l warning --component-log-level misc:error

istio-p+ 53 0.0 0.0 6412 2484 ? Rs 04:07 0:00 ps aux

# Check which user runs the processes

kubectl exec -n istio-system deploy/istio-ingressgateway -- whoami

istio-proxy

kubectl exec -n istio-system deploy/istio-ingressgateway -- id

uid=1337(istio-proxy) gid=1337(istio-proxy) groups=1337(istio-proxy)

- The pilot-agent process performs the initial Envoy configuration and bootstraps it

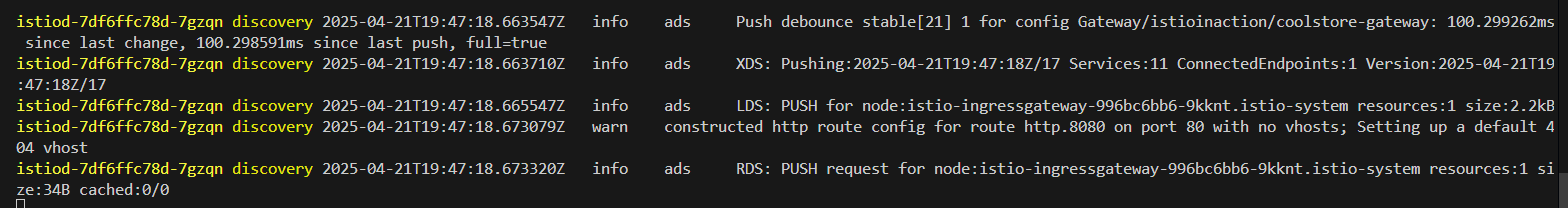

Specifying Gateway resources (hands-on)

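- To configure an ingress gateway in Istio, use a Gateway resource to specify the ports to open and the virtual hosts to allow on those ports.

- The example Gateway resource below is very simple: it opens an HTTP port on port 80 that accepts traffic for the virtual host webapp.istioinaction.io.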

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: coolstore-gateway #(1) Gateway name
spec:
  selector:
    istio: ingressgateway #(2) Which gateway implementation?
  servers:
  - port:
      number: 80 #(3) Port to expose
      name: http
      protocol: HTTP
    hosts:
    - "webapp.istioinaction.io" #(4) Host for this port

# New terminal: istiod logs

kubectl stern -n istio-system -l app=istiod

istiod-7df6ffc78d-w9szx discovery 2025-04-13T04:50:04.531700Z info ads Push debounce stable[20] 1 for config Gateway/istioinaction/coolstore-gateway: 100.4665ms since last change, 100.466166ms since last push, full=true

istiod-7df6ffc78d-w9szx discovery 2025-04-13T04:50:04.532520Z info ads XDS: Pushing:2025-04-13T04:50:04Z/14 Services:12 ConnectedEndpoints:1 Version:2025-04-13T04:50:04Z/14

istiod-7df6ffc78d-w9szx discovery 2025-04-13T04:50:04.537272Z info ads LDS: PUSH for node:istio-ingressgateway-996bc6bb6-p6k79.istio-system resources:1 size:2.2kB

istiod-7df6ffc78d-w9szx discovery 2025-04-13T04:50:04.545298Z warn constructed http route config for route http.8080 on port 80 with no vhosts; Setting up a default 404 vhost

istiod-7df6ffc78d-w9szx discovery 2025-04-13T04:50:04.545584Z info ads RDS: PUSH request for node:istio-ingressgateway-996bc6bb6-p6k79.istio-system resources:1 size:34B cached:0/0

# Terminal 2

cat ch4/coolstore-gw.yaml

kubectl -n istioinaction apply -f ch4/coolstore-gw.yaml

# Verify

kubectl get gw,vs -n istioinaction

NAME AGE

gateway.networking.istio.io/coolstore-gateway 26m

#

docker exec -it myk8s-control-plane istioctl proxy-status

NAME CLUSTER CDS LDS EDS RDS ECDS ISTIOD VERSION

istio-ingressgateway-996bc6bb6-p6k79.istio-system Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-7df6ffc78d-w9szx 1.17.8

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/istio-ingressgateway.istio-system

ADDRESS PORT MATCH DESTINATION

0.0.0.0 8080 ALL Route: http.8080

...

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system

NAME DOMAINS MATCH VIRTUAL SERVICE

http.8080 * /* 404

...

# What does http.8080 mean? What are the other ports for?

kubectl get svc -n istio-system istio-ingressgateway -o jsonpath="{.spec.ports}" | jq

[

{

"name": "status-port",

"nodePort": 31674,

"port": 15021,

"protocol": "TCP",

"targetPort": 15021

},

{

"name": "http2",

"nodePort": 30000, # step 1

"port": 80,

"protocol": "TCP",

"targetPort": 8080 # step 2

},

{

"name": "https",

"nodePort": 30005,

"port": 443,

"protocol": "TCP",

"targetPort": 8443

}

]

# The HTTP port (80) is exposed correctly. There is no VirtualService yet.

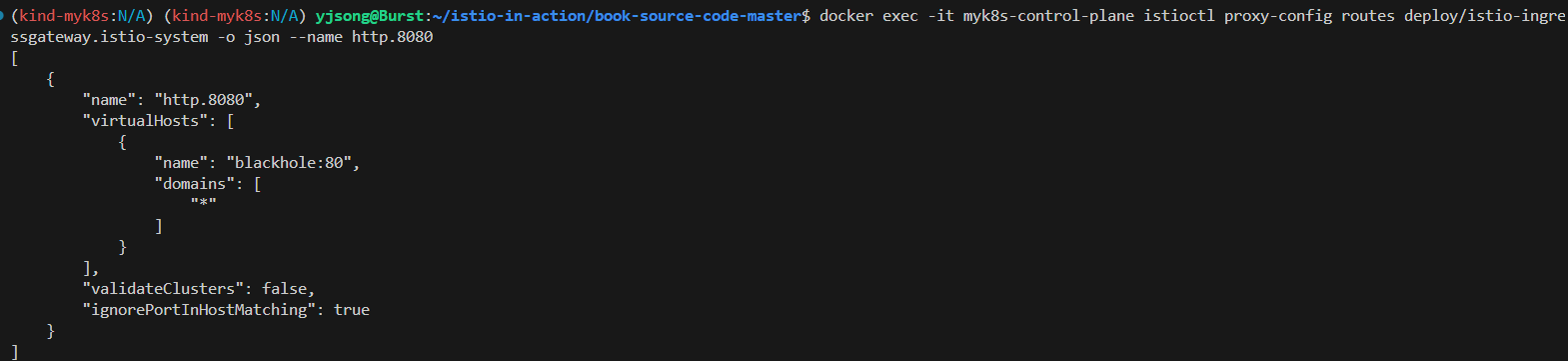

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system -o json

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system -o json --name http.8080

[

{

"name": "http.8080",

"virtualHosts": [

{

"name": "blackhole:80",

"domains": [

"*"

]

}

],

"validateClusters": false,

"ignorePortInHostMatching": true

}

]

Gateway routing with virtual services (hands-on)

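- Istio Gateway

- Exposes a specific port, expects a specific protocol on that port, and defines the specific hosts to serve on that port/protocol pair

- Once traffic enters the gateway, it needs a way to reach a specific service inside the service mesh

- VirtualService

- In Istio, a VirtualService resource defines how clients talk to a specific service: its FQDN, which service versions are available, and other routing properties (retries, request timeouts, and so on).

- The VirtualService resource defines what to do with traffic entering through the gateway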

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: webapp-vs-from-gw #1 VirtualService name
spec:
  hosts:
  - "webapp.istioinaction.io" #2 Virtual hostname (or hostnames) to match
  gateways:
  - coolstore-gateway #3 Gateway this VirtualService applies to
  http:
  - route:
    - destination: #4 Destination service for this traffic
        host: webapp
        port:
          number: 80

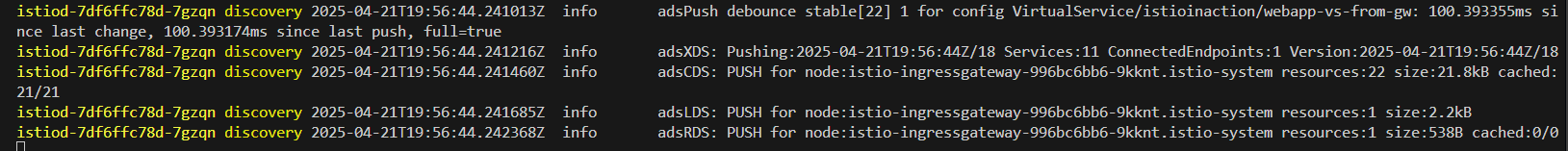

# New terminal: istiod logs

kubectl stern -n istio-system -l app=istiod

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:15:16.485143Z info ads Push debounce stable[21] 1 for config VirtualService/istioinaction/webapp-vs-from-gw: 102.17225ms since last change, 102.172083ms since last push, full=true

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:15:16.485918Z info ads XDS: Pushing:2025-04-13T05:15:16Z/15 Services:12 ConnectedEndpoints:1 Version:2025-04-13T05:15:16Z/15

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:15:16.487330Z info ads CDS: PUSH for node:istio-ingressgateway-996bc6bb6-p6k79.istio-system resources:23 size:22.9kB cached:22/22

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:15:16.488346Z info ads LDS: PUSH for node:istio-ingressgateway-996bc6bb6-p6k79.istio-system resources:1 size:2.2kB

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:15:16.489937Z info ads RDS: PUSH for node:istio-ingressgateway-996bc6bb6-p6k79.istio-system resources:1 size:538B cached:0/0

#

cat ch4/coolstore-vs.yaml

kubectl apply -n istioinaction -f ch4/coolstore-vs.yaml

# Verify

kubectl get gw,vs -n istioinaction

NAME AGE

gateway.networking.istio.io/coolstore-gateway 26m

NAME GATEWAYS HOSTS AGE

virtualservice.networking.istio.io/webapp-vs-from-gw ["coolstore-gateway"] ["webapp.istioinaction.io"] 49s

#

docker exec -it myk8s-control-plane istioctl proxy-status

NAME CLUSTER CDS LDS EDS RDS ECDS ISTIOD VERSION

istio-ingressgateway-996bc6bb6-p6k79.istio-system Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-7df6ffc78d-w9szx 1.17.8

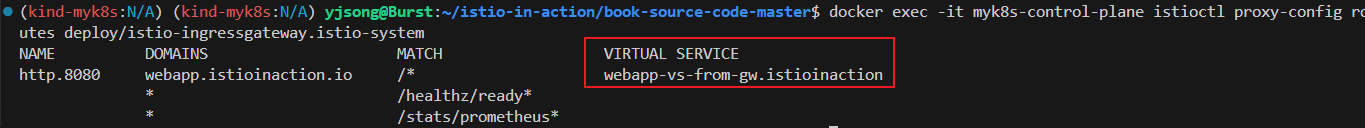

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/istio-ingressgateway.istio-system

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system

NAME DOMAINS MATCH VIRTUAL SERVICE

http.8080 webapp.istioinaction.io /* webapp-vs-from-gw.istioinaction

...

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system -o json --name http.8080

[

{

"name": "http.8080",

"virtualHosts": [

{

"name": "webapp.istioinaction.io:80",

"domains": [

"webapp.istioinaction.io" #1 Domain to match

],

"routes": [

{

"match": {

"prefix": "/"

},

"route": { #2 Where to route

"cluster": "outbound|80||webapp.istioinaction.svc.cluster.local",

"timeout": "0s",

"retryPolicy": {

"retryOn": "connect-failure,refused-stream,unavailable,cancelled,retriable-status-codes",

"numRetries": 2,

"retryHostPredicate": [

{

"name": "envoy.retry_host_predicates.previous_hosts",

"typedConfig": {

"@type": "type.googleapis.com/envoy.extensions.retry.host.previous_hosts.v3.PreviousHostsPredicate"

}

}

],

"hostSelectionRetryMaxAttempts": "5",

"retriableStatusCodes": [

503

]

},

"maxGrpcTimeout": "0s"

},

"metadata": {

"filterMetadata": {

"istio": {

"config": "/apis/networking.istio.io/v1alpha3/namespaces/istioinaction/virtual-service/webapp-vs-from-gw"

}

}

},

"decorator": {

"operation": "webapp.istioinaction.svc.cluster.local:80/*"

}

}

],

"includeRequestAttemptCount": true

}

],

"validateClusters": false,

"ignorePortInHostMatching": true

}

]

# The actual applications (services) are not deployed yet, so the cluster list has no webapp or catalog entries.

docker exec -it myk8s-control-plane istioctl proxy-config cluster deploy/istio-ingressgateway.istio-system

...

- Deploy the actual applications (services) so that routing works

# Logs

kubectl stern -n istioinaction -l app=webapp

kubectl stern -n istioinaction -l app=catalog

kubectl stern -n istio-system -l app=istiod

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:59:52.736261Z info ads LDS: PUSH request for node:webapp-7685bcb84-mck2d.istioinaction resources:21 size:50.1kB

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:59:52.749641Z info ads RDS: PUSH request for node:webapp-7685bcb84-mck2d.istioinaction resources:13 size:9.9kB cached:12/13

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:59:54.076322Z info ads CDS: PUSH for node:webapp-7685bcb84-mck2d.istioinaction resources:28 size:26.4kB cached:22/24

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:59:54.076489Z info ads EDS: PUSH for node:webapp-7685bcb84-mck2d.istioinaction resources:24 size:4.0kB empty:0 cached:24/24

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:59:54.078289Z info ads LDS: PUSH for node:webapp-7685bcb84-mck2d.istioinaction resources:21 size:50.1kB

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:59:54.078587Z info ads RDS: PUSH for node:webapp-7685bcb84-mck2d.istioinaction resources:13 size:9.9kB cached:12/13

istiod-7df6ffc78d-w9szx discovery 2025-04-13T05:59:54.185611Z info ads Push debounce stable[37] 1 for config ServiceEntry/istioinaction/webapp.istioinaction.svc.cluster.local: 100.490667ms since last change, 100.490459ms since last push, full=false

...

# Deploy

cat services/catalog/kubernetes/catalog.yaml

cat services/webapp/kubernetes/webapp.yaml

kubectl apply -f services/catalog/kubernetes/catalog.yaml -n istioinaction

kubectl apply -f services/webapp/kubernetes/webapp.yaml -n istioinaction

# Verify

kubectl get pod -n istioinaction -owide

NAME READY STATUS RESTARTS AGE

catalog-6cf4b97d-nwh9w 2/2 Running 0 10m

webapp-7685bcb84-mck2d 2/2 Running 0 10m

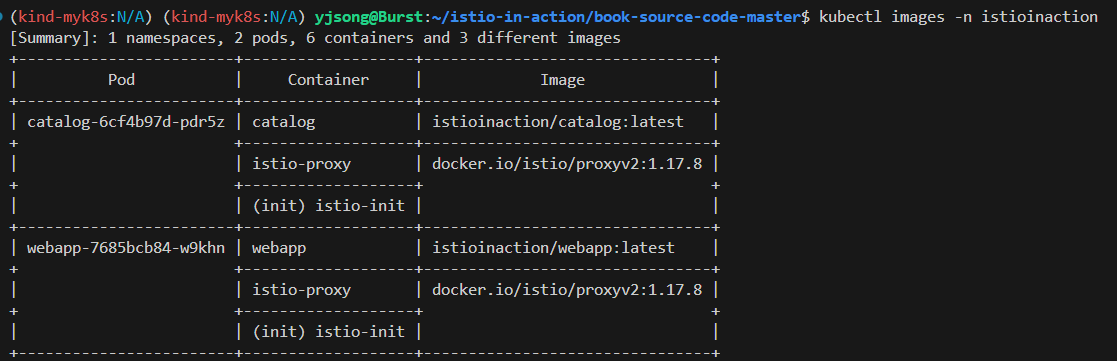

# Install the krew plugin images, then use it

kubectl images -n istioinaction

[Summary]: 1 namespaces, 2 pods, 6 containers and 3 different images

+------------------------+-------------------+--------------------------------+

| Pod | Container | Image |

+------------------------+-------------------+--------------------------------+

| catalog-6cf4b97d-nwh9w | catalog | istioinaction/catalog:latest |

+ +-------------------+--------------------------------+

| | istio-proxy | docker.io/istio/proxyv2:1.17.8 |

+ +-------------------+ +

| | (init) istio-init | |

+------------------------+-------------------+--------------------------------+

| webapp-7685bcb84-mck2d | webapp | istioinaction/webapp:latest |

+ +-------------------+--------------------------------+

| | istio-proxy | docker.io/istio/proxyv2:1.17.8 |

+ +-------------------+ +

| | (init) istio-init | |

+------------------------+-------------------+--------------------------------+

# Install the krew plugin resource-capacity, then use it: check per-container CPU/memory requests and limits for pods in the istioinaction namespace

kubectl resource-capacity -n istioinaction -c --pod-count

kubectl resource-capacity -n istioinaction -c --pod-count -u

NODE POD CONTAINER CPU REQUESTS CPU LIMITS CPU UTIL MEMORY REQUESTS MEMORY LIMITS MEMORY UTIL POD COUNT

myk8s-control-plane * * 200m (2%) 4000m (50%) 9m (0%) 256Mi (2%) 2048Mi (17%) 129Mi (1%) 2/110

myk8s-control-plane catalog-6cf4b97d-m7jq9 * 100m (1%) 2000m (25%) 5m (0%) 128Mi (1%) 1024Mi (8%) 70Mi (0%)

myk8s-control-plane catalog-6cf4b97d-m7jq9 catalog 0m (0%) 0m (0%) 0m (0%) 0Mi (0%) 0Mi (0%) 22Mi (0%)

myk8s-control-plane catalog-6cf4b97d-m7jq9 istio-proxy 100m (1%) 2000m (25%) 5m (0%) 128Mi (1%) 1024Mi (8%) 48Mi (0%)

...

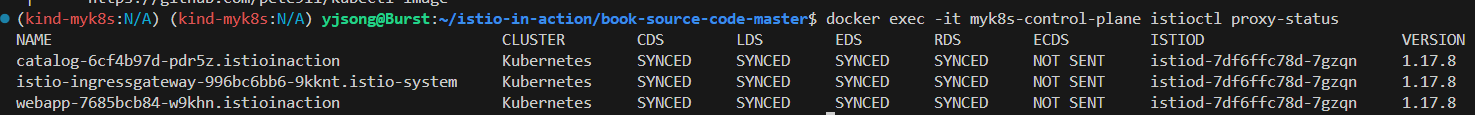

#

docker exec -it myk8s-control-plane istioctl proxy-status

NAME CLUSTER CDS LDS EDS RDS ECDS ISTIOD VERSION

istio-ingressgateway-996bc6bb6-p6k79.istio-system Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-7df6ffc78d-w9szx 1.17.8

catalog-6cf4b97d-nwh9w.istioinaction Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-7df6ffc78d-w9szx 1.17.8

webapp-7685bcb84-mck2d.istioinaction Kubernetes SYNCED SYNCED SYNCED SYNCED NOT SENT istiod-7df6ffc78d-w9szx 1.17.8

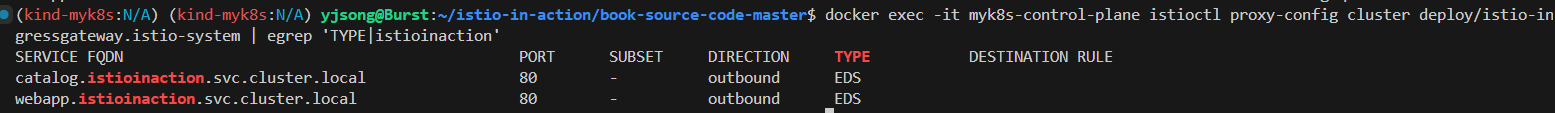

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/istio-ingressgateway.istio-system

docker exec -it myk8s-control-plane istioctl proxy-config cluster deploy/istio-ingressgateway.istio-system | egrep 'TYPE|istioinaction'

SERVICE FQDN PORT SUBSET DIRECTION TYPE DESTINATION RULE

catalog.istioinaction.svc.cluster.local 80 - outbound EDS

webapp.istioinaction.svc.cluster.local 80 - outbound EDS

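# istio-ingressgateway does not forward to the catalog/webapp Service (ClusterIP); it forwards directly to the Endpoints, i.e. the pod IPs.

## Without Istio, traffic to a Service (ClusterIP) has to be translated by iptables/conntrack rules on the node.

## With Istio, the proxy targets the pod IPs directly, so this hop skips the node's ClusterIP iptables/conntrack translation, which makes routing more efficient.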

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/istio-ingressgateway.istio-system | egrep 'ENDPOINT|istioinaction'

ENDPOINT STATUS OUTLIER CHECK CLUSTER

10.10.0.18:3000 HEALTHY OK outbound|80||catalog.istioinaction.svc.cluster.local

10.10.0.19:8080 HEALTHY OK outbound|80||webapp.istioinaction.svc.cluster.local
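The CLUSTER column above packs four fields into one name, separated by `|`: direction, port, subset, and service hostname. A minimal sketch for splitting such a name, assuming a hypothetical helper called parse_cluster_name (it is not an istioctl feature); the subset is printed as `-` when empty, as in the listing above.

```shell
# Split an Istio-generated Envoy cluster name into its four fields.
# Format: direction|port|subset|hostname
# parse_cluster_name is a hypothetical helper introduced only for illustration.
parse_cluster_name() {
  # A non-whitespace IFS keeps the empty subset field between "||".
  IFS='|' read -r direction port subset host <<EOF
$1
EOF
  printf 'direction=%s port=%s subset=%s host=%s\n' \
    "$direction" "$port" "${subset:--}" "$host"
}

parse_cluster_name 'outbound|80||webapp.istioinaction.svc.cluster.local'
# prints: direction=outbound port=80 subset=- host=webapp.istioinaction.svc.cluster.local
```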

#

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/webapp.istioinaction

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/webapp.istioinaction

docker exec -it myk8s-control-plane istioctl proxy-config cluster deploy/webapp.istioinaction

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/webapp.istioinaction

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/webapp.istioinaction

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/webapp.istioinaction | egrep 'ENDPOINT|istioinaction'

ENDPOINT STATUS OUTLIER CHECK CLUSTER

10.10.0.18:3000 HEALTHY OK outbound|80||catalog.istioinaction.svc.cluster.local

10.10.0.19:8080 HEALTHY OK outbound|80||webapp.istioinaction.svc.cluster.local

# At this point every istio-proxy knows the K8s Service (Endpoint) information via EDS

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/catalog.istioinaction

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/catalog.istioinaction

docker exec -it myk8s-control-plane istioctl proxy-config cluster deploy/catalog.istioinaction

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/catalog.istioinaction

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/catalog.istioinaction

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/catalog.istioinaction | egrep 'ENDPOINT|istioinaction'

ENDPOINT STATUS OUTLIER CHECK CLUSTER

10.10.0.18:3000 HEALTHY OK outbound|80||catalog.istioinaction.svc.cluster.local

10.10.0.19:8080 HEALTHY OK outbound|80||webapp.istioinaction.svc.cluster.local

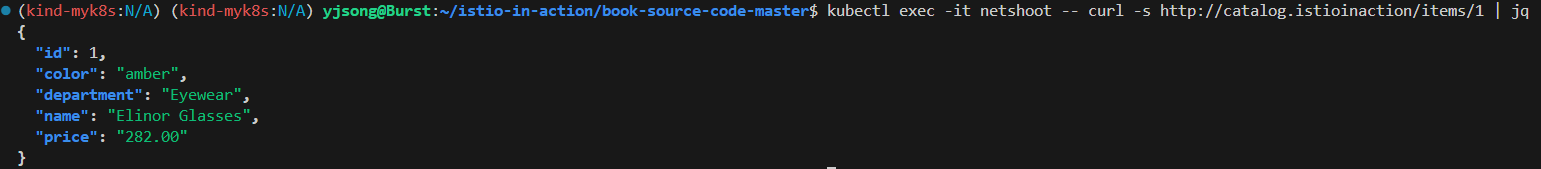

# Check access to catalog from inside the cluster with netshoot

kubectl exec -it netshoot -- curl -s http://catalog.istioinaction/items/1 | jq

{

"id": 1,

"color": "amber",

"department": "Eyewear",

"name": "Elinor Glasses",

"price": "282.00"

}

# Check access to webapp from inside the cluster with netshoot: webapp acts as a facade for the other backend services.

kubectl exec -it netshoot -- curl -s http://webapp.istioinaction/api/catalog/items/1 | jq

{

"id": 1,

"color": "amber",

"department": "Eyewear",

"name": "Elinor Glasses",

"price": "282.00"

}

- Try calling from outside the cluster

# Terminal: raise the istio-ingressgateway logging level

kubectl exec -it deploy/istio-ingressgateway -n istio-system -- curl -X POST http://localhost:15000/logging?http=debug

kubectl stern -n istio-system -l app=istio-ingressgateway

or

kubectl logs -n istio-system -l app=istio-ingressgateway -f

istio-ingressgateway-996bc6bb6-p6k79 istio-proxy 2025-04-13T06:22:13.389828Z debug envoy http external/envoy/source/common/http/conn_manager_impl.cc:1032 [C4403][S8504958039178930365] request end stream thread=42

istio-ingressgateway-996bc6bb6-p6k79 istio-proxy 2025-04-13T06:22:13.390654Z debug envoy http external/envoy/source/common/http/filter_manager.cc:917 [C4403][S8504958039178930365] Preparing local reply with details route_not_found thread=42

istio-ingressgateway-996bc6bb6-p6k79 istio-proxy 2025-04-13T06:22:13.390756Z debug envoy http external/envoy/source/common/http/filter_manager.cc:959 [C4403][S8504958039178930365] Executing sending local reply. thread=42

istio-ingressgateway-996bc6bb6-p6k79 istio-proxy 2025-04-13T06:22:13.390789Z debug envoy http external/envoy/source/common/http/conn_manager_impl.cc:1687 [C4403][S8504958039178930365] encoding headers via codec (end_stream=true):

istio-ingressgateway-996bc6bb6-p6k79 istio-proxy ':status', '404'

...

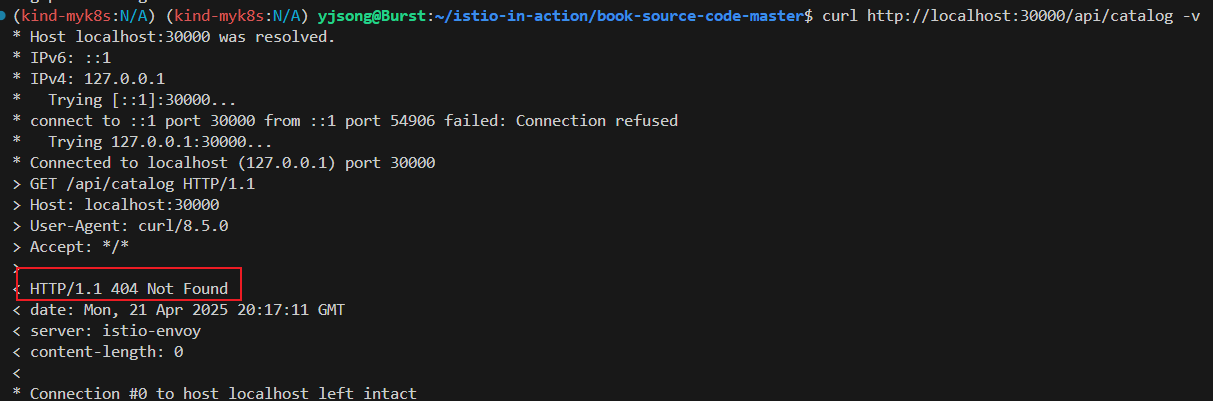

# Call from outside(?): the Host header is not a host the gateway recognizes

curl http://localhost:30000/api/catalog -v

* Host localhost:30000 was resolved.

* IPv6: ::1

* IPv4: 127.0.0.1

* Trying [::1]:30000...

* Connected to localhost (::1) port 30000

> GET /api/catalog HTTP/1.1

> Host: localhost:30000

> User-Agent: curl/8.7.1

> Accept: */*

>

* Request completely sent off

< HTTP/1.1 404 Not Found

< date: Sun, 13 Apr 2025 06:22:12 GMT

< server: istio-envoy

< content-length: 0

<

* Connection #0 to host localhost left intact

# Call again with the Host header set in curl

curl -s http://localhost:30000/api/catalog -H "Host: webapp.istioinaction.io" | jq

[

{

"id": 1,

"color": "amber",

"department": "Eyewear",

"name": "Elinor Glasses",

"price": "282.00"

...
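The two calls above differ only in the Host header: the route's virtual host lists the domain webapp.istioinaction.io, and the route config sets ignorePortInHostMatching, so `localhost:30000` falls through to a 404. A rough model of that decision, assuming a hypothetical helper vhost_matches that covers only exact names, `*`, and `*.suffix` wildcards (a simplification of Envoy's matching, and it does not handle IPv6 literals):

```shell
# Rough model of virtual-host selection from the Host header.
# vhost_matches is a hypothetical helper, not Envoy's actual implementation.
vhost_matches() {
  host="${1%%:*}"   # drop an optional :port, mirroring ignorePortInHostMatching
  domain="$2"
  case "$domain" in
    '*')   return 0 ;;                       # catch-all virtual host
    '*.'*) case "$host" in                   # suffix wildcard such as *.istioinaction.io
             *"${domain#\*}") return 0 ;;
           esac
           return 1 ;;
    *)     [ "$host" = "$domain" ] ;;        # exact match
  esac
}

vhost_matches 'webapp.istioinaction.io:30000' 'webapp.istioinaction.io' && echo "routed"
vhost_matches 'localhost:30000' 'webapp.istioinaction.io' || echo "404"
```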

Securing gateway traffic

- HTTP traffic with TLS (hands-on)

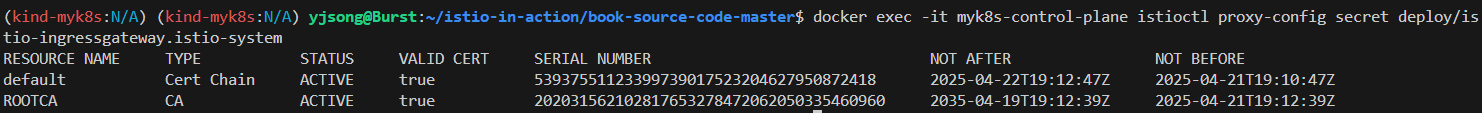

- To make the default istio-ingressgateway use a certificate and key, first create them as a Kubernetes secret

#

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/istio-ingressgateway.istio-system

RESOURCE NAME TYPE STATUS VALID CERT SERIAL NUMBER NOT AFTER NOT BEFORE

default Cert Chain ACTIVE true 5384840145379860934113500875842526824 2025-04-14T11:51:01Z 2025-04-13T11:49:01Z

ROOTCA CA ACTIVE true 218882737649632505517373517490277818381 2035-04-11T11:50:53Z 2025-04-13T11:50:53Z

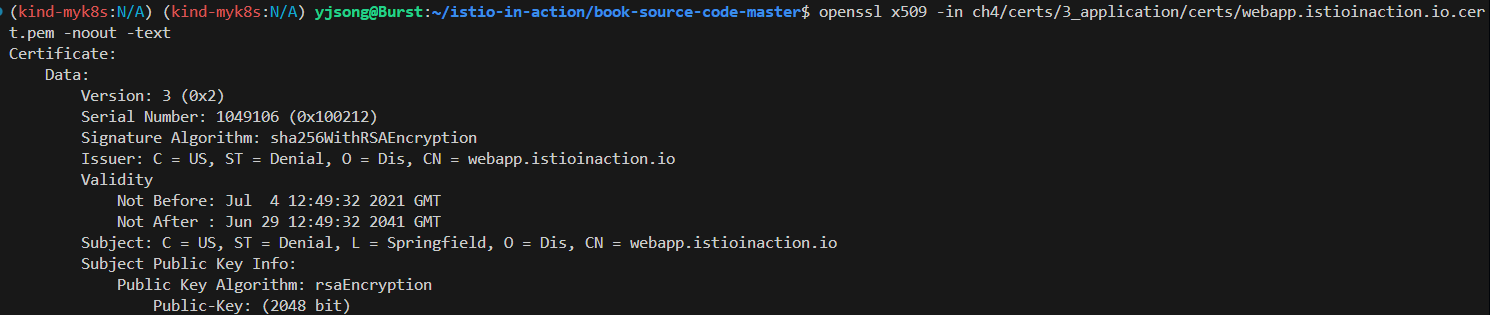

# Inspect the files

cat ch4/certs/3_application/private/webapp.istioinaction.io.key.pem # private key

cat ch4/certs/3_application/certs/webapp.istioinaction.io.cert.pem

openssl x509 -in ch4/certs/3_application/certs/webapp.istioinaction.io.cert.pem -noout -text

...

Issuer: C=US, ST=Denial, O=Dis, CN=webapp.istioinaction.io

Validity

Not Before: Jul 4 12:49:32 2021 GMT

Not After : Jun 29 12:49:32 2041 GMT

Subject: C=US, ST=Denial, L=Springfield, O=Dis, CN=webapp.istioinaction.io

...

X509v3 extensions:

X509v3 Basic Constraints:

CA:FALSE

Netscape Cert Type:

SSL Server

Netscape Comment:

OpenSSL Generated Server Certificate

X509v3 Subject Key Identifier:

87:0E:5E:A4:4C:A5:57:C5:6D:97:95:64:C4:7D:60:1E:BB:07:94:F4

X509v3 Authority Key Identifier:

keyid:B9:F3:84:08:22:37:2C:D3:75:18:D2:07:C4:6F:4E:67:A9:0C:7D:14

DirName:/C=US/ST=Denial/L=Springfield/O=Dis/CN=webapp.istioinaction.io

serial:10:02:12

X509v3 Key Usage: critical

Digital Signature, Key Encipherment

X509v3 Extended Key Usage:

TLS Web Server Authentication

...

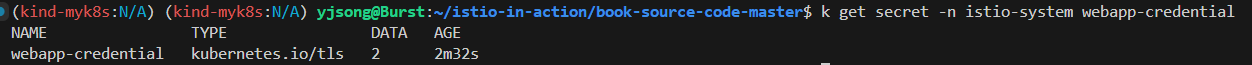

# Create the webapp-credential secret

kubectl create -n istio-system secret tls webapp-credential \
  --key ch4/certs/3_application/private/webapp.istioinaction.io.key.pem \
  --cert ch4/certs/3_application/certs/webapp.istioinaction.io.cert.pem

# Verify: krew view-secret

kubectl view-secret -n istio-system webapp-credential --all

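- Configure the Istio Gateway resource to use the certificate and key

- In the Gateway resource, open port 443 on the ingress gateway and declare it as HTTPS

- Also add a tls section to the gateway configuration to specify where the certificate and key used for TLS are located

- Confirm that this location matches the secret created above for istio-ingressgateway (webapp-credential)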

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: coolstore-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80 #1 Allow HTTP traffic
      name: http
      protocol: HTTP
    hosts:
    - "webapp.istioinaction.io"
  - port:
      number: 443 #2 Allow HTTPS traffic
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE #3 Secure connection
      credentialName: webapp-credential #4 Name of the Kubernetes secret holding the TLS certificate
    hosts:
    - "webapp.istioinaction.io"

#

cat ch4/coolstore-gw-tls.yaml

kubectl apply -f ch4/coolstore-gw-tls.yaml -n istioinaction

gateway.networking.istio.io/coolstore-gateway configured

#

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/istio-ingressgateway.istio-system

RESOURCE NAME TYPE STATUS VALID CERT SERIAL NUMBER NOT AFTER NOT BEFORE

default Cert Chain ACTIVE true 135624833228815846167305474128694379500 2025-04-14T03:57:41Z 2025-04-13T03:55:41Z

kubernetes://webapp-credential Cert Chain ACTIVE true 1049106 2041-06-29T12:49:32Z 2021-07-04T12:49:32Z

ROOTCA CA ACTIVE true 236693590886065381062210660883183746411 2035-04-11T03:57:31Z 2025-04-13T03:57:31Z

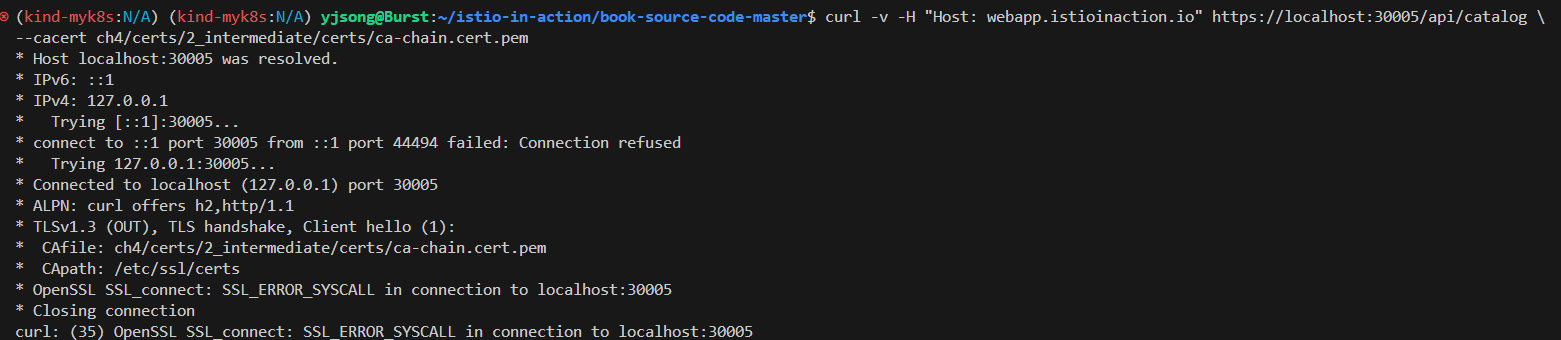

# Call test 1

curl -v -H "Host: webapp.istioinaction.io" https://localhost:30005/api/catalog

* Host localhost:30005 was resolved.

* IPv6: ::1

* IPv4: 127.0.0.1

* Trying [::1]:30005...

* Connected to localhost (::1) port 30005

* ALPN: curl offers h2,http/1.1

* (304) (OUT), TLS handshake, Client hello (1):

* CAfile: /etc/ssl/cert.pem

* CApath: none

* LibreSSL SSL_connect: SSL_ERROR_SYSCALL in connection to localhost:30005

* Closing connection

curl: (35) LibreSSL SSL_connect: SSL_ERROR_SYSCALL in connection to localhost:30005

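# This means the certificate presented by the server (the istio-ingressgateway pod) cannot be verified with the default CA certificate chain.

# Let's pass the proper CA certificate chain to the curl client.

# (Call failed) Cause: no matching certificate in the default certificate path. The server certificate is privately issued, so the private CA certificate (chain) is required.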

#

kubectl exec -it deploy/istio-ingressgateway -n istio-system -- ls -l /etc/ssl/certs

...

lrwxrwxrwx 1 root root 23 Oct 4 2023 f081611a.0 -> Go_Daddy_Class_2_CA.pem

lrwxrwxrwx 1 root root 47 Oct 4 2023 f0c70a8d.0 -> SSL.com_EV_Root_Certification_Authority_ECC.pem

lrwxrwxrwx 1 root root 44 Oct 4 2023 f249de83.0 -> Trustwave_Global_Certification_Authority.pem

lrwxrwxrwx 1 root root 41 Oct 4 2023 f30dd6ad.0 -> USERTrust_ECC_Certification_Authority.pem

lrwxrwxrwx 1 root root 34 Oct 4 2023 f3377b1b.0 -> Security_Communication_Root_CA.pem

lrwxrwxrwx 1 root root 24 Oct 4 2023 f387163d.0 -> Starfield_Class_2_CA.pem

lrwxrwxrwx 1 root root 18 Oct 4 2023 f39fc864.0 -> SecureTrust_CA.pem

lrwxrwxrwx 1 root root 20 Oct 4 2023 f51bb24c.0 -> Certigna_Root_CA.pem

lrwxrwxrwx 1 root root 20 Oct 4 2023 fa5da96b.0 -> GLOBALTRUST_2020.pem

...

#

cat ch4/certs/2_intermediate/certs/ca-chain.cert.pem

openssl x509 -in ch4/certs/2_intermediate/certs/ca-chain.cert.pem -noout -text

...

X509v3 Basic Constraints: critical

CA:TRUE, pathlen:0

...

# Call test 2

curl -v -H "Host: webapp.istioinaction.io" https://localhost:30005/api/catalog \
  --cacert ch4/certs/2_intermediate/certs/ca-chain.cert.pem

* Host localhost:30005 was resolved.

* IPv6: ::1

* IPv4: 127.0.0.1

* Trying [::1]:30005...

* Connected to localhost (::1) port 30005

* ALPN: curl offers h2,http/1.1

* (304) (OUT), TLS handshake, Client hello (1):

* CAfile: ch4/certs/2_intermediate/certs/ca-chain.cert.pem

* CApath: none

* LibreSSL SSL_connect: SSL_ERROR_SYSCALL in connection to localhost:30005

* Closing connection

curl: (35) LibreSSL SSL_connect: SSL_ERROR_SYSCALL in connection to localhost:30005

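# (Call failed) Cause: verification failed. The call did not use the domain the server certificate was issued for, "webapp.istioinaction.io" (it used localhost)

# Temporary setting for domain resolution: remove it after finishing the lab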

echo "127.0.0.1 webapp.istioinaction.io" | sudo tee -a /etc/hosts

cat /etc/hosts | tail -n 1
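As an alternative to editing /etc/hosts, curl's `--resolve HOST:PORT:ADDRESS` flag pins the name for a single invocation and leaves nothing to clean up. A small sketch, assuming a hypothetical helper resolve_entry that only assembles the flag value; the curl call in the comment needs the gateway from this lab.

```shell
# Assemble the HOST:PORT:ADDRESS value expected by curl --resolve.
# resolve_entry is a hypothetical helper introduced only for illustration.
resolve_entry() {
  printf '%s:%s:%s' "$1" "$2" "$3"
}

# Usage (requires the gateway from this walkthrough):
#   curl -v https://webapp.istioinaction.io:30005/api/catalog \
#     --cacert ch4/certs/2_intermediate/certs/ca-chain.cert.pem \
#     --resolve "$(resolve_entry webapp.istioinaction.io 30005 127.0.0.1)"
```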

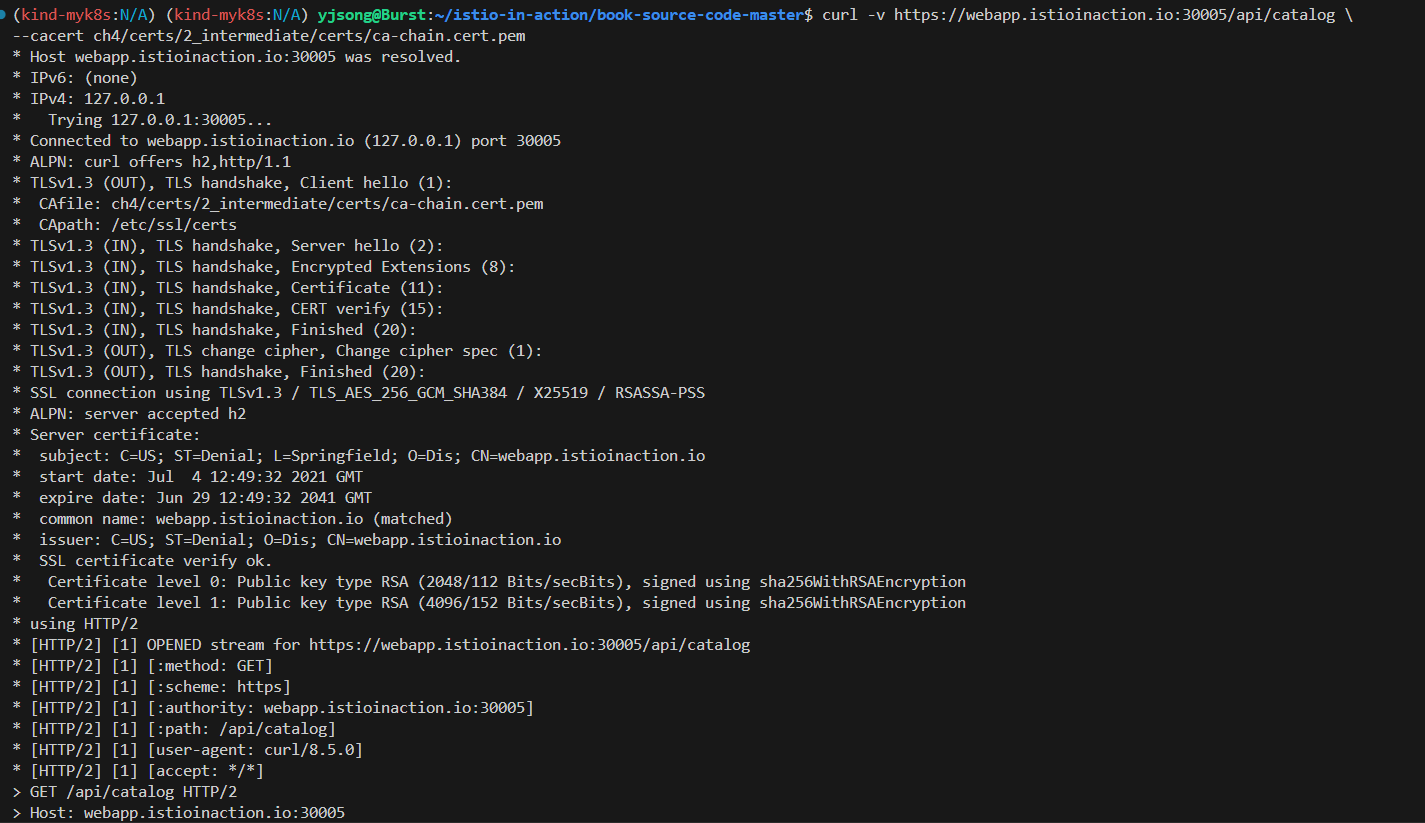

# Call test 3

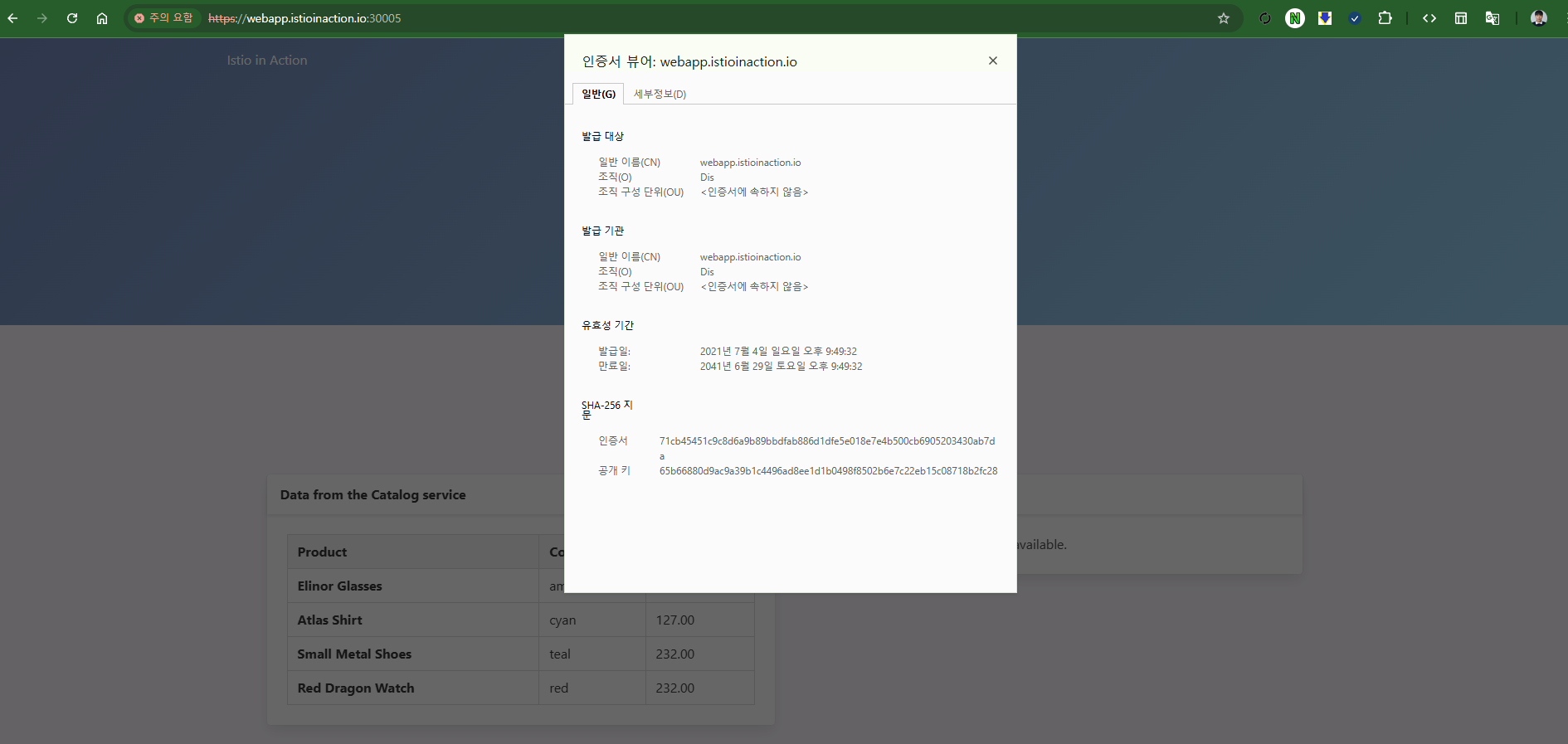

curl -v https://webapp.istioinaction.io:30005/api/catalog \
  --cacert ch4/certs/2_intermediate/certs/ca-chain.cert.pem

open https://webapp.istioinaction.io:30005

open https://webapp.istioinaction.io:30005/api/catalog

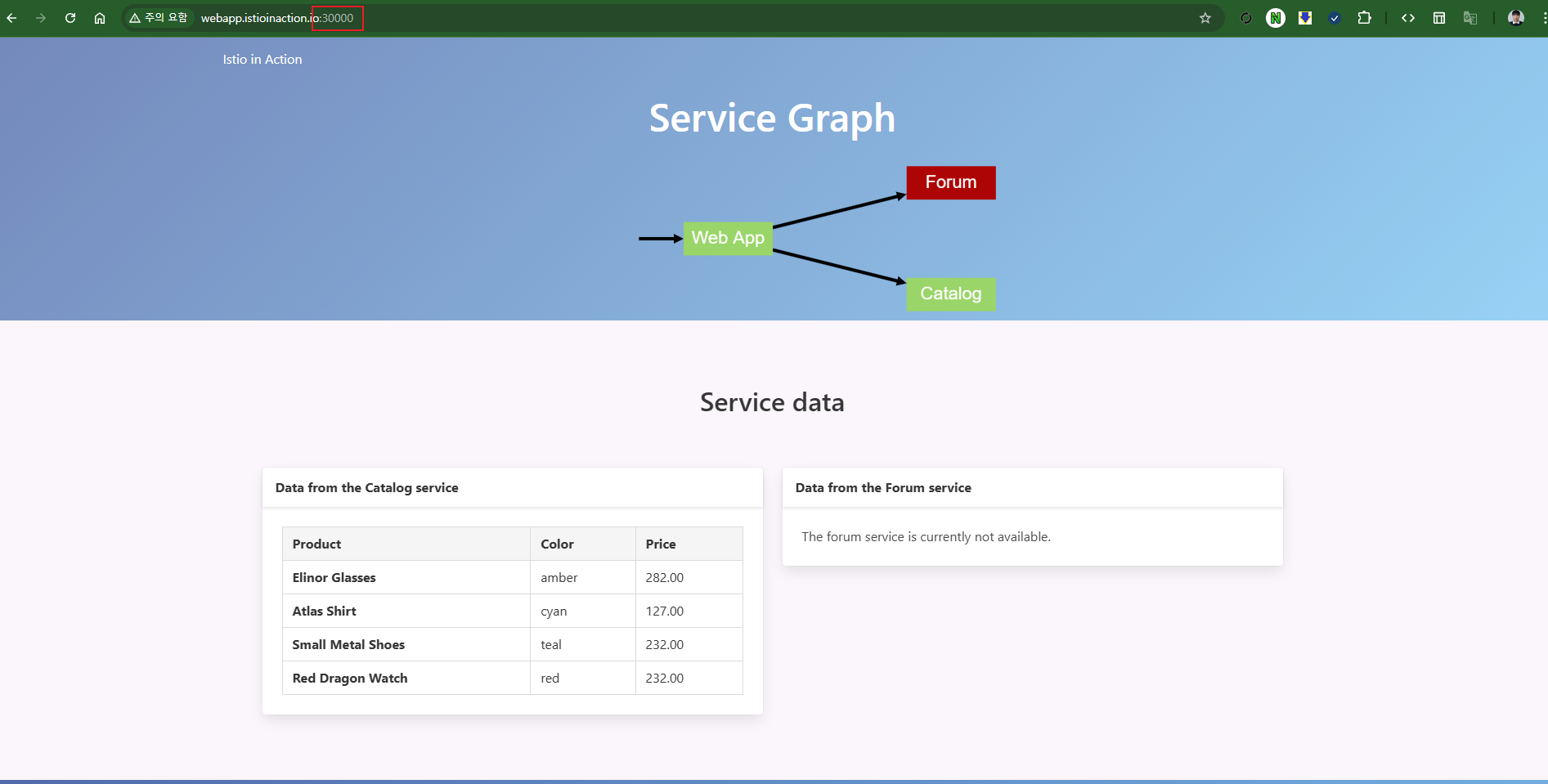

# Check plain HTTP access as well

curl -v http://webapp.istioinaction.io:30000/api/catalog

open http://webapp.istioinaction.io:30000

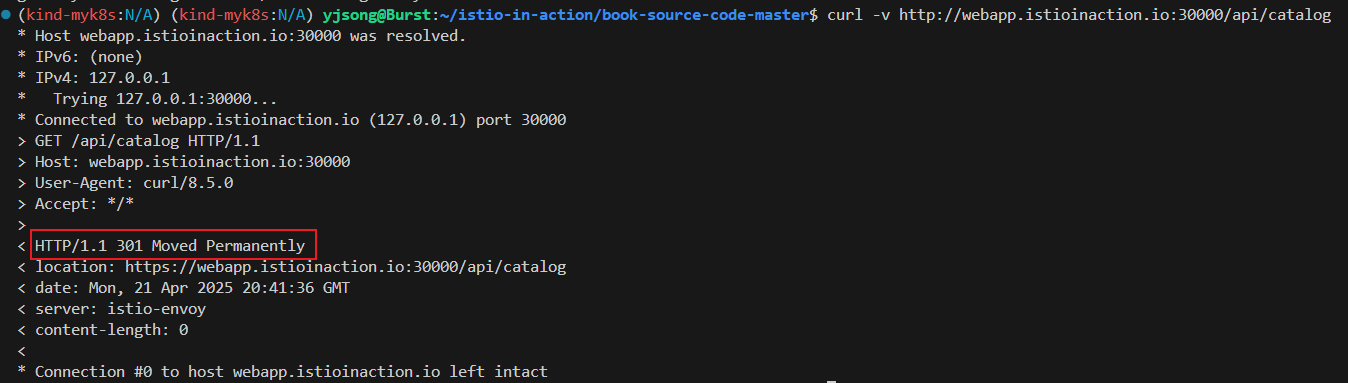

HTTP redirect to HTTPS (hands-on)

- Force all traffic to always use TLS: modify the Gateway so that HTTP traffic is redirected to HTTPS

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: coolstore-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "webapp.istioinaction.io"
    tls:
      httpsRedirect: true
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE
      credentialName: webapp-credential
    hosts:
    - "webapp.istioinaction.io"

- Apply

#

kubectl apply -f ch4/coolstore-gw-tls-redirect.yaml -n istioinaction

# HTTP 301 redirect

curl -v http://webapp.istioinaction.io:30000/api/catalog

* Host webapp.istioinaction.io:30000 was resolved.

* IPv6: (none)

* IPv4: 127.0.0.1

* Trying 127.0.0.1:30000...

* Connected to webapp.istioinaction.io (127.0.0.1) port 30000

> GET /api/catalog HTTP/1.1

> Host: webapp.istioinaction.io:30000

> User-Agent: curl/8.7.1

> Accept: */*

>

* Request completely sent off

< HTTP/1.1 301 Moved Permanently

< location: https://webapp.istioinaction.io:30000/api/catalog

< date: Sun, 13 Apr 2025 08:01:31 GMT

< server: istio-envoy

< content-length: 0

<

* Connection #0 to host webapp.istioinaction.io left intact
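The Location header in the output above keeps port 30000, which in this kind setup is the NodePort for the gateway's HTTP port, so following the redirect as-is would not reach the HTTPS listener on 30005. A small sketch for checking the redirect and rewriting the port by hand; the helper names are hypothetical, and the port numbers are assumptions taken from this lab's NodePort mapping:

```shell
# Print "<status> <redirect target>" for a URL without following the redirect.
check_redirect() {
  curl -s -o /dev/null -w '%{http_code} %{redirect_url}\n' "$1"
}

# Swap the HTTP NodePort (30000) for the HTTPS NodePort (30005) in a redirect URL.
to_https_nodeport() {
  printf '%s\n' "$1" | sed 's#^https://\([^/:]*\):30000#https://\1:30005#'
}

# Example (requires the lab cluster):
# check_redirect http://webapp.istioinaction.io:30000/api/catalog

# Pure string rewrite, runs anywhere:
to_https_nodeport "https://webapp.istioinaction.io:30000/api/catalog"
```

In a real deployment where the gateway listens on 80 and 443 directly, the port rewrite would not be needed.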

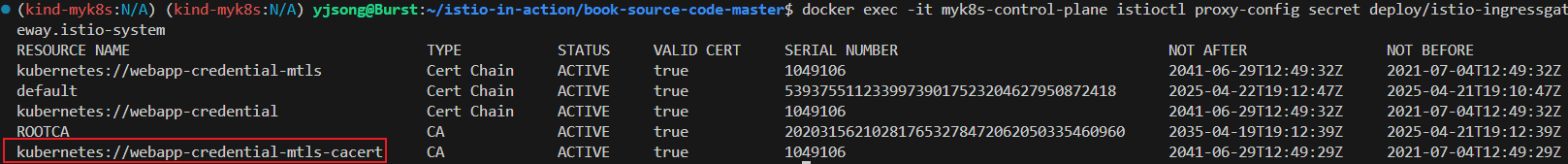

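HTTP traffic with mutual TLS (hands-on)

- For the default istio-ingressgateway to take part in mTLS connections, it needs a set of CA certificates it can use to verify the client's certificate.

- This CA certificate (more precisely, the certificate chain) must be made available to istio-ingressgateway through a Kubernetes secret.

- Configure the secret with the appropriate CA certificate chain (referred to here as istio-ingressgateway-ca-cert, although the command in this lab creates it as webapp-credential-mtls).

# Check the certificate files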

cat ch4/certs/3_application/private/webapp.istioinaction.io.key.pem

cat ch4/certs/3_application/certs/webapp.istioinaction.io.cert.pem

cat ch4/certs/2_intermediate/certs/ca-chain.cert.pem

openssl x509 -in ch4/certs/2_intermediate/certs/ca-chain.cert.pem -noout -text

# Create the Secret: server key and certificate, plus the CA certificate chain (ca.crt) used to verify client certificates

kubectl create -n istio-system secret generic webapp-credential-mtls \
  --from-file=tls.key=ch4/certs/3_application/private/webapp.istioinaction.io.key.pem \
  --from-file=tls.crt=ch4/certs/3_application/certs/webapp.istioinaction.io.cert.pem \
  --from-file=ca.crt=ch4/certs/2_intermediate/certs/ca-chain.cert.pem

# Verify

kubectl view-secret -n istio-system webapp-credential-mtls --all
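Before wiring the secret into the Gateway, it can help to confirm that the client certificate used in the later call test actually chains to the CA bundle stored as ca.crt. A sketch using `openssl verify`; `verify_against_ca` is a hypothetical helper, and it assumes ca-chain.cert.pem contains the full chain up to the root:

```shell
# Return success if CERT validates against the CA bundle in CAFILE.
verify_against_ca() {
  cafile=$1 cert=$2
  openssl verify -CAfile "$cafile" "$cert"
}

# Example with the lab's files (paths taken from the commands in this post):
# verify_against_ca ch4/certs/2_intermediate/certs/ca-chain.cert.pem \
#   ch4/certs/4_client/certs/webapp.istioinaction.io.cert.pem
```

If the check fails here, the mTLS handshake at the gateway would be expected to fail for the same reason, which makes this a cheaper place to catch a wrong file path.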

- Now update the Istio Gateway resource to point at the CA certificate chain and set the expected protocol to mTLS

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: coolstore-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "webapp.istioinaction.io"
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: MUTUAL # enable mTLS
      credentialName: webapp-credential-mtls # credential that includes the trusted CA
    hosts:
    - "webapp.istioinaction.io"

#

kubectl apply -f ch4/coolstore-gw-mtls.yaml -n istioinaction

gateway.networking.istio.io/coolstore-gateway configured

# (Optional) Check the SDS logs

kubectl stern -n istio-system -l app=istiod

istiod-7df6ffc78d-w9szx discovery 2025-04-13T08:14:14.405397Z info ads SDS: PUSH request for node:istio-ingressgateway-996bc6bb6-p6k79.istio-system resources:0 size:0B cached:0/0 filtered:2

...

#

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/istio-ingressgateway.istio-system

RESOURCE NAME                               TYPE        STATUS  VALID CERT  SERIAL NUMBER                            NOT AFTER             NOT BEFORE
default                                     Cert Chain  ACTIVE  true        135624833228815846167305474128694379500  2025-04-14T03:57:41Z  2025-04-13T03:55:41Z
ROOTCA                                      CA          ACTIVE  true        236693590886065381062210660883183746411  2035-04-11T03:57:31Z  2025-04-13T03:57:31Z
kubernetes://webapp-credential              Cert Chain  ACTIVE  true        1049106                                  2041-06-29T12:49:32Z  2021-07-04T12:49:32Z
kubernetes://webapp-credential-mtls         Cert Chain  ACTIVE  true        1049106                                  2041-06-29T12:49:32Z  2021-07-04T12:49:32Z
kubernetes://webapp-credential-mtls-cacert  CA          ACTIVE  true        1049106                                  2041-06-29T12:49:29Z  2021-07-04T12:49:29Z
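In the listing above, the row with the `-cacert` suffix and type CA appears to correspond to the ca.crt key of the secret, so its presence is a quick sign that the gateway received the chain it needs to verify clients. A small sketch that checks the command output for that row; `has_cacert` is a hypothetical helper:

```shell
# Read `istioctl proxy-config secret` output on stdin and succeed if the
# CA entry for the given credentialName is listed as ACTIVE.
has_cacert() {
  grep -E "^kubernetes://$1-cacert[[:space:]]+CA[[:space:]]+ACTIVE" >/dev/null
}

# Example (requires the lab cluster; no -it so the output can be piped):
# docker exec myk8s-control-plane istioctl proxy-config secret \
#   deploy/istio-ingressgateway.istio-system \
#   | has_cacert webapp-credential-mtls && echo "CA chain loaded"
```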

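# Call test 1: (fails) no client certificate is sent, so the SSL handshake does not succeed and the connection is rejected

curl -v https://webapp.istioinaction.io:30005/api/catalog \
  --cacert ch4/certs/2_intermediate/certs/ca-chain.cert.pem

# In a web browser the connection also fails, because no client certificate is presented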

open https://webapp.istioinaction.io:30005

open https://webapp.istioinaction.io:30005/api/catalog

# Call test 2: succeeds once the client certificate and key are supplied

curl -v https://webapp.istioinaction.io:30005/api/catalog \
  --cacert ch4/certs/2_intermediate/certs/ca-chain.cert.pem \
  --cert ch4/certs/4_client/certs/webapp.istioinaction.io.cert.pem \
  --key ch4/certs/4_client/private/webapp.istioinaction.io.key.pem

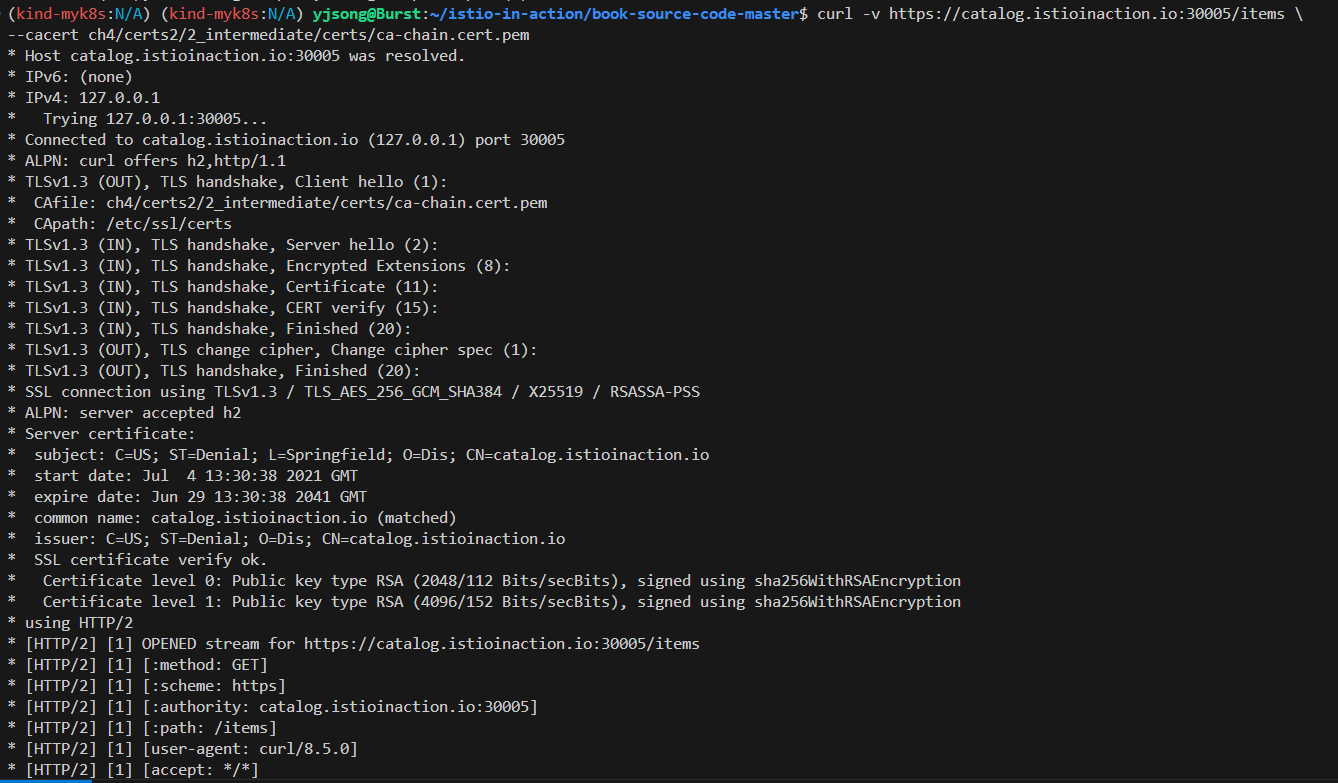

Serving multiple virtual hosts with TLS

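- Istio's ingress gateway can serve multiple virtual hosts, each with its own certificate and private key, on the same HTTPS port (443).

- To do this, add multiple entries for the same port and protocol.

- For example, both `webapp.istioinaction.io` and `catalog.istioinaction.io` can be added, each with its own certificate and key pair.

- An Istio Gateway resource that describes several virtual hosts served over HTTPS looks like this.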

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: coolstore-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 443
      name: https-webapp
      protocol: HTTPS
    tls:
      mode: SIMPLE
      credentialName: webapp-credential
    hosts:
    - "webapp.istioinaction.io"
  - port:
      number: 443
      name: https-catalog
      protocol: HTTPS
    tls:
      mode: SIMPLE
      credentialName: catalog-credential
    hosts:
    - "catalog.istioinaction.io"

- Note that both servers listen on port 443 and serve HTTPS, but their hostnames differ.

#

cat ch4/certs2/3_application/private/catalog.istioinaction.io.key.pem

cat ch4/certs2/3_application/certs/catalog.istioinaction.io.cert.pem

openssl x509 -in ch4/certs2/3_application/certs/catalog.istioinaction.io.cert.pem -noout -text

...

Issuer: C=US, ST=Denial, O=Dis, CN=catalog.istioinaction.io

Validity

Not Before: Jul 4 13:30:38 2021 GMT

Not After : Jun 29 13:30:38 2041 GMT

Subject: C=US, ST=Denial, L=Springfield, O=Dis, CN=catalog.istioinaction.io

...

#

kubectl create -n istio-system secret tls catalog-credential \
  --key ch4/certs2/3_application/private/catalog.istioinaction.io.key.pem \
  --cert ch4/certs2/3_application/certs/catalog.istioinaction.io.cert.pem

# Update the Gateway configuration

kubectl apply -f ch4/coolstore-gw-multi-tls.yaml -n istioinaction

# Create the VirtualService resource for the catalog service exposed through the Gateway

cat ch4/catalog-vs.yaml

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: catalog-vs-from-gw
spec:
  hosts:
  - "catalog.istioinaction.io"
  gateways:
  - coolstore-gateway
  http:
  - route:
    - destination:
        host: catalog
        port:
          number: 80

#

kubectl apply -f ch4/catalog-vs.yaml -n istioinaction

kubectl get gw,vs -n istioinaction

# Temporary hosts entry for name resolution: remove it once the lab is finished

echo "127.0.0.1 catalog.istioinaction.io" | sudo tee -a /etc/hosts

cat /etc/hosts | tail -n 2
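Since the hosts entries are meant to be temporary, here is one possible way to drop them again when the lab is finished. This is a sketch, not a step from the book; `without_host` is a hypothetical helper that only prints the filtered file, so nothing is changed until the result is copied back:

```shell
# Print a hosts-format file with every mapping for HOST dropped.
# Arguments: file path, hostname to remove.
without_host() {
  awk -v h="$2" '
    /^[[:space:]]*#/ { print; next }   # keep comment lines untouched
    { for (i = 2; i <= NF; i++) if ($i == h) next; print }
  ' "$1"
}

# Example for the real file (review /tmp/hosts.new before copying it back):
# without_host /etc/hosts webapp.istioinaction.io > /tmp/hosts.new
# sudo cp /tmp/hosts.new /etc/hosts
```

Matching on whole fields rather than with a regular expression avoids removing unrelated names that merely contain the hostname as a substring.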

# Call test 1 - webapp.istioinaction.io

curl -v https://webapp.istioinaction.io:30005/api/catalog \
  --cacert ch4/certs/2_intermediate/certs/ca-chain.cert.pem

# Call test 2 - catalog.istioinaction.io (note that the cacert path is under ch4/certs2/)

curl -v https://catalog.istioinaction.io:30005/items \
  --cacert ch4/certs2/2_intermediate/certs/ca-chain.cert.pem
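Both hosts share the same port on the gateway, so the certificate is selected from the SNI name the client sends. A sketch for checking which certificate comes back for each name, using `openssl s_client`; `extract_cn` and `presented_cn` are hypothetical helpers, and 127.0.0.1:30005 is the HTTPS NodePort assumed from this lab's kind config:

```shell
# Pull the CN value out of an openssl "subject=" line read from stdin.
# Handles both "CN = name" and "/CN=name" output styles.
extract_cn() {
  sed -n 's#.*CN *= *\([^,/]*\).*#\1#p'
}

# Connect with the given SNI name and print the CN of the certificate served.
# Arguments: SNI hostname, port.
presented_cn() {
  host=$1 port=$2
  openssl s_client -connect "127.0.0.1:${port}" -servername "$host" \
    </dev/null 2>/dev/null \
    | openssl x509 -noout -subject \
    | extract_cn
}

# Example (requires the lab cluster):
# presented_cn webapp.istioinaction.io 30005
# presented_cn catalog.istioinaction.io 30005
```

If the Gateway is configured as shown in this post, each call would be expected to print the hostname that was passed as the SNI name.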
